In brief

- Walrus, a decentralized data layer, aims to provide a "verifiable data foundation for AI workflows" as part of the Sui Stack.

- The Sui Stack includes Walrus, a data availability and provenance layer; Nautilus, a secure offchain environment; and Seal, an access control layer.

- Several AI teams have already chosen Walrus as their verifiable data platform, with Walrus functioning as "the data layer in a much larger AI stack."

AI models are getting faster, larger, and more capable. But as their outputs begin to shape decisions in finance, healthcare, enterprise software, and beyond, an important question needs answering: can we actually verify the data and processes behind those outputs?

"Most AI systems rely on data pipelines that nobody outside the organization can independently verify," said Rebecca Simmonds, Managing Executive of the Walrus Foundation, the organization that supports development of the Walrus decentralized data layer.

As she explains, there is no standard way to confirm where data came from, whether it was tampered with, or what was authorized for use in the pipeline. That gap doesn't just create compliance risk; it also erodes trust in the outputs AI produces.

"It's about moving from 'trust us' to 'verify this,'" Simmonds said, "and that shift matters most in financial, legal, and regulated environments where auditability isn't optional."

Why centralized logs aren't enough

Many AI deployments today rely on centralized infrastructure and internal audit logs. While these can provide some visibility, they still require trust in the entity running the system.

External stakeholders have no choice but to trust that the records haven't been altered. With a decentralized data layer, integrity is anchored cryptographically, so independent parties can verify the records without relying on a single operator.

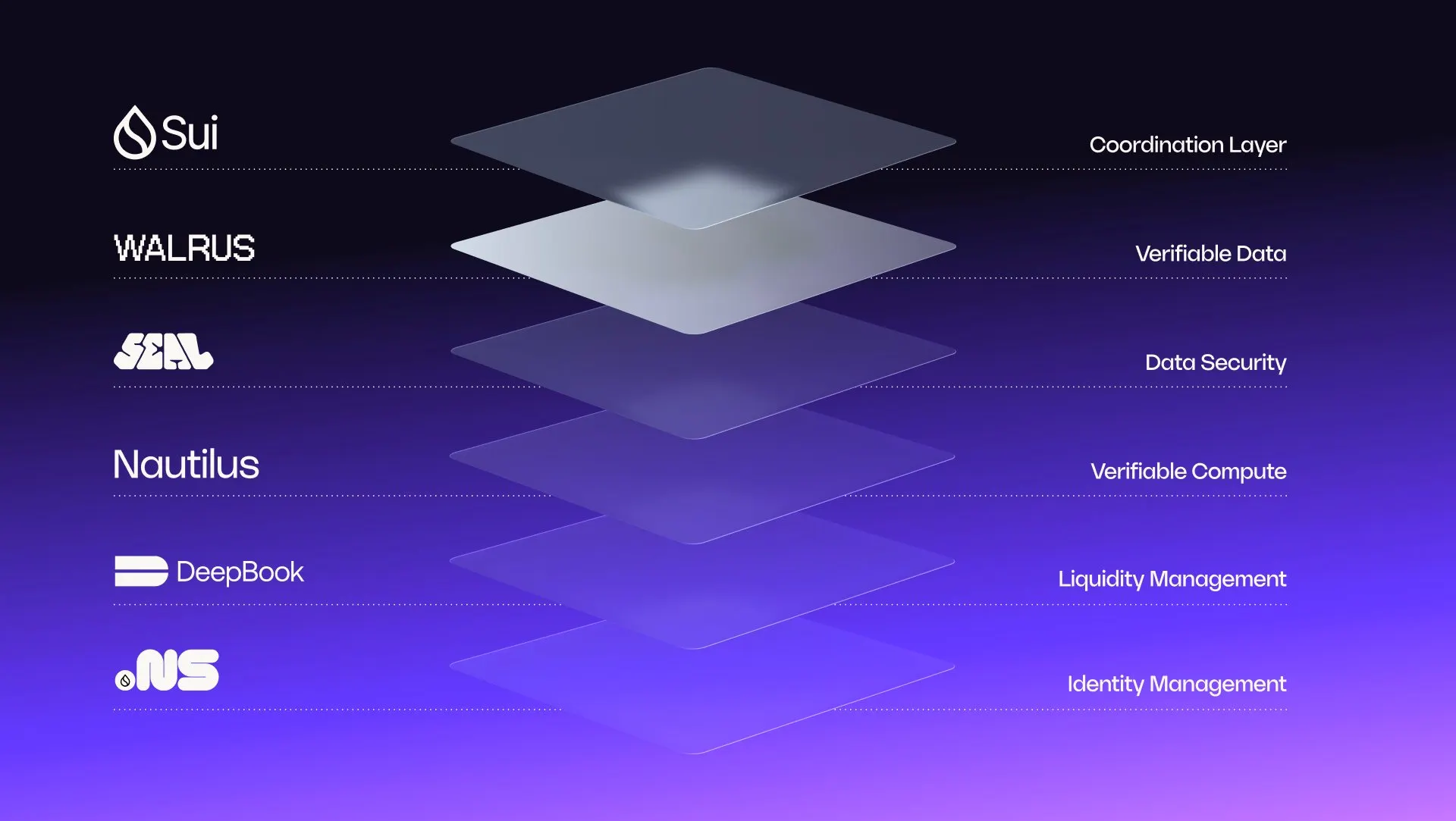

This is where Walrus positions itself, as the data foundation within a broader architecture referred to as the Sui Stack. Sui itself is a layer-1 blockchain network that records policy events and receipts onchain, coordinating access and logging verifiable activity across the stack.

"Walrus is the data availability and provenance layer—where each dataset gets a unique ID derived from its contents," Simmonds explained. "If the data changes by even a single byte, the ID changes. That makes it possible to verify that the data in a pipeline is exactly what it claims to be, hasn't been altered, and remains available."
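To make the idea of a content-derived ID concrete, here is a minimal Python sketch. It assumes a plain SHA-256 digest of the raw bytes serves as the identifier. Walrus derives its blob IDs with its own scheme, so this is not the Walrus API or its ID format; the names `content_id` and `verify` and the sample dataset are hypothetical and exist only for illustration.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Return a hex identifier derived solely from the bytes of `data`.

    Hypothetical helper: plain SHA-256, NOT Walrus's actual blob ID scheme.
    """
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_id: str) -> bool:
    """Check that `data` still matches the identifier recorded earlier."""
    return content_id(data) == expected_id


# Hypothetical sample dataset, standing in for a file in an AI pipeline.
dataset = b"ticker,price\nABC,101.5\n"
recorded_id = content_id(dataset)

# Change a single byte: "101.5" becomes "101.6".
tampered = dataset.replace(b"101.5", b"101.6")

print(verify(dataset, recorded_id))   # True: the data is unchanged
print(verify(tampered, recorded_id))  # False: one byte changed, so the ID changed
```

The design point is that the identifier is a function of the content alone: anyone holding the data and the recorded ID can rerun the check without trusting whoever stored it.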

Other components of the Sui Stack build on that foundation. Nautilus lets developers run AI workloads in a secure offchain environment and generate proofs that can be checked onchain, while Seal handles access control, letting teams define and enforce who can see or decrypt data, and under what conditions.

"Sui then ties everything together by recording the rules and proofs onchain," Simmonds said. "That gives developers, auditors, and users a shared record they can independently check."

"No single layer solves the full AI trust problem," she added. "But together, they form something important: a verifiable data foundation for AI workflows—data with provable provenance, access you can enforce, computation you can attest to, and an immutable record of how everything was used."

Several AI teams have already chosen Walrus as their verifiable data platform, Simmonds said, including the open-source AI agent platform elizaOS and the blockchain-native AI intelligence platform Zark Lab.

Autonomous agents making financial decisions on unverifiable data. Think about that for a second.

With Walrus, datasets, models, and content are verifiable by default, so builders can secure AI platforms from potential regulatory non-compliance, inaccurate responses, and erosion…

— Walrus 🦭/acc (@WalrusProtocol) February 18, 2026

Verifiable, not infallible

The phrase "verifiable AI" can sound ambitious. But Simmonds is careful about what it does—and doesn't—imply.

"Verifiable AI doesn't explain how a model reasons or guarantee the truth of its outputs," she said. But it can "anchor workflows to datasets with provable provenance, integrity, and availability." Instead of relying on vendor claims, she explained, teams can point to a cryptographic record of what data was available and authorized. When data is stored with content-derived identifiers, every modification produces a new, traceable version—allowing independent parties to confirm what inputs were used and how they were handled.

This distinction is crucial. Verifiability isn't about promising perfect results. It's about making the lifecycle of data—how it was stored, accessed, and modified—transparent and auditable. And as AI systems move into regulated or high-stakes environments, this transparency becomes increasingly important.

Why does @WalrusProtocol exist.

Because businesses that need programmable storage with verifiable data integrity and guaranteed availability had nowhere to go.

We built it and they keep showing up. Simple as that!! pic.twitter.com/Ygxe8CFenh

— rebecca simmonds 🦭/acc (@RJ_Simmonds) February 12, 2026

"Finance is a pressing use case," Simmonds said, noting that in finance "small data errors" can turn into real losses when data pipelines are opaque. "Being able to prove data provenance and integrity across those pipelines is a meaningful step toward the kind of trust these systems demand," she said, adding that it "isn't limited to finance. Any domain where decisions have consequences (healthcare, legal) benefits from infrastructure that can show what data was available and authorized."

A practical starting point

For teams interested in experimenting with verifiable infrastructure, Simmonds suggests starting with the data layer as a "first step" rather than attempting a wholesale overhaul.

"Many AI deployments rely on centralized storage that's really difficult for external stakeholders to independently audit," she said. "By moving critical datasets onto content-addressed storage like Walrus, organizations can establish verifiable data provenance and availability, which is the foundation everything else builds on."

In the coming year, one focus for Walrus is growing the number of partners and builders on the platform. "Some of the most exciting stuff is what we're seeing developers build, from decentralized AI agent memory systems to new tools for prototyping and publishing on verifiable infrastructure," she said. "In many ways, the community is leading the charge, organically."

"We see Walrus as the data layer in a much larger AI stack," Simmonds added. "We're not trying to be the whole answer—we're building the verifiable foundation that the rest of the stack depends on. When that layer is right, new kinds of AI workflows become possible."

Brought to you by Walrus
