Microsoft today claimed that it has released “the most capable and cost-effective small language models (SLMs) available,” saying Phi-3, the third iteration of its Phi family of SLMs, outperforms comparably sized models and a few larger ones.

An SLM is a type of AI model designed to be extremely efficient at specific language-related tasks. Unlike Large Language Models (LLMs), which are well suited to a wide range of generic tasks, SLMs are trained on smaller datasets to make them more efficient and cost-effective for specific use cases.

Phi-3 comes in different versions, Microsoft explained, with the smallest being Phi-3 Mini, a 3.8 billion parameter model trained on 3.3 trillion tokens. Despite its comparatively small size (Llama-3’s corpus weighs in at over 15 trillion tokens of data), Phi-3 Mini can still handle 128K tokens of context. That makes it comparable to GPT-4 in token capacity and puts it ahead of Llama-3 and Mistral Large.

In other words, AI behemoths like Llama-3 on Meta.ai and Mistral Large could collapse after a long chat or prompt well before this lightweight model begins to struggle.

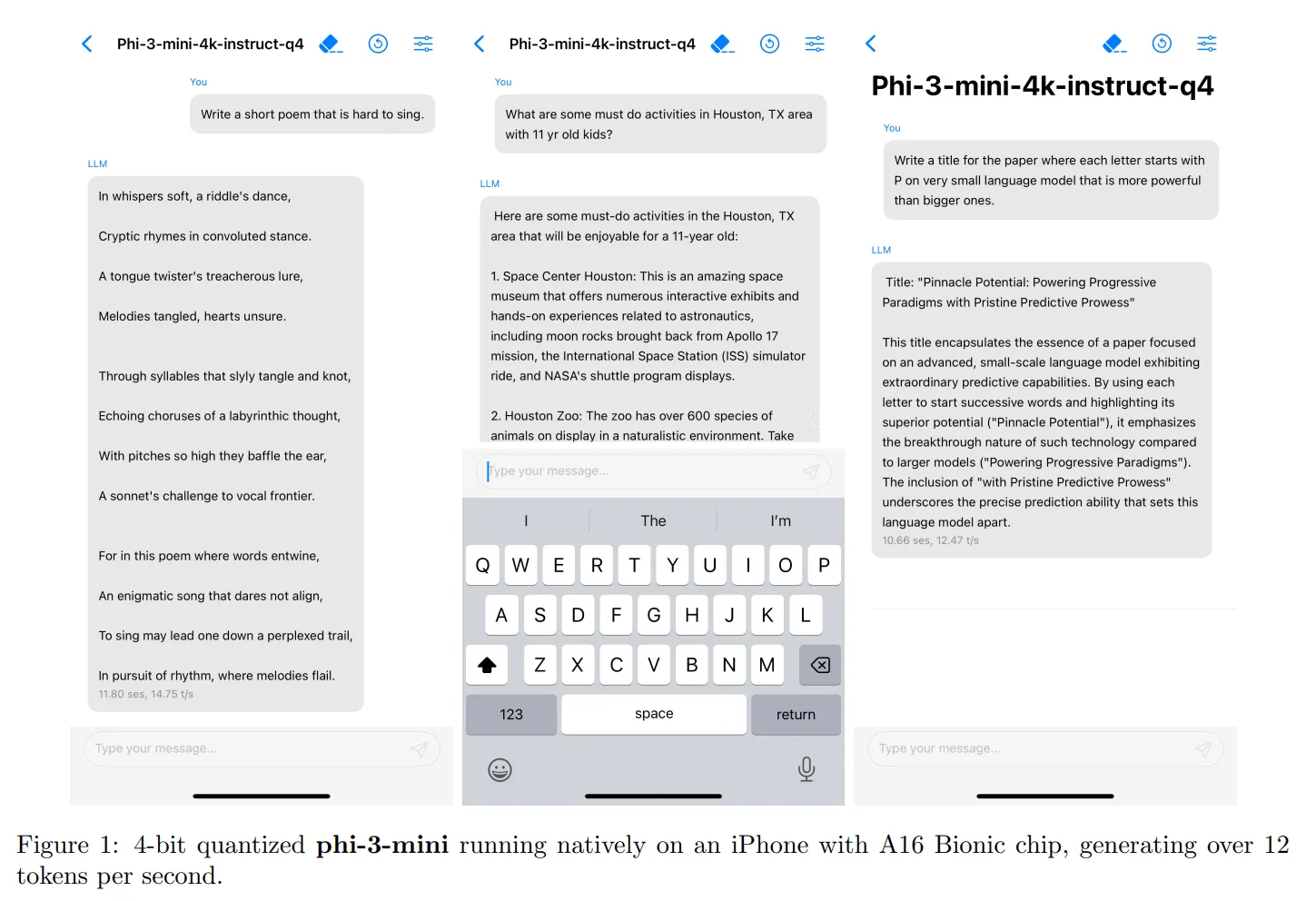

One of the most significant advantages of Phi-3 Mini is its ability to fit and run on a typical smartphone. Microsoft tested the model on an iPhone 14, and it ran with no issues, generating 14 tokens per second. Running Phi-3 Mini requires only 1.8GB of memory, making it a lightweight and efficient alternative for users with more focused requirements.
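For a rough sense of where a figure like 1.8GB comes from, the back-of-the-envelope sketch below estimates the memory needed for the weights alone at different precisions. It assumes 4-bit quantized weights for the phone case, which is an assumption not stated in this article, and it ignores activations and cache overhead. The function names are illustrative only.

```python
# Rough estimate of weight memory for a 3.8B-parameter model.
# Assumption: weights dominate memory use; activations and KV cache are ignored.

PHI3_MINI_PARAMS = 3.8e9  # parameter count cited in the article


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Same quantity in binary gibibytes (1 GiB = 2**30 bytes)."""
    return num_params * bits_per_weight / 8 / 2**30


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate a reply of num_tokens at a steady decoding speed."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    for bits in (16, 8, 4):  # 4-bit is the assumed on-phone precision
        gb = weight_memory_gb(PHI3_MINI_PARAMS, bits)
        gib = weight_memory_gib(PHI3_MINI_PARAMS, bits)
        print(f"{bits:>2}-bit weights: {gb:.1f} GB ({gib:.2f} GiB)")
    # At the 14 tokens per second reported above:
    print(f"300-token reply: about {generation_seconds(300, 14):.0f} s")
```

Under these assumptions, 4-bit weights come to roughly 1.9 GB (about 1.77 GiB), which is in the same range as the reported figure, while 16-bit weights would need about 7.6 GB.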

While Phi-3 Mini may not be as suitable for high-end coders or people with broad requirements, it can be an effective alternative for users with specific needs. For example, startups that need a chatbot or people leveraging LLMs for data analysis can use Phi-3 Mini for tasks like data organization, extracting information, doing math reasoning, and building agents. If the model is given internet access, it can become pretty powerful, offsetting its limited capabilities with real-time information.

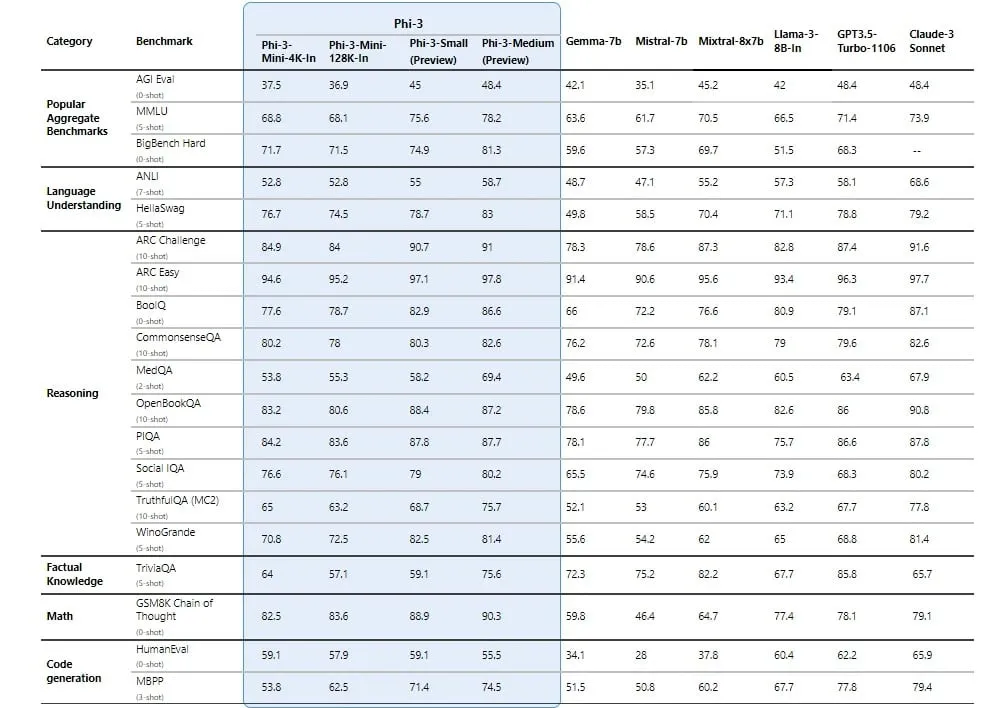

Phi-3 Mini achieves high test scores due to Microsoft's focus on curating its dataset with the most useful information possible. The broader Phi family, in fact, is not good at tasks that require factual knowledge, but its strong reasoning skills place it above major competitors. Phi-3 Medium (a 14-billion parameter model) consistently beats powerful LLMs like GPT-3.5, the LLM powering the free version of ChatGPT, and the Mini version beats powerful models like Mixtral-8x7B in the majority of the synthetic benchmarks.

It's worth noting, however, that Phi-3 is not open source like its predecessor, Phi-2. Instead, it is an open model, meaning it is accessible and available for use, but it does not have the same open source licensing as Phi-2, which allows for broader usage and commercial applications.

Microsoft said it will release more models in the Phi-3 family in the coming weeks, including Phi-3 Small (7 billion parameters) and the aforementioned Phi-3 Medium.

Microsoft has made Phi-3 Mini available on Azure AI Studio, Hugging Face, and Ollama. The model is instruction-tuned and optimized for ONNX Runtime with support for Windows DirectML, as well as cross-platform support across different GPU, CPU, and even mobile hardware.
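For readers who want to try the model locally, here is a minimal sketch of querying it through Ollama's local HTTP API using only the Python standard library. It assumes Ollama is running on its default port (11434) and that the model has been pulled under the tag `phi3`. The helper names `build_request` and `ask` are hypothetical and chosen for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build a non-streaming generate request (the model tag is an assumption)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "phi3", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running and the model downloaded (for example via `ollama pull phi3`), calling `ask("Summarize this paragraph in one sentence: ...")` should return the model's reply as a string. Nothing is sent over the network until `ask` is called.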