Americans feel apprehensive about the growing use of artificial intelligence (AI) in hiring and evaluating workers, according to a new study released by the Pew Research Center.

Pew Research surveyed 11,004 U.S. adults in mid-December 2022, asking participants for their views on AI's impact on the workforce. While some respondents acknowledged the efficiency of AI-driven recruitment, many feared that the technology might invade their privacy, affect how they are evaluated, and lead to job losses.

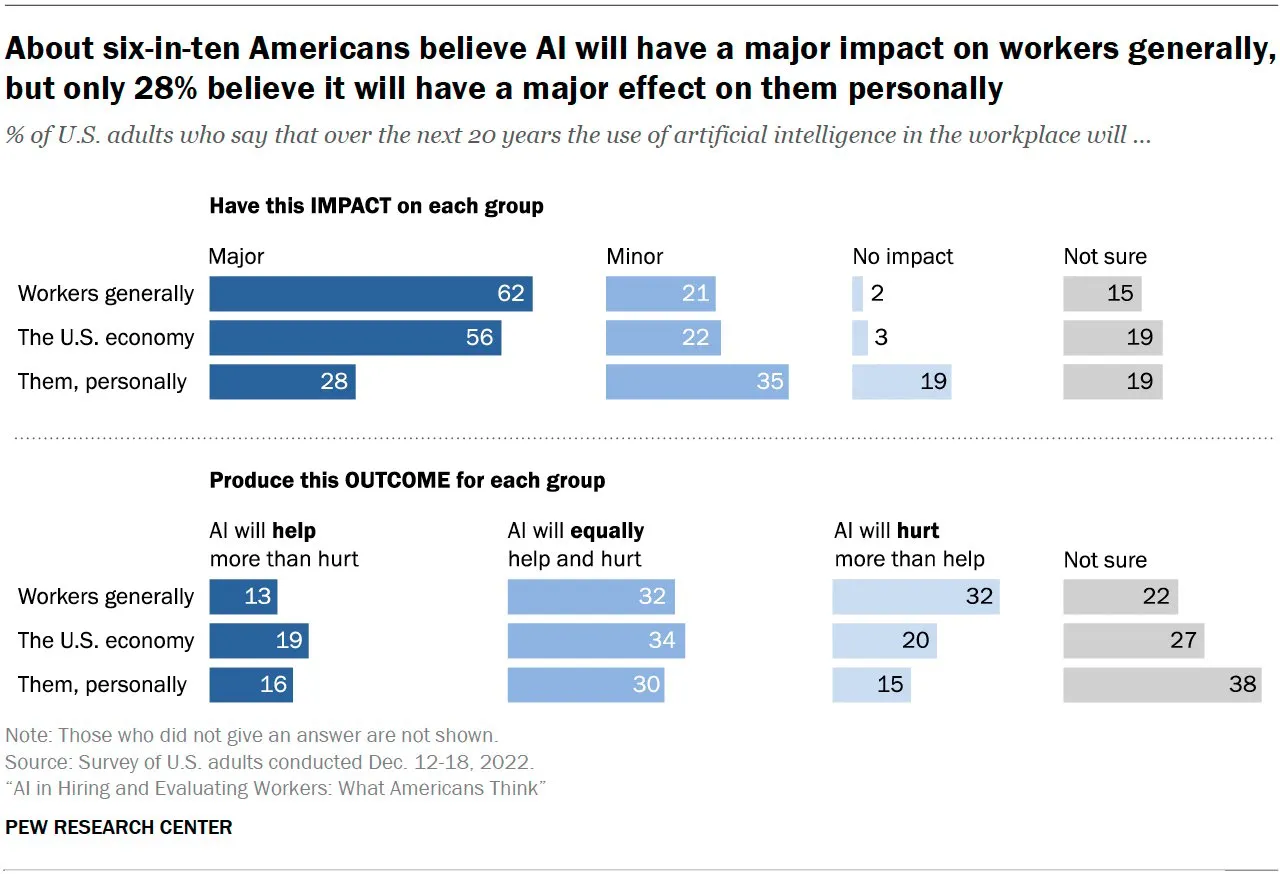

According to the study, published on Thursday, 32% of Americans believe that using AI in hiring and evaluating workers is more likely to harm than to help job applicants and employees.

Seventy-one percent of U.S. adults oppose using AI to make the final decision on whether to hire or fire someone. On the other hand, the study found that 40% of Americans still think AI can benefit job applicants and employees by speeding up hiring, reducing human error, and eliminating biases inherent in human decision-making. Some respondents also highlighted the potential of AI-driven performance evaluations to provide a more objective and consistent assessment of workers' skills and productivity.

The research also reveals that 32% of Americans believe AI will do more harm than good to workers over the next 20 years, while just 13% expect it to do more good than harm. Almost two-thirds of respondents said they would not apply for a job if they knew they would be evaluated by AI.

These concerns extend to various aspects of the hiring process, from resume screening and applicant evaluation to performance monitoring and personnel decisions. The report highlights that a majority of participants worry AI systems will infringe on their privacy by collecting too much personal information, such as browsing history or social media activity. Ninety percent of upper-class workers, 84% of middle-class workers, and 70% of lower-class workers are concerned about being "inappropriately surveilled if AI were used to collect and analyze information," the study says.

Addressing Concerns: Policy, Transparency, and Education

As AI continues to make inroads into the workforce, tech industry leaders have been urging policymakers, businesses, and developers to address the public's concerns. In the European Union, for example, regulators have tried to prevent misuse by calling for transparency in AI systems, as well as education and training to help workers adapt to a rapidly changing job landscape. Some of the best-known figures in the AI industry have called for a pause in the training of more advanced models so that these issues can be tackled before it's too late.

Meanwhile, regulators have begun to pay attention to how these AI models are trained and how they could affect citizens' rights. One of the first moves came from Italy, which banned ChatGPT in the country on the grounds that it could be illegally collecting data from its users and exposing minors to inappropriate interactions.

Other European countries have expressed similar concerns, largely because AI models are most useful when they are properly trained, and proper training requires large amounts of data.

AI's growing role in the workplace brings both benefits and concerns, including privacy, fairness, and discrimination. By taking a proactive approach to policy, transparency, and education, politicians hope to ensure that AI serves as a force for good. Making sure that AI models will be good bosses, however, is not something they seem to have in mind right now.