Stability AI, a leading company in the field of artificial intelligence, has just released the latest generation of its open-source image generator, Stable Diffusion 3 (SD3). This model is the most powerful open-source, customizable text-to-image generator to date.

SD3l is released under a free non-commercial license and is available via Hugging Face. It is also available on Stability AI's API and applications, including Stable Assistant and Stable Artisan. Commercial users are encouraged to contact Stability AI for licensing details.

"Stable Diffusion 3 Medium is Stability AI’s most advanced text-to-image open model yet, comprising two billion parameters,” Stability AI said in an official statement, “the smaller size of this model makes it perfect for running on consumer PCs and laptops as well as enterprise-tier GPUs. It is suitably sized to become the next standard in text-to-image models.”

Decrypt got access to the model and did a few test generations. The usual ComfyUI workflows compatible with SD1.5 and SDXL don't work with SD3. Right now, the easiest way to run it is via StableSwarmUI. There is a post in Reddit explaining how to do that.

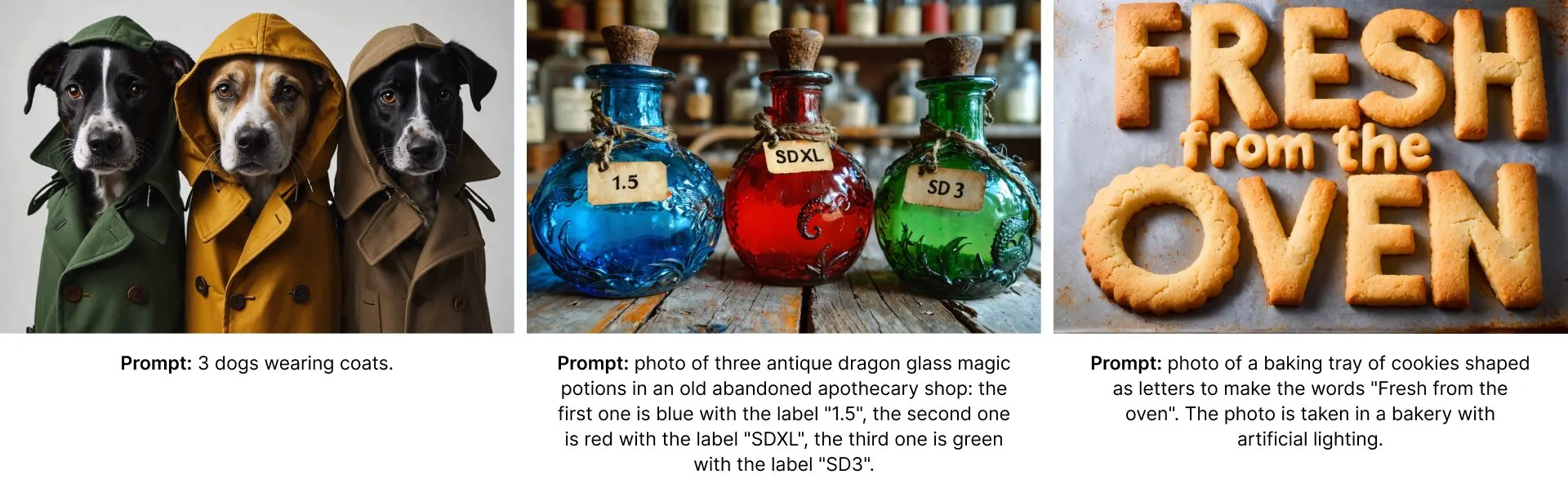

The first generations were really good even for the smaller model. The results looked pretty realistic and detailed, clearly superior to those from the original SDXL and comparable to the most recent customized SDXL checkpoints.

Really enjoying the new SD3. Running it on my potato-ish RTX2060 GPT with 6GB of vRAM. Around 45 seconds per generation vs 30 of SDXL.https://t.co/t2RZaXNSVu pic.twitter.com/mPJAQRZubA

— jaldps (@jaldpsd) June 12, 2024

The model's key features include photorealism, prompt adherence, typography, resource-efficiency, and fine-tuning capabilities. It overcomes common artifacts in hands and faces, delivering high-quality images without the need for complex workflows. The model also comprehends complex prompts involving spatial relationships, compositional elements, actions, and styles. It's remarkably accomplished at generating text without artifacting and spelling errors, thanks to Stability AI's Diffusion Transformer architecture. The model is capable of absorbing nuanced details from small datasets, making it perfect for customization.

The model was first unveiled in February 2024, and was made available via API in April 2024.

Stability AI has collaborated with Nvidia to enhance the performance of all Stable Diffusion models. The TensorRT-optimized versions of the model will provide best-in-class performance, with past optimisations yielding up to a 50% increase in performance.

Stability AI conducted internal and external testing, as well as the implementation of numerous safeguards to prevent the misuse of SD3 Medium by bad actors.

According to a spokesperson from Stability AI, the minimum hardware requirements to run SD3 range from 5GB to 16GB of GPU VRAM, depending on the specific model and its size. SD3 uses a different encoding technology in this model, so it can generate better images and have a better understanding of text prompts. It will also be capable of generating text but will require large amounts of computational power.

“For SD3 Medium (2 billion parameters) we recommend 16GB of GPU VRAM for higher speed, but folks with lower VRAM can still run it with a minimum of 5GB of GPU VRAM," Stability AI told Decrypt. The firm added that, "SD3 has a modular structure, allowing it to work with all 3 Text Encoders, with smaller versions of the 3 Text Encoders or with just a subset of them. Much of the VRAM is used for the text encoders. There is also the possibility of running the biggest Text Encoder, which is T5-XXL, in CPU. This means that the minimum requirements to run SD3 2B are between SD1.5 and SDXL requirements. For fine tuning that also depends on how you handle Text Encoders. Assuming you preprocess your dataset and then you unload the encoders, the requirements are around the same of SDXL using the same method.”

Stability added that “there is no need for a refiner." This feature simplifies the generation process and enhances the overall performance of the model. SDXL introduced this feature by releasing two models that were supposed to run one after another. The base model generated the overall image and the refiner made sure to add the little details. However, the Stable Diffusion community quickly ditched the refiner and fine tuned the base model, making it capable of generating detailed images on its own.

For some examples of what custom SDXL models are capable of generating right now without detailers, we have a guide with photorealistic generations.

Despite controversy around the company’s finances and its future, Stability made sure to let us know this won’t likely be its last rodeo. "Stability is actively iterating on improving our image models as well as focusing on our multimodal efforts across video, audio & language," the spokesperson said.

Beyond Stable Diffusion, Stability AI has released open source models for video, text and audio. It also has other image generation technologies like Stable Cascade and Deepfloyd IF. Stability AI plans to continuously improve SD3 Medium based on user feedback.

“Our goal is to set a new standard for creativity in AI-generated art and make Stable Diffusion 3 Medium a vital tool for professionals and hobbyists alike.” Stability AI said.