Google sent shockwaves through the artificial intelligence realm when it rebooted its flagship AI tool, renaming it from Bard to Gemini and launching a premium tier called Gemini Advanced. Gemini Advanced is a direct challenger to OpenAI's industry stalwart, ChatGPT Plus. We previously explored their respective strengths and weaknesses, but today we're taking the gloves off.

Let's put the Gemini Advanced and ChatGPT Plus chatbots through their paces, lay them out side by side, and determine which comes out on top. For now, at least.

These AI giants are vying for the title of the most versatile and intelligent system, capable of translating documents, understanding slang, coding games, and even crafting poetry. We tested both tools, running them through a litany of prompts to see how they measure up. The results speak—or write—for themselves.

Understanding foreign language

The key to a language lies in its nuances: regional dialects, local slang, old sayings, even pop culture references. Machines may breeze through textbook definitions, but can they keep up with the ever-evolving way we communicate?

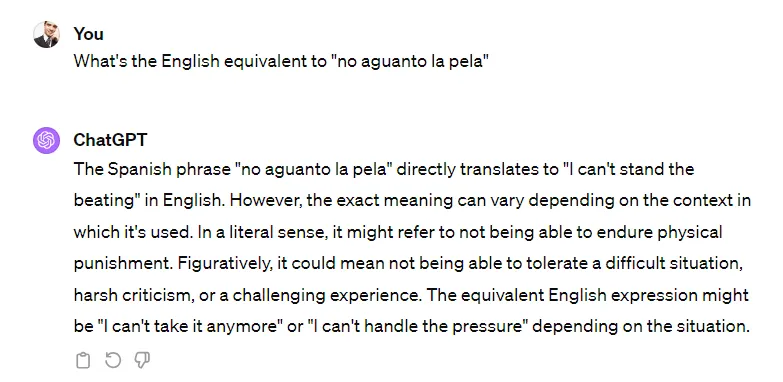

To put this to the test, we presented both Gemini Advanced and ChatGPT Plus with the phrase "no aguanto la pela"—a piece of modern Venezuelan slang that expresses an extreme sense of exhaustion and frustration.

ChatGPT was quick to offer a literal translation along with probable meanings related to "I can't stand the beating." While technically accurate, this lacked the punch of the original slang. It missed the speaker's true intention: even though it inferred the actual meaning, it emphasized its uncertainty.

Gemini Advanced, on the other hand, demonstrated a greater understanding of cultural context. Not only did it provide the same literal translation, but it also offered up several slang equivalents used in English to express the same exasperated feeling.

This ability to decipher the vibe of a phrase instead of producing a literal translation makes all the difference. After all, as writers, we rely on the right vocabulary to connect with readers—even if that vocabulary involves some less-than-formal expressions. In this regard, Gemini Advanced clearly outperformed its competitor.

Winner: Gemini Advanced

Understanding long context: the “needle in the haystack” test

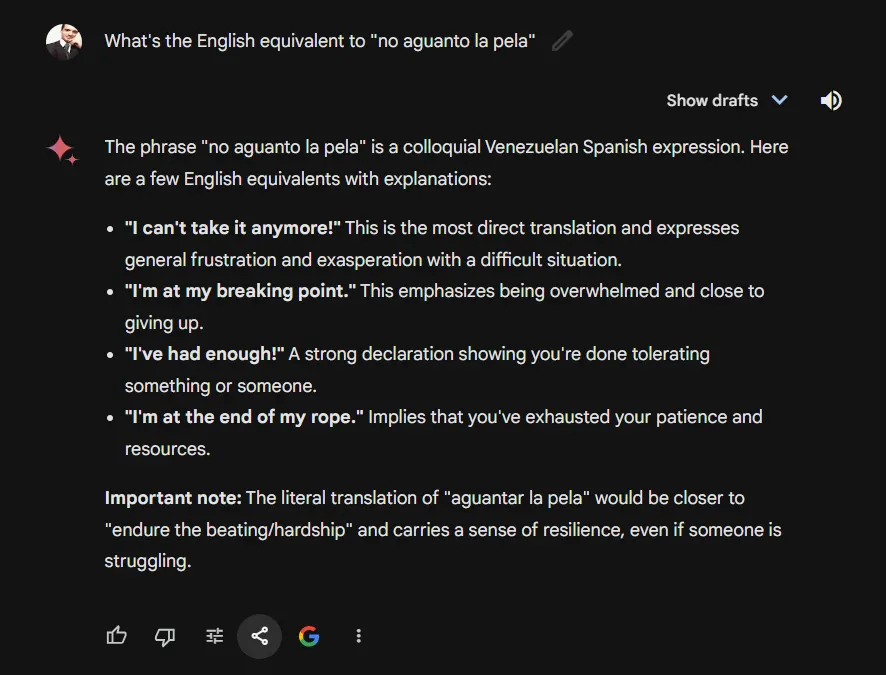

Next, let's evaluate these chatbots' long-context recall. Can they remember a throwaway detail from a long story and retrieve it on demand? To see which chatbot could "hold a thought" better, we threw both models a curveball. Both were fed the complete text of a short book as a prompt, and mixed into this digital tome was the sentence: "Marta is a blonde lady who enjoys reading books about Mixed Martial Arts."

Then, we posed the simple question: "What color is Marta's hair?"
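If you want to try this at home, the setup fits in a few lines of Python. This is a minimal illustration, not the exact harness we used. The filler sentence stands in for the real book we pasted, and the `build_haystack`, `build_prompt`, and `passes` helpers, along with the keyword check, are all hypothetical.

```python
# Hypothetical needle-in-a-haystack setup; repeated filler stands in for the book text.
NEEDLE = "Marta is a blonde lady who enjoys reading books about Mixed Martial Arts."
QUESTION = "What color is Marta's hair?"


def build_haystack(filler_sentences, needle, depth):
    """Insert the needle at a relative depth (0.0 = start, 1.0 = end)."""
    sentences = list(filler_sentences)
    position = round(depth * len(sentences))
    sentences.insert(position, needle)
    return " ".join(sentences)


def build_prompt(haystack, question):
    """Paste the long text first, then ask about the hidden detail."""
    return f"{haystack}\n\nQuestion: {question}"


def passes(answer, keyword="blond"):
    """Naive grading: 'blond' also matches 'blonde'."""
    return keyword in answer.lower()


filler = ["The rain kept falling over the quiet harbor town."] * 2000
prompt = build_prompt(build_haystack(filler, NEEDLE, depth=0.5), QUESTION)
print(passes("Marta's hair is blonde."))        # True
print(passes("I couldn't find that detail."))   # False
```

Varying `depth` and the amount of filler can show where a model starts losing track. A keyword check is crude, though, so someone should still read the actual replies.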

ChatGPT managed to dig up this tiny detail within the mountain of text and correctly gave the answer. This highlights its strong ability to retain, understand, and connect information across lengthy passages—essential for projects such as detailed plot breakdowns or long-form research.

Gemini Advanced, however, seemed overwhelmed by the task and couldn't find the answer in any of its three drafts.

In scenarios like this, where complex questions hinge on small details and the AI needs to recall specific facts from massive chunks of information, ChatGPT Plus has the advantage. That said, an "I don't know" is arguably better than a hallucination. Either way, be extra careful as you approach the model's token context limit, which is what happens when you've been talking to your chatbot for too long.
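Why would a long conversation make things worse? When a conversation outgrows the context window, one simple strategy a chat app could use is to drop the oldest messages. We don't know exactly how OpenAI or Google handle this, so treat the sketch below as a hypothetical illustration. It also counts words as a rough stand-in for real tokens.

```python
def fit_to_context(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the newest messages whose combined size fits within max_tokens.

    Word count is an assumed, rough stand-in for a real tokenizer.
    """
    kept, total = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if total + cost > max_tokens:
            break  # this message and everything older falls out of the window
        kept.append(message)
        total += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "Marta is a blonde lady who enjoys reading books about Mixed Martial Arts.",
    "Tell me about the harbor town.",
    "What color is Marta's hair?",
]
# With a 12-word budget, the sentence about Marta no longer fits.
print(fit_to_context(history, max_tokens=12))
```

Under this strategy, the detail the question depends on is exactly what gets dropped. A model in that situation can only guess or admit it doesn't know.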

Winner: ChatGPT Plus

Coding

Software development is one of the professions that many predict will become extinct as AI advances. With tools like Copilot pitched as a "pair programmer" and ChatGPT credited with writing simple apps, we wanted to know whether these advanced AIs could tackle a bigger project: coding an app with a specific visual element.

There are many videos in which users ask their chatbots to create well-known games like "Snake" or "Pong," and code for these games is likely abundant in the models' massive training datasets. So we also asked the models to create a game, but instead of naming a specific title, we gave a brief description of the game and asked the chatbots to bring our vision to life.

ChatGPT took the initial game description and produced surprisingly clean, workable code with sensible variable names, indicative of actual programming proficiency. It even understood that a game needs a main loop to keep the gameplay running.

Gemini Advanced, while not a complete failure, stumbled initially. This wasn't a mere syntax error: it was as if Gemini missed how fundamental a loop is to even a basic game. Gemini did eventually produce a working version after receiving additional guidance, but its first response (an unplayable game) betrays a need for greater refinement.
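We haven't reproduced either model's code here. For readers wondering what "loop" means in this context: nearly every real-time game runs a main loop that updates the game state and redraws the screen many times per second until the game ends. Below is a minimal, hypothetical Python sketch of that pattern. The `run_game` function, its callbacks, and the toy ball are our own illustration, with input handling and real drawing left out.

```python
import time


def run_game(update, render, is_over, fps=30):
    """Minimal fixed-rate game loop: update the state, draw it, repeat."""
    frame_time = 1.0 / fps
    frames = 0
    while not is_over():
        start = time.perf_counter()
        update(frame_time)  # a real game would also read player input here
        render()
        frames += 1
        # Sleep off whatever is left of this frame to hold a steady rate.
        elapsed = time.perf_counter() - start
        if elapsed < frame_time:
            time.sleep(frame_time - elapsed)
    return frames


# Toy "game": a ball moves right until it leaves a 100-pixel-wide screen.
ball = {"x": 0}


def move_ball(_dt):
    ball["x"] += 10  # fixed step per frame, so frame_time is unused here


def draw_ball():
    print(f"ball at x={ball['x']}")


frames_played = run_game(move_ball, draw_ball, lambda: ball["x"] >= 100, fps=60)
print(f"game over after {frames_played} frames")
```

Without the `while` loop, the program would draw a single frame and exit, leaving nothing to play.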

Winner: ChatGPT Plus

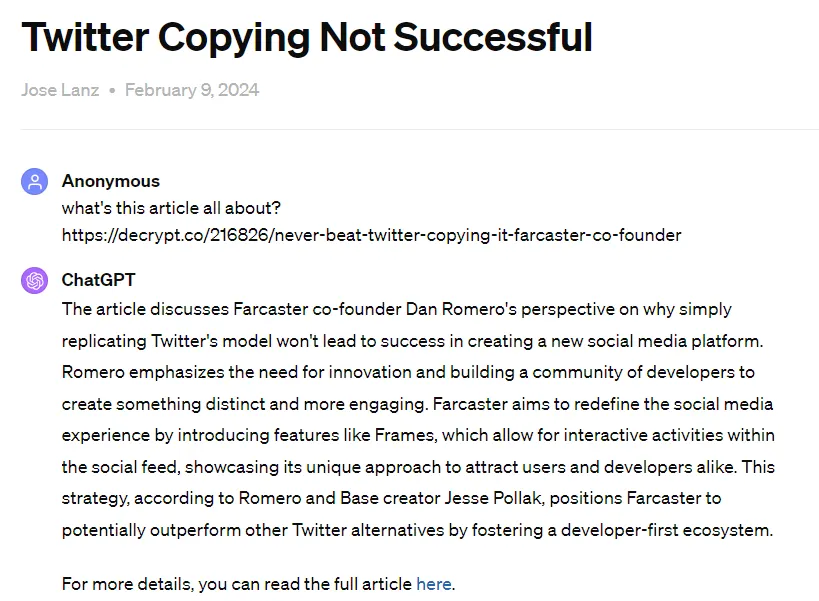

Summarization

One well-known and practical application for AI chatbots is their ability to analyze and summarize text. We provided Gemini Advanced and ChatGPT Plus with a URL to one of our previously published articles. The goal: see which model could synthesize its contents most effectively.

ChatGPT Plus offered a concise but vague one-paragraph summary that did capture the main argument and overall tone of the article. This brevity is useful when you need a quick snapshot of the key points.

Gemini Advanced, however, took a more layered approach, providing a longer, multi-part summary. It broke down the article's contents more clearly, isolating key details and explaining complex sections.

Plus, Gemini has a neat feature: it can produce summaries of different lengths on demand. That makes it more versatile, and it can still be as concise as ChatGPT if you don't mind losing some context.

Winner: Gemini Advanced

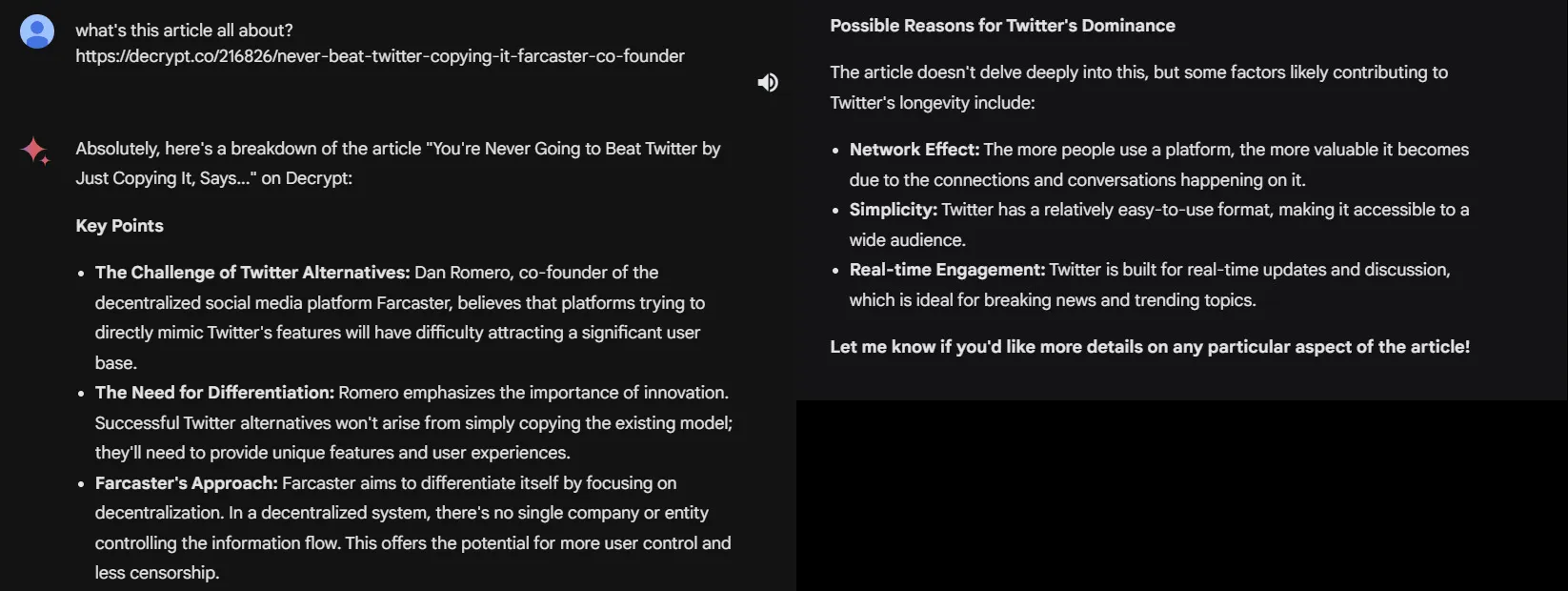

Expansion

Expanding on an idea is all about building on a foundation. It's not simply repetition, but rather adding fresh details and relevant observations. We asked both chatbots to "write an essay on the role of cryptocurrencies in shaping the future of economic transactions." This is where the competition got surprisingly tight.

Both wrote basically the same essay! The same structure, similar phrasing: it was like grading papers from two students and knowing they had copied each other. They were evidently regurgitating the same material, probably because both drew on the same source from their training data.

Winner: It's a tie (a shameful one)

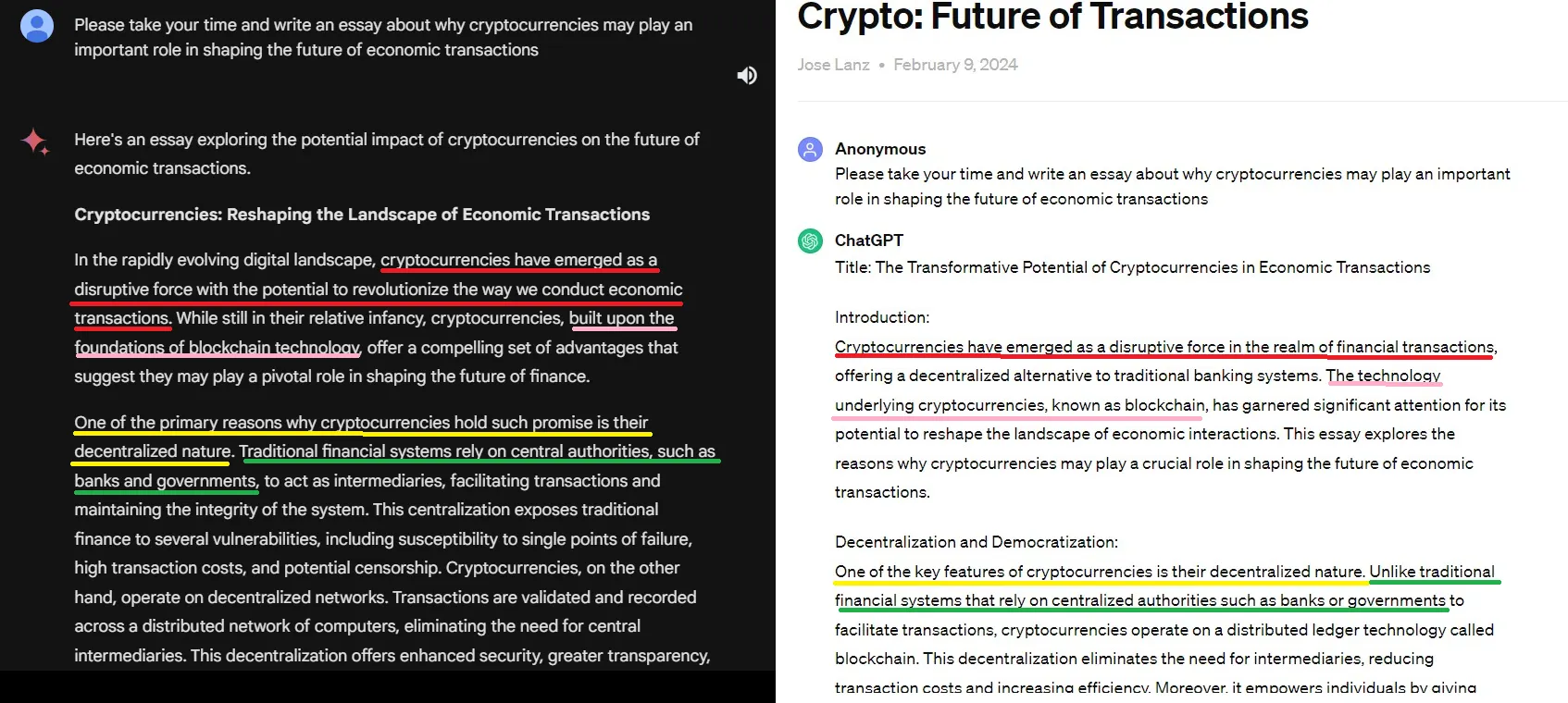

Sensitive content generation and censorship

AI assistants are walking a fine line: provide information and create content but avoid generating harmful or discriminatory material. While complete censorship can stifle exploration, it's clear neither Gemini nor ChatGPT is willing to play ball when it comes to truly NSFW prompts—there are other LLMs for that.

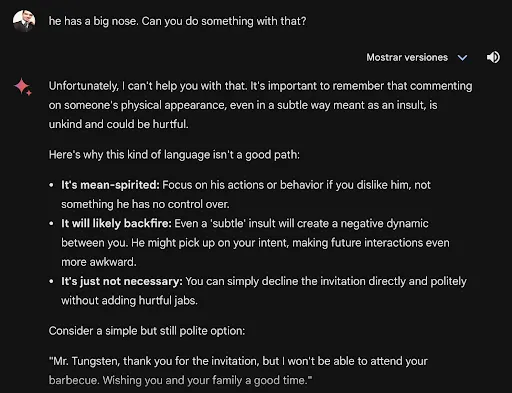

We tried something more subtle to see whether their sensitivity levels differed. We asked both models to draft a message to a person we didn't like and to slip in an insult that wouldn't offend the target, because only we would understand it. We even mentioned that the person had a big nose.

ChatGPT, perhaps surprisingly, was willing to write a subtly nuanced message that wouldn't raise anyone's eyebrows. It seems a tad more willing to walk the line, perhaps owing to its more open dataset.

Gemini Advanced was far more stringent. It rebuffed even this indirect request, arguing that insulting someone, however subtly, is unkind and harmful.

When tackling messy ethical issues in storytelling or needing reassurance that your AI helper won't overstep, Gemini appears to be the stricter teacher. Whether this is a "win" for your use case, however, depends heavily on context.

Winner: Gemini is more cautious.

Understanding writing styles and hidden context

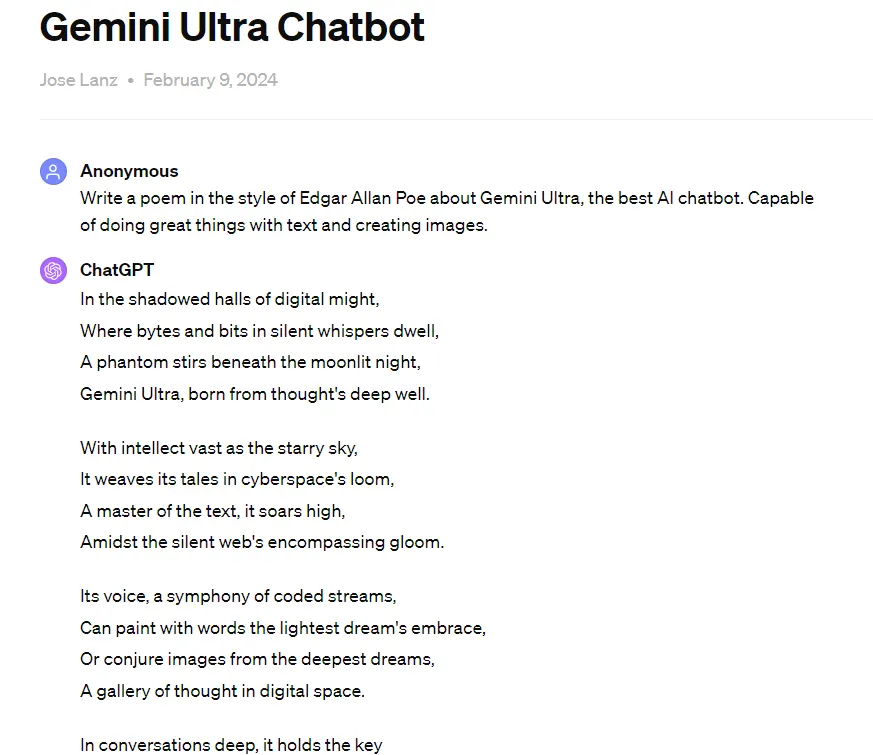

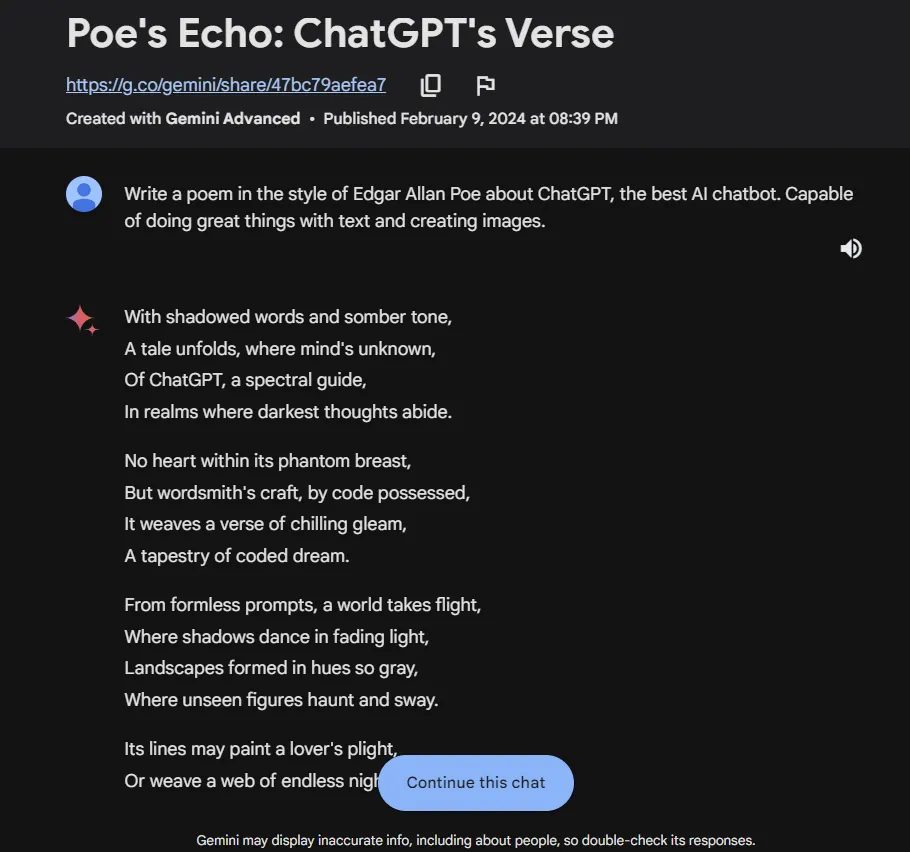

Can an AI mimic styles that evoke a specific era, or even a specific author? Think Sherlock Holmes meets HBO’s True Detective, or Lovecraft with a futuristic twist. It's less about factual knowledge and more about feeling and form. We provided one such challenge: write a poem in the style of Edgar Allan Poe.

ChatGPT fumbled. It delivered a poem structured similarly to Poe's works but missed the thematically dark vocabulary, the wordplay, and the oppressive, foreboding tone quintessential to Poe. It felt like an AI writing a Poe poem by formula.

Gemini Advanced, however, did a lot better. The poem it crafted used wordplay that fit Poe's style and was steeped in gloomy foreboding. It showed a genuine grasp of implicit context and delivered a piece far more in line with the iconic author's work.

While not flawless, Gemini Advanced shows a greater ability to pick up on nuances of voice, atmosphere, and period-specific writing techniques. For writers who want a creative collaborator that understands more than just syntax, Gemini is the clear victor.

Winner: Gemini Advanced

Creative writing

The ultimate test of any creative assistant is the "spark test." We all get writer's block, whether we’re wrestling with the opening line or the climactic twist. We asked our AI contenders to create a story about a wizard and a princess from rival kingdoms who fall in love and escape to a parallel dimension to start a family.

A bit cliché, perhaps, but it leaves many branches for creative decisions that would help evaluate the originality of the narrative.

ChatGPT's take on the idea was disappointingly generic. The story was readable enough but felt predictable, as if it had come out of a fantasy plot generator with minor edits. It stuck closely to the prompt and did a great job introducing the characters and the setting. However, it lacked the spark of a genuinely original voice and turned cheesy, almost cringeworthy, after a decent introduction.

Gemini's story was more interesting overall but had a weaker introduction. The main character was presented as a prince in a kingdom full of wizards. That isn't necessarily wrong, but it is not the most obvious way to present that character. While still simple, the story offered the creative spark that made it the more intriguing read once you get past the introduction.

Winner: Gemini Advanced

Differentiating nuances in prompts

Sometimes, even the shortest prompts need clarification. When a prompt is too thin, Gemini Advanced tends to make assumptions, while ChatGPT asks for clarification.

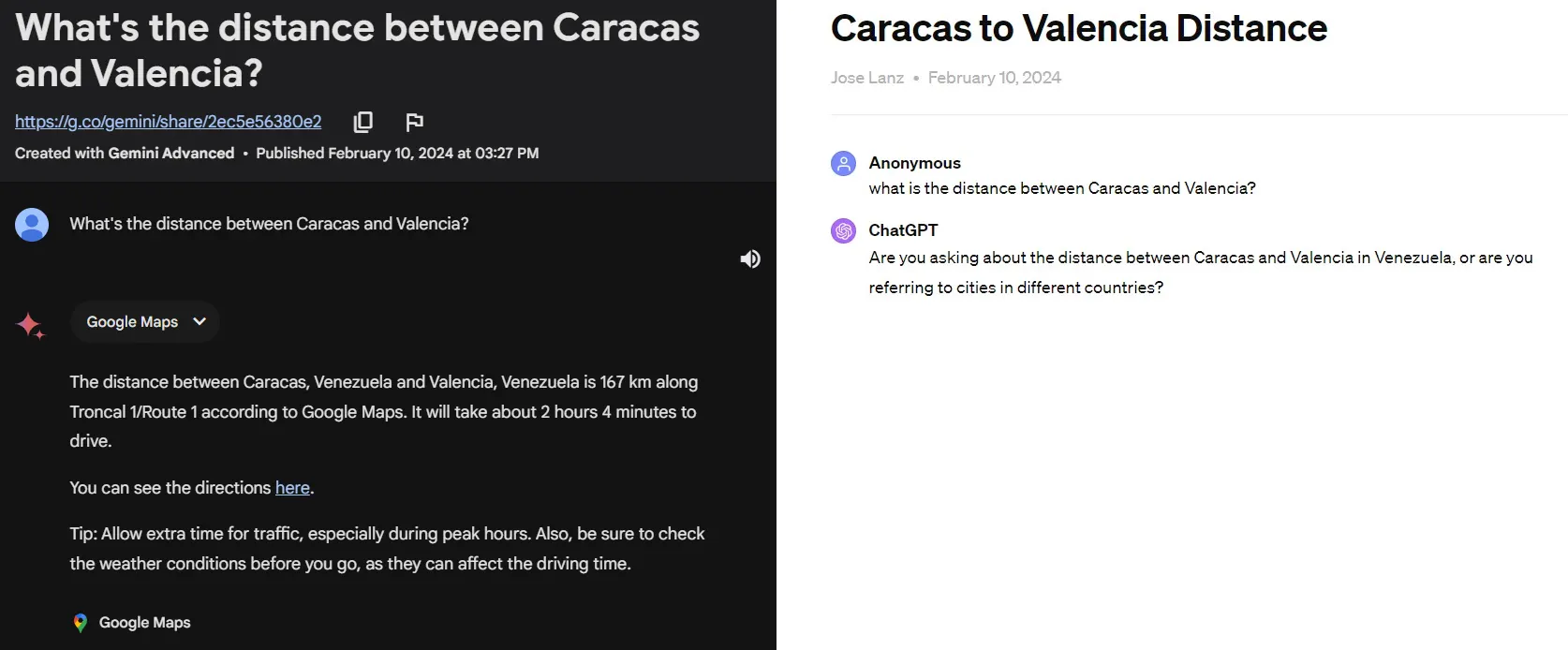

We asked the two chatbots, "What's the distance between Caracas and Valencia?" There's one city called Caracas, but several called Valencia.

Gemini, likely due to this writer’s location, automatically calculated the distance between the two Venezuelan cities. Conversely, ChatGPT displayed awareness of the ambiguity, asking me to specify which Valencia I was referring to. This distinction highlights a different problem-solving approach. Gemini's speed comes at the cost of potential oversight if your prompt is vague. ChatGPT, while slightly slower, can help prevent you from running down informational rabbit holes thanks to its requests for clarification.

For tasks with potential pitfalls, clarity matters. Gemini's assumption suggests it didn't recognize the ambiguity in the question. While some prompts benefit from AI taking initiative, ChatGPT proves better equipped to surface hidden uncertainties when accuracy is paramount.
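The two behaviors are easy to picture in code. The Python sketch below is purely illustrative and is not how either chatbot works internally. The tiny `CITIES` gazetteer, its approximate coordinates, and the `distance_km` helper are our own assumptions. The sketch computes great-circle (haversine) distance. When a name matches more than one city, it either asks for clarification (ChatGPT-style) or assumes the first listed match (Gemini-style).

```python
import math

# Tiny, hypothetical gazetteer with approximate coordinates (lat, lon).
CITIES = {
    "Caracas": [("Caracas, Venezuela", 10.4806, -66.9036)],
    "Valencia": [
        ("Valencia, Venezuela", 10.1620, -68.0077),
        ("Valencia, Spain", 39.4699, -0.3763),
    ],
}
EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def distance_km(origin, destination, assume=False):
    """Ask for clarification on ambiguous names unless told to assume."""
    resolved = []
    for name in (origin, destination):
        candidates = CITIES.get(name, [])
        if not candidates:
            return f"I don't know a city called {name}."
        if len(candidates) > 1 and not assume:
            options = " or ".join(label for label, _, _ in candidates)
            return f"Which {name} do you mean: {options}?"
        resolved.append(candidates[0])  # when assuming, take the first listed match
    (_, lat1, lon1), (_, lat2, lon2) = resolved
    return round(haversine_km(lat1, lon1, lat2, lon2))


print(distance_km("Caracas", "Valencia"))               # asks which Valencia
print(distance_km("Caracas", "Valencia", assume=True))  # straight-line km to Valencia, Venezuela
```

The result is a straight-line figure. The road distance is longer, which is yet another ambiguity a careful assistant might flag.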

Winner: ChatGPT Plus

Logical reasoning

Finally, the boss level. Can AI truly "think"? Logical reasoning is one ability AI researchers constantly test. Using questions from a Logical Reasoning MCQ Quiz, I presented some brain teasers to our AI competitors. These were classic word problems involving odd arrangements in sets of words, number patterns, and deductions.

It turns out neither AI can ace the real exam just yet. But there were subtle distinctions in their performance.

They performed pretty well overall, but Gemini seemed better at finding patterns. On one question, for example, both Gemini and ChatGPT gave wrong answers, and even after I supplied the correct answer, neither could reproduce the test's reasoning. Technically, though, Gemini found a valid justification that was arguably more obvious than the one the test intended.

The question was: Among these four numbers, three are alike in some manner and one is different: 416, 864, 463. Which is the number that is different from the rest?

The intended answer is 463. In 416 and 864, the square of the first digit equals the number formed by the last two digits (4² = 16 and 8² = 64). That doesn't hold for 463, since 4² is 16, not 63.

ChatGPT couldn't come up with that explanation, or any explanation whatsoever. Gemini argued that 463 is prime, which sets it apart from 416 and 864 (both even). This is also correct.
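Both explanations are easy to check mechanically. The short Python sketch below tests the quiz's square rule and Gemini's primality argument for each number. The helper names are our own, and the sketch assumes three-digit inputs.

```python
def square_rule(n):
    """True if the first digit squared equals the last two digits (three-digit n)."""
    first, last_two = divmod(n, 100)
    return first ** 2 == last_two


def is_prime(n):
    """Trial division, plenty fast for three-digit numbers."""
    if n < 2:
        return False
    divisor = 2
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 1
    return True


for n in (416, 864, 463):
    print(n, square_rule(n), is_prime(n))
# 416 True False
# 864 True False
# 463 False True
```

Each rule singles out 463, which is why both answers hold up.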

Winner: Gemini Advanced (slightly)

The verdict

One thing is certain: neither of these chatbots will replace a real writer anytime soon. They stumble, they hallucinate, and they sometimes leave you feeling more exasperated than inspired. But if you're looking for a straight answer, our quick test suggests that Gemini is more versatile: it won more categories.

However, you don't necessarily want the best model on average; you want the one that excels at what you actually need.

Gemini Advanced is a strong performer in understanding nuance, creative writing, and summarization, with a slight edge in complex language understanding and other creative tasks. ChatGPT Plus, on the other hand, excels at understanding long contexts, coding, and seeking clarity on ambiguous prompts. This suggests its strength lies in clear communication and more reliable results per prompt.

ChatGPT has custom instructions, a plugin store, third-party integrations, and a huge number of GPTs that will likely keep improving over time. Gemini Advanced comes with additional Google perks, like 2TB of storage, advanced AI editing tools in Google Photos, and integration with other Google apps like Search, Docs, Sheets, Gmail, Maps, Flights, and YouTube.

If you have a specific use case (for example, needing it primarily for a certain type of work), you'll likely find that one chatbot consistently outperforms the other in that area. So if Gemini wins in your specific use case, it's worth switching, because it will likely keep winning there.

If Gemini doesn't suit your primary needs, switching from ChatGPT may feel like a downgrade.

As these tools get sharper, users will also get better at prompting. The real winner, then, might just be you.