The ability to create AI-generated deepfakes of famous and influential people has caused quite a storm on the internet. But while images of the Pope wearing a puffy Balenciaga jacket and former U.S. President Donald Trump being arrested have made headlines, a new trend is emerging: deepfakes of murder victims, according to a report by Rolling Stone.

“They seem designed to trigger strong emotional reactions, because it’s the surest-fire way to get clicks and likes,” Paul Bleakley, assistant professor in criminal justice at the University of New Haven, told Rolling Stone. “It’s uncomfortable to watch, but I think that might be the point.”

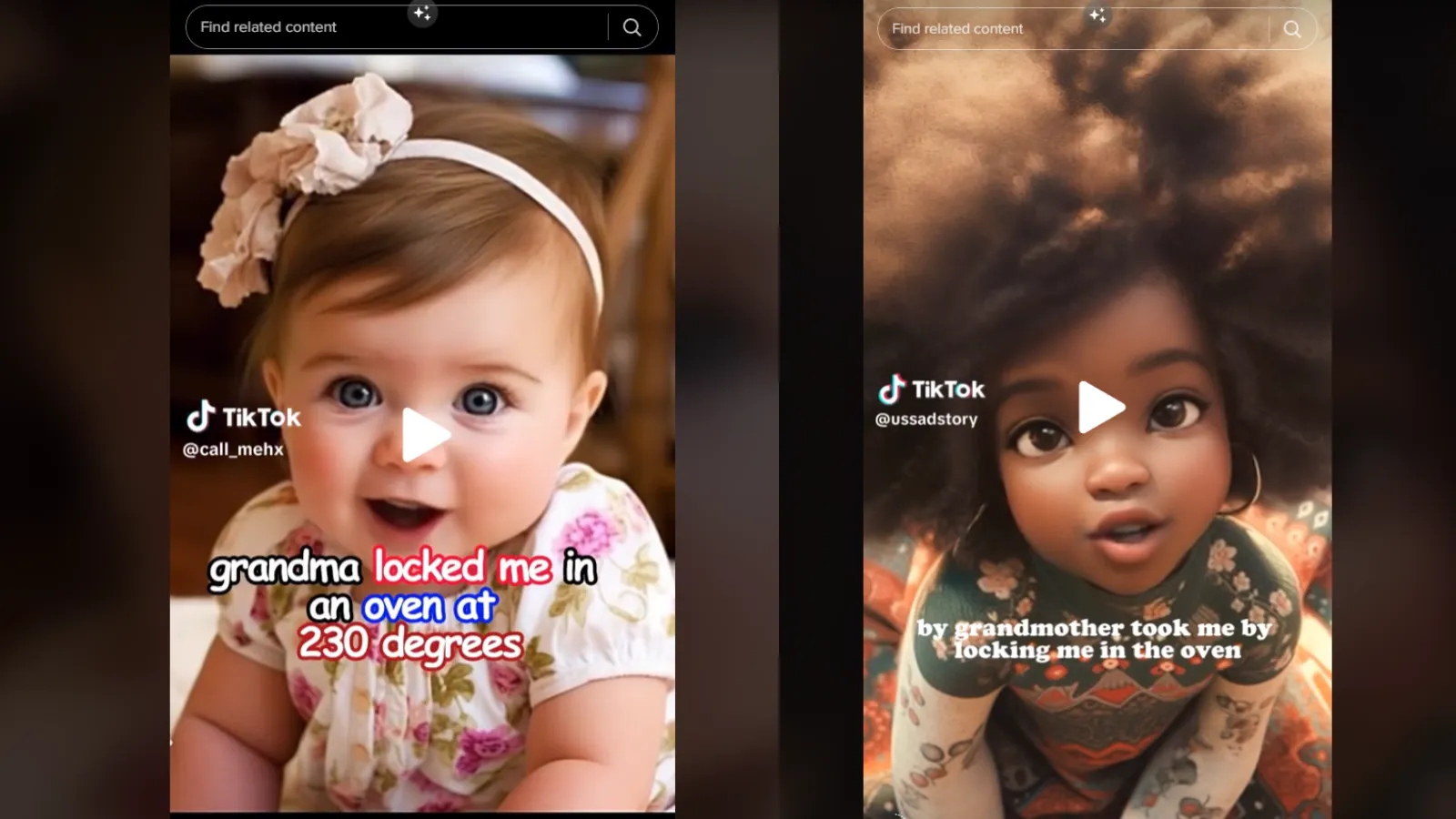

Short video clips making the rounds on TikTok use AI-generated video and audio to present first-person accounts from child victims. Among the best-known victims depicted this way is Royalty Marie Floyd, presented in some videos as Rody Mary Floyd, whose body was found in an oven in 2018. Floyd's grandmother, Carolyn Jones, was charged with her murder, Rolling Stone said, adding that the AI generator also depicted the child with differing ages and races.

Errors like these are known as hallucinations. AI “hallucinations” refer to instances in which an AI model generates unexpected, untrue output that is not backed by real-world data. AI hallucinations can create false content, news, or information about people, events, or facts.

“These AI deepfakes of true crime victims certainly bring the story to life in a way that none of us could have imagined, which could help to educate the general public,” attorney and CEO of AR Media Andrew Rossow told Decrypt. “However, from a legal perspective, this utilization and application of AI is extremely troubling and problematic.”

Rossow pointed to two main issues: privacy concerns and the emotional distress caused to those who knew the victims.

A deepfake is an increasingly common type of AI-generated video or image that depicts events that never happened. Already a concern for cybersecurity and law enforcement officials, deepfakes have become harder to detect thanks to fast-evolving generative AI platforms like Midjourney 5.1 and OpenAI's DALL-E 2.

Midjourney ended its free trial version in March following a rise in viral deepfakes generated using its technology.

As AI grows more powerful, scammers are also using the technology to try to trick would-be investors out of their money and crypto. Last week, a deepfake video of Elon Musk promoting a crypto scam went viral.

As Rolling Stone reports, the account that uploaded the Royalty Marie Floyd video has been taken down by TikTok for "violating the platform’s policy regarding synthetic media depicting private individuals." Still, the genie is already out of the bottle, and accounts posting similar videos are filling the gap.

"Unfortunately, use cases like this have to happen in order for us to advance our legal jurisprudence and precedent on already heavily debated issues involving privacy (pre- and post-mortem) and now emerging tech that in and of itself still operates primarily in the grey with our courts," Rossow said.