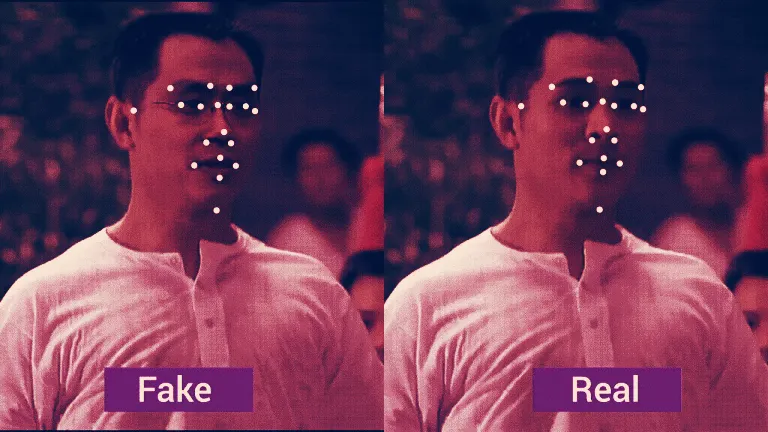

Late last month, a “deepfake” video surfaced on Twitter purporting to show the CEO of the popular crypto exchange Binance, Changpeng Zhao, playing the lead role in a Jet Li film. Zhao, who is not a martial arts expert, was taken aback: “This technology is scary… Video KYC and facial recognition will be out of the window soon,” he tweeted.

The clip, of course, was benign. The company responsible for it, blockchain firm Alethea AI, created it at a Binance-sponsored hackathon in San Francisco at the end of October to demonstrate to its audience the dangers of deepfakes: meticulously faked, AI-generated video renderings of public figures, whose potential for proliferating fake news has become a flashpoint in the ongoing culture war.

Concerns over the possible repercussions the technology could have for, say, international diplomacy (imagine, for instance, a deepfake video depicting Trump declaring war on Russia) have already spurred the likes of Facebook, DARPA, Microsoft and the University of Oxford to spend millions of dollars on finding a solution. A host of blockchain companies, like Alethea AI, are following suit, making use of blockchain’s decentralized, immutable databases to help people spot faked videos.

Alethea works on solving deepfakes

Alethea, the originator of the Changpeng Zhao deepfake, is building artificial intelligence tools to spot deepfakes in the wild. The deepfakes it finds will eventually be filed on an immutable blockchain register.

The purpose of this register is to build a deep pool of video data that the AI can draw upon to aid its “detection” of deepfakes, said one Alethea staffer, who asked to remain anonymous because Alethea AI hasn’t officially launched yet. “In order to be efficient, a detection algorithm requires good data sets,” said the person. That means the company also creates its own deepfakes—like that clip of Changpeng Zhao. The database’s immutability ensures that it cannot be tampered with.

Alethea is concentrating on building up a data set of video content relating to mouth manipulation, which it said was harder for machines to spot. “Normally, if you shoot a gun, your face would crisp or it would have some type of expression,” said the Alethea staffer. “A deepfake doesn't really capture those expressions.”

Alethea is also working on educating users about the tech. Its gaming app, currently in closed beta, is designed to teach people about the underlying technology used to create deepfakes, and how to spot them online. Alethea concedes that the naked eye might not catch the most complicated deepfakes, those produced by sophisticated actors (like nation states). Yet the staff member says there’s worth in keeping the general public vigilant about deepfakes, which are becoming easier and cheaper to produce, as well as more prevalent. “Maybe in 10 or 15 years, it will be completely irrelevant. But for now, I think it's super important that we take action,” says the team member.

Amber Video creates digital fingerprints

Whereas Alethea is focused on detection and education, Amber Video, an Ethereum-based platform overseen by DARPA’s former head of media forensics, Dr. David Doermann, is preoccupied with “fingerprinting,” the method of cryptographically hashing a video at source before logging the hash on the blockchain. (Hashing condenses data into a fixed-length alphanumeric code. Any change to the data produces a different code, and it is computationally infeasible to craft different data that yields the same one.)

“You can have confidence in the veracity of the video and [know] that nothing has been altered,” Amber Video CEO Shamir Allibhai told Decrypt. “If the fingerprints don't match, you know that something has been altered.”
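The matching that Allibhai describes can be sketched in a few lines of Python. This is only an illustration, not Amber Video's implementation: the article does not say which hash function the company uses, so SHA-256 is an assumption, and the function names and the placeholder bytes standing in for video data are hypothetical.

```python
import hashlib


def fingerprint(video_bytes: bytes) -> str:
    """Return a SHA-256 hex digest standing in for a video fingerprint."""
    return hashlib.sha256(video_bytes).hexdigest()


def matches_register(video_bytes: bytes, registered: str) -> bool:
    """Check a video against the fingerprint logged when it was recorded."""
    return fingerprint(video_bytes) == registered


# Placeholder bytes, not real video; assumed to be hashed at source.
original = b"frame-data-from-the-camera"
registered = fingerprint(original)  # the value that would be logged

# Changing even a few bytes yields a completely different digest.
tampered = original.replace(b"camera", b"editor")

print(matches_register(original, registered))  # True
print(matches_register(tampered, registered))  # False
```

The check says only that the bytes differ from what was logged. It cannot say what was changed, which is why the fingerprint has to be taken at source, before anyone has a chance to edit the footage.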

Fingerprinting at source, said Allibhai, means that you can make sure videos are originals, even if you cut and splice them up. “Think about a news piece, say a five-minute news video; there might be 20 soundbites and 15 B-roll shots. [With Amber Video’s software], each one of those elements will maintain its fingerprint all the way through to distribution,” he said.

It’s not just the media who might make use of the technology. “Say there's also a police body camera that’s been on for one hour, and there's a shooting that lasted 15 minutes. Going to prosecution, the body camera becomes evidence. But because we're hashing across time—even when you just share 15 minutes—those 15 minutes maintain a cryptographic link to that overall one-hour recording. You don't have to trust anyone,” said Allibhai.
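One way to picture “hashing across time” is to fingerprint a recording in fixed-length segments and log the ordered list of segment hashes, so that an excerpt can later be checked against its slice of that list. The sketch below assumes that approach, which the article does not confirm. The segment size, the function names and the toy one-hour “recording” (four bytes per minute) are all hypothetical.

```python
import hashlib
from typing import List


def segment_hashes(recording: bytes, segment_size: int) -> List[str]:
    """Hash each fixed-size segment of a recording, in order."""
    return [
        hashlib.sha256(recording[i:i + segment_size]).hexdigest()
        for i in range(0, len(recording), segment_size)
    ]


def excerpt_is_linked(excerpt: bytes, start_segment: int,
                      ledger: List[str], segment_size: int) -> bool:
    """Check that an excerpt matches the logged hashes at its claimed offset.

    Assumes the excerpt was cut on segment boundaries.
    """
    hashes = segment_hashes(excerpt, segment_size)
    expected = ledger[start_segment:start_segment + len(hashes)]
    return len(hashes) > 0 and hashes == expected


# Toy one-hour recording: each "minute" is 4 bytes encoding its index.
SEGMENT = 4
recording = b"".join(minute.to_bytes(4, "big") for minute in range(60))
ledger = segment_hashes(recording, SEGMENT)  # what would be logged live

# A 15-minute excerpt starting at minute 20.
excerpt = recording[20 * SEGMENT:35 * SEGMENT]
altered = excerpt[:-1] + b"\xff"  # change the last byte

print(excerpt_is_linked(excerpt, 20, ledger, SEGMENT))  # True
print(excerpt_is_linked(altered, 20, ledger, SEGMENT))  # False
```

Because the check uses the position in the list as well as the hashes, an untouched excerpt presented at the wrong offset fails too.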

But the technology faces certain limitations. Most notably, Allibhai said, the camera should be connected to the internet at all times “because those fingerprints are being submitted live as the video is being recorded.” If not, the video will upload as soon as the internet connection is restored, and there will then be a discrepancy between the recorded timestamp and the timestamp on the blockchain.

Allibhai said this isn’t a huge issue. “Deepfake technology is not [at a level that] it can create really believable fakes in seconds,” he said.
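The size of that discrepancy is what matters: the longer the gap between recording and logging, the more time someone would have had to swap in a fake. A verifier could, hypothetically, flag footage whose gap exceeds some tolerance. The sketch below assumes a 30-second tolerance, a figure chosen purely for illustration. The function names are likewise hypothetical and do not come from Amber Video.

```python
from datetime import datetime, timedelta, timezone


def anchoring_lag(recorded_at: datetime, anchored_at: datetime) -> timedelta:
    """Time between the camera's timestamp and the blockchain's timestamp."""
    return anchored_at - recorded_at


def needs_review(recorded_at: datetime, anchored_at: datetime,
                 tolerance: timedelta = timedelta(seconds=30)) -> bool:
    """Flag footage logged too long after, or impossibly before, recording."""
    lag = anchoring_lag(recorded_at, anchored_at)
    return lag < timedelta(0) or lag > tolerance


recorded = datetime(2019, 11, 1, 12, 0, 0, tzinfo=timezone.utc)
logged_live = recorded + timedelta(seconds=5)   # camera was online
logged_late = recorded + timedelta(hours=2)     # connection was down

print(needs_review(recorded, logged_live))  # False
print(needs_review(recorded, logged_late))  # True
```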

In any case, Amber Video plans to sell its technology to large companies and law enforcement, whose security cameras are likely hooked up to the internet, and who often have to resolve disputes among multiple stakeholders. But home-made footage, such as the videos of police shootings that so often spread virally online, might be trickier to upload without a stable connection.

Axon, a company that produces body cameras for law enforcement, tells Decrypt it’s “researching blockchain,” but not deploying it. Axon is convinced its tech stands on its own, without blockchain. Video is hashed according to cryptographic standards set out by the US government, and neither videos nor metadata can be edited. “Subsequent video snippets, redactions, or shared copies are all derivatives of the original. The original video is never changed,” says its press team.

That’ll stop Deepfake Trump declaring war on Deepfake Putin. Hopefully.