The day after OpenAI's much-hyped announcement of GPT-4o, its improved “omnimodal” large language model, Google fired back with a barrage of upgrades to its Gemini AI offerings, flexing its technological prowess, leaning into its live search advantages, and solidifying its standing against mindshare leader ChatGPT.

Building upon its strengths, Google is infusing generative AI into its search experience, enabling users to interact naturally with its search engine rather than relying on keyword-based queries. The keynote included a demonstration of a Google search query about removing a coffee stain. Instead of merely displaying links to webpages with instructions, the search engine immediately provided a comprehensive answer generated by AI.

These AI-generated answers, designed to address user queries directly and efficiently, will be displayed above the usual search results.

Throughout the presentation, Google made clear that its dominance in web search translates into a key advantage for its AI initiatives, showing how various features can tap into current information rather than relying on the dated training snapshot behind other large language models (LLMs).

One of the standout features announced is “Ask Photos,” which allows users to have natural conversations with Gemini to search for information in their gallery. While Google Photos has long allowed people to search their image library for specific people, objects, or words, the AI-infused update supports open-ended, natural-language queries.

For example, a Google user asked Gemini what his car’s license plate number was. Gemini scoured through all of his photos, evaluated them, and provided the correct answer.

Another upgrade will be familiar to users of the many AI meeting assistants already on the market, including those built into video conferencing platforms like Zoom. In Google Meet, Gemini can now analyze meetings, summarize them, and generate responses to questions in the chat. After a meeting, Gemini provides a list of action items and task assignments.

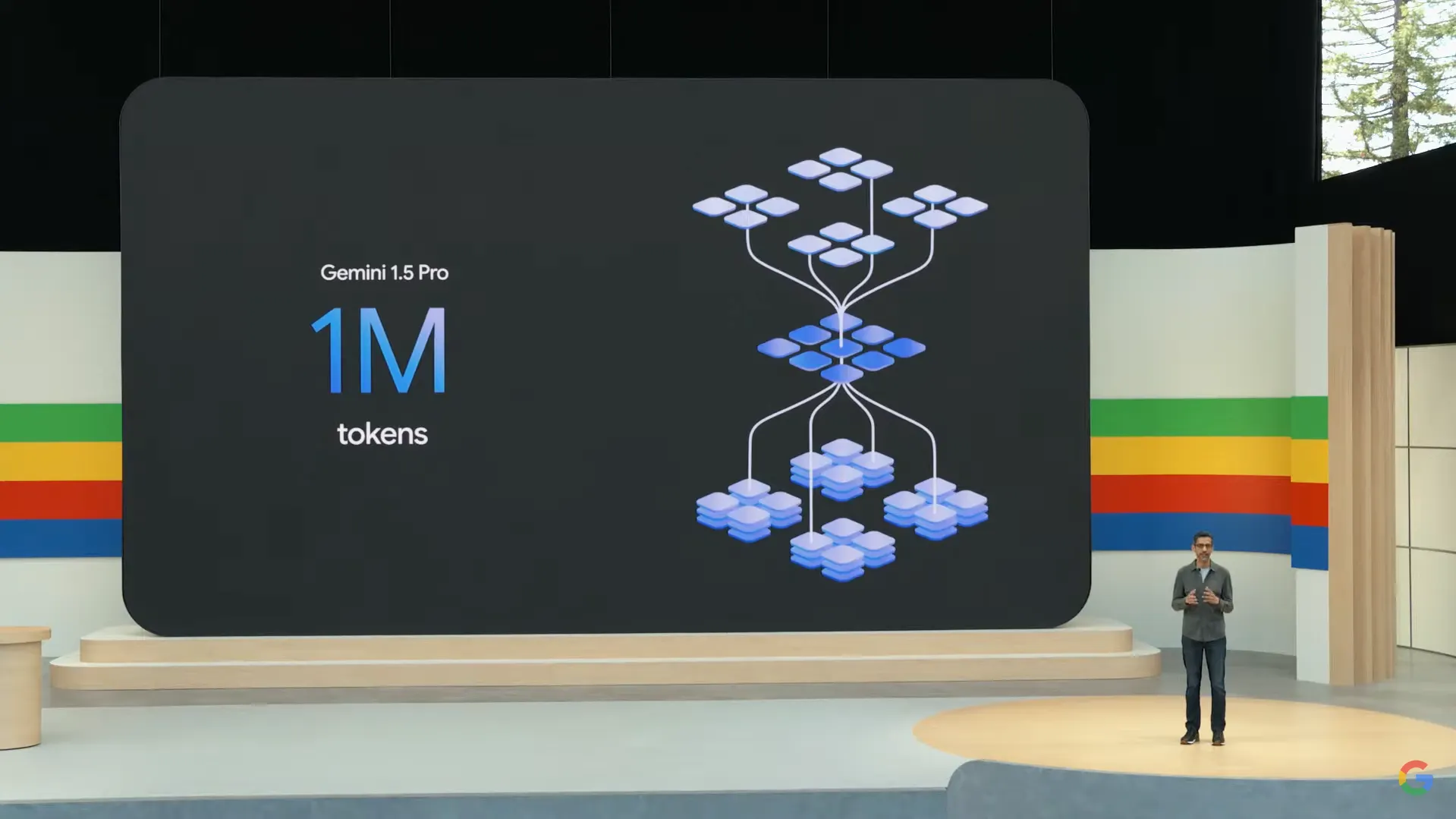

The biggest news involved upgrades under the hood. Google today announced the release of Gemini 1.5 Pro, boasting a staggering context window of 1 million multimodal tokens. That capacity dwarfs GPT-4's 128,000-token limit and is already available for both developers and consumers in Gemini Advanced—the tech giant’s paid AI services tier.

Google says it plans to expand its token handling capacity even further later this year, potentially reaching 2 million tokens for developers, more than fifteen times GPT-4o's 128,000-token context window.

Thanks to this massively increased capacity, Google also showed off Gemini's impressive retrieval capabilities. This is a key feature, because powerful LLMs like Claude and GPT-4 have so far shown performance degradation, “forgetting” information discussed earlier, when prompted with huge amounts of data.

Besides its top-of-the-line models, Google launched Gemini 1.5 Flash, a compact multimodal LLM designed to compete with Claude 3 Haiku and GPT-3.5 in delivering quick answers. Its 1 million-token context window, however, makes it the most powerful "light" model available to date.

Probably the most interesting announcement was Google’s Project Astra, a universal AI agent that can be personalized and tailored to each user's needs. Google pointed out that the Astra presentation was recorded in real time, likely in response to OpenAI’s live GPT-4o demo yesterday. The interaction seemed more capable and less clunky than GPT-4o's, albeit with more matter-of-fact, less human-like responses.

Here's a demo of the new Project Astra assistant! Pretty cool to see it on smart glasses too. Some of these agent experiences will come to the Gemini app later this year. pic.twitter.com/hGk6bbIzUD

— Mishaal Rahman (@MishaalRahman) May 14, 2024

While Gemini's voice is also broadly natural, it lacks the emotional—or even “flirty”—quality of OpenAI's new ChatGPT voice. Google’s priority appears to be functionality, versus OpenAI’s emphasis on more human-like interactions.

Going beyond traditional language models, Google introduced customizable, cross-platform AI agents that it says are capable of reasoning, planning, and remembering. These abilities allow Gemini to behave like a team of specialized AIs working together.

These customizable agents, which Google calls "Gems," appear to be a response to OpenAI’s custom GPTs. Gems integrate seamlessly with Google's ecosystem, offering features like real-time language translation, contextual search, and personalized recommendations. Users can shape Gems to focus on specific tasks or topic areas, or to use a specific tone.

Google also announced new generative AI models for images, video, and music. Imagen 3, Google's new image generator, produces highly realistic and detailed images, in contrast to the more cartoonish look of OpenAI's DALL-E 3. Google also claims it excels at rendering text within images, an area where OpenAI also says it has improved.

They also launched an upgraded version of MusicLM for generative music enthusiasts.

The icing on the cake was Veo, a generative video model, announced ahead of the release of OpenAI’s much-touted but as-yet-unreleased Sora video tool. Veo's unedited raw output suggests a quality level comparable to the forthcoming OpenAI entry. Google says it will make Veo available in a few weeks, a timeline that could beat Sora to market.

Introducing Veo: our most capable generative video model. 🎥

It can create high-quality, 1080p clips that can go beyond 60 seconds.

From photorealism to surrealism and animation, it can tackle a range of cinematic styles. 🧵 #GoogleIO pic.twitter.com/6zEuYRAHpH

— Google DeepMind (@GoogleDeepMind) May 14, 2024

Toward the end of its two-hour-plus keynote, Google also showed some love to the open-source community, unveiling PaliGemma, an open-source vision model. The company also promised to launch Gemma 2, the next iteration of its open-source large language model, in June. The new model will have an extended context window and will be more powerful and accurate.

Finally, Google announced that it would release its suite of Gemini-powered features first on its Android mobile operating system. The move comes as OpenAI shows apparent favoritism toward Apple’s macOS and iOS platforms, where it is releasing its latest updates before bringing them to Windows, made by OpenAI's top investor, Microsoft.