Artificial intelligence enthusiasts prefer working with open-source tools over proprietary commercial ones, according to an ongoing survey of more than 100,000 respondents.

The emergence of Mistral AI's Mixtral 8x7B, an open-source model, has made a significant impact in the AI space. Decrypt named the light and powerful model among the Best LLMs of 2023. Mixtral has drawn considerable attention for its strong performance in various benchmark tests, especially Chatbot Arena, which takes a human-centric approach to evaluating LLMs.

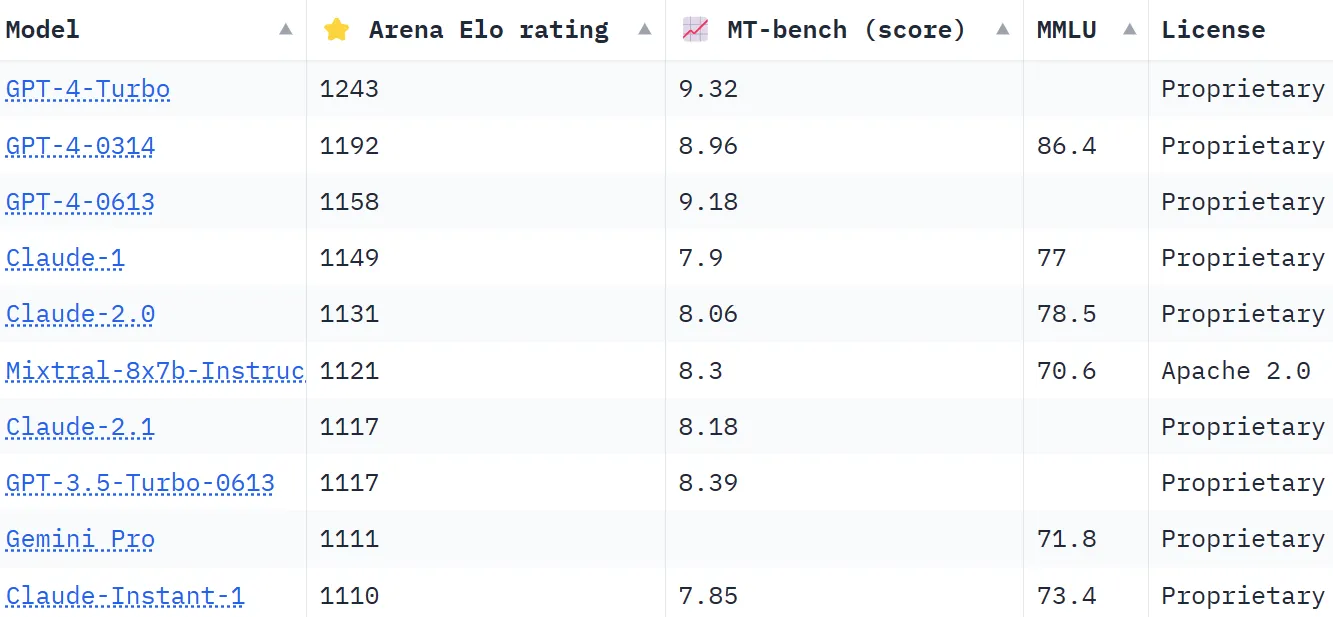

The Chatbot Arena rankings, a crowdsourced list, use more than 130,000 user votes to compute Elo ratings for AI models. Whereas other methods try to standardize results to be more objective, the arena opts for a more "human" approach, asking people to choose blindly between two replies provided by unidentified LLMs. These responses may appear unconventional by certain standards, but actual human users can evaluate them intuitively.
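To make the Elo idea concrete, here is a minimal sketch of how a single blind vote could shift two models' ratings under the textbook Elo formula. The K-factor of 32, the starting ratings, and the function names are illustrative assumptions; the article does not describe Chatbot Arena's exact computation.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Chance that model A is preferred over model B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return both ratings after one vote.

    score_a is 1.0 if the voter picked A, 0.0 if they picked B, 0.5 for a tie.
    k (assumed 32 here) controls how far a single vote moves the ratings.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


# Two hypothetical models start level; a voter prefers the first reply.
model_a, model_b = update_elo(1000.0, 1000.0, score_a=1.0)
print(model_a, model_b)
```

Because the expected score depends on the rating gap, an upset win over a much higher-rated model moves the ratings more than a win over an equal one.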

Mixtral has an impressive standing, surpassing industry giants like Anthropic's Claude 2.1; OpenAI's GPT-3.5, which powers the free version of ChatGPT; and Google’s Gemini, a multimodal LLM that was billed as the most powerful chatbot to challenge GPT-4’s dominance.

One of Mixtral's notable differentiators is being the only open-source LLM in the Chatbot Arena top 10. This distinction is not just a matter of ranking; it represents a significant shift in the AI industry towards more accessible and community-driven models. As reported by Decrypt, Mistral AI said its model "outperforms Llama 2 70B on most benchmarks with 6x faster inference and matches or outperforms GPT 3.5 on most standard benchmarks" such as MMLU, ARC-C, or GSM.

The secret behind Mixtral's success lies in its 'Mixture of Experts' (MoE) architecture. This technique employs multiple virtual expert models, each specializing in a distinct topic or field. When faced with a problem, Mixtral selects the most relevant experts from its pool, leading to more accurate and efficient outputs.

“At every layer, for every token, a router network chooses two of these groups (the ‘experts’) to process the token and combine their output additively,” Mistral explained in the LLM’s recently published paper. “This technique increases the number of parameters of a model while controlling cost and latency, as the model only uses a fraction of the total set of parameters per token.”
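The routing step Mistral describes can be sketched in a few lines. This is a toy illustration, not Mistral's implementation: the router scores are passed in directly (in a real model a learned layer would produce them), the eight "experts" are hypothetical scaling functions, and the name `top2_moe` is made up for this example. It picks the two highest-scoring experts, normalizes their scores with a softmax, and adds the weighted outputs together.

```python
import math


def softmax(values):
    """Numerically stable softmax over a short list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top2_moe(token, router_logits, experts):
    """Route one token vector through its two best-scoring experts.

    token: list of floats; router_logits: one score per expert (assumed given);
    experts: list of callables mapping a vector to a vector of the same length.
    """
    # Indices of the two experts with the highest router scores.
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:2]
    # Softmax over only the chosen scores, so the two weights sum to 1.
    weights = softmax([router_logits[i] for i in chosen])
    # Additive combination: only the two chosen experts are ever evaluated.
    output = [0.0] * len(token)
    for weight, index in zip(weights, chosen):
        expert_out = experts[index](token)
        output = [acc + weight * val for acc, val in zip(output, expert_out)]
    return output, chosen


# Eight toy experts: expert i simply multiplies the token by i.
toy_experts = [lambda vec, scale=scale: [scale * v for v in vec] for scale in range(8)]
logits = [0.0, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0, 2.0]
result, used = top2_moe([1.0, 2.0], logits, toy_experts)
print(result, used)
```

The other six experts are never called for this token, which is the sense in which the model "only uses a fraction of the total set of parameters per token."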

Furthermore, Mixtral stands out for its multilingual proficiency. The model excels in languages such as French, German, Spanish, Italian, and English, showcasing its versatility and wide-reaching potential. Its open-source nature, under the Apache 2.0 license, allows developers to freely explore, modify, and enhance the model, fostering a collaborative and innovative environment.

The success of Mixtral is clearly not just about technological prowess; it marks a small but important victory for the open-source AI community. Perhaps, in the not-so-distant future, the question won't be which model came first, or which one has more parameters or a longer context window, but which one truly resonates with people.

Edited by Ryan Ozawa.