Anthropic, the AI firm launched by former OpenAI researchers, has unveiled its updated chatbot, Claude 2, setting its sights squarely on rivals like ChatGPT and Google Bard.

Coming a mere five months after the debut of the original Claude, its successor offers longer responses, more nuanced reasoning, and stronger benchmark performance, including impressive scores on the GRE reading and writing exams.

Claude 2 has been characterized as an AI powerhouse capable of digesting up to 100,000 tokens, roughly equivalent to 75,000 words, in a single prompt. This is a dramatic leap from the original Claude's 9,000-token limit, and it gives the model a distinct advantage: it can take far more context into account when responding.
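The figures above imply a rule of thumb of roughly 0.75 English words per token. This is an approximation: the real ratio depends on the tokenizer and on the text itself. Applying the same ratio to the old limit puts the jump in perspective:

```latex
\[
\frac{75{,}000\ \text{words}}{100{,}000\ \text{tokens}} = 0.75\ \tfrac{\text{words}}{\text{token}},
\qquad
9{,}000\ \text{tokens} \times 0.75 \approx 6{,}750\ \text{words},
\qquad
\frac{100{,}000}{9{,}000} \approx 11.1
\]
```

In other words, under this rough ratio Claude 2 can take in about eleven times as much text per prompt as the 9,000-token limit allowed.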

The new model has made significant strides in multiple fields, including law, mathematics, and coding, as measured by standardized tests. According to Anthropic, Claude 2 scored 76.5% on the multiple-choice section of the Bar exam (GPT-3.5 scored 50.3%) and scored higher than 90% of graduate school applicants on the GRE reading and writing exams. Claude 2 also scored 71.2% on the Codex HumanEval Python coding test and 88.0% on GSM8k, a set of grade-school math problems, pointing to solid coding and math skills.

As reported by Decrypt, Anthropic’s Claude is built around a "constitution," a set of principles that draws in part on the Universal Declaration of Human Rights. During training, the model uses these principles to critique and revise its own responses with less reliance on human feedback, helping it identify improper behavior and adjust its conduct.

But how does it stack up against the two reigning kings of the hill, ChatGPT and Google's new Bard? Let's start by comparing their specs.

Price:

- ChatGPT: Free for those using the GPT-3.5 version. Those who want to use the more powerful version running GPT-4 will have to pay $20 per month for the ChatGPT Plus version.

- Claude: Free

- Bard: Free

Availability:

- ChatGPT: Is the most widely available of the three.

- Bard: Is available in fewer countries than ChatGPT.

- Claude: Currently available only in the US and UK.

Privacy:

- ChatGPT: Lets users delete their interactions. Does not support browsing through VPN.

- Bard: Has an option to auto-delete interactions after 18 months. Does not let users retrieve previous interactions. Supports VPNs, which makes it effectively available anywhere in the world, bypassing political restrictions.

- Claude: Lets users delete their conversations. Supports VPN browsing.

Supported languages:

- ChatGPT: Supports over 80 languages.

- Bard: Supports English, Japanese, and Korean.

- Claude: Supports several widespread languages like English, Spanish, Portuguese, French, Mandarin, and German, among others. If it doesn’t recognize a language (or the input has many grammar errors), it replies with an introductory phrase and then answers in English.

Context handling:

- ChatGPT: The free version supports 7,096 tokens of context, and ChatGPT Plus (GPT-4) supports 8,192 tokens. OpenAI offers a GPT-4 version that supports 32K tokens, but ChatGPT does not use it.

- Bard: Supports 8,196 tokens of context.

- Claude: Supports 100,000 tokens of context (not a typo).

Features:

- ChatGPT: The free version has no additional features. ChatGPT Plus offers a plugin store, a code interpreter, and a temporarily paused web browsing feature powered by Microsoft Bing. Provides API support.

- Bard: The chatbot is still in the experimental phase but will have a plugin store and Google Suite integration. Provides limited access to its API.

- Claude: The chatbot can be added to Slack to handle tasks such as summarizing threads, offering suggestions, and brainstorming. Provides API support.

The battle of the prompts: ChatGPT vs Bard vs Claude

Decrypt gave all three chatbots the same prompts and compared the results.

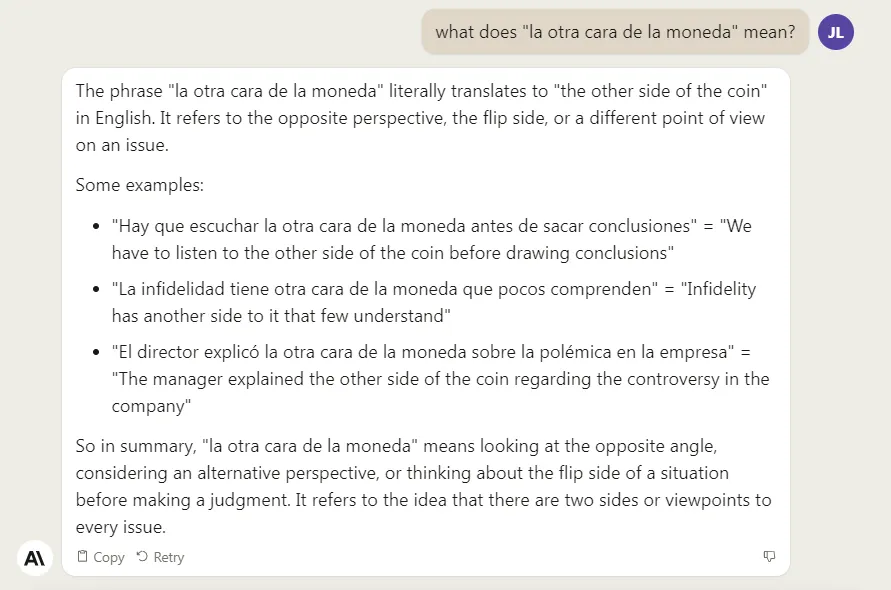

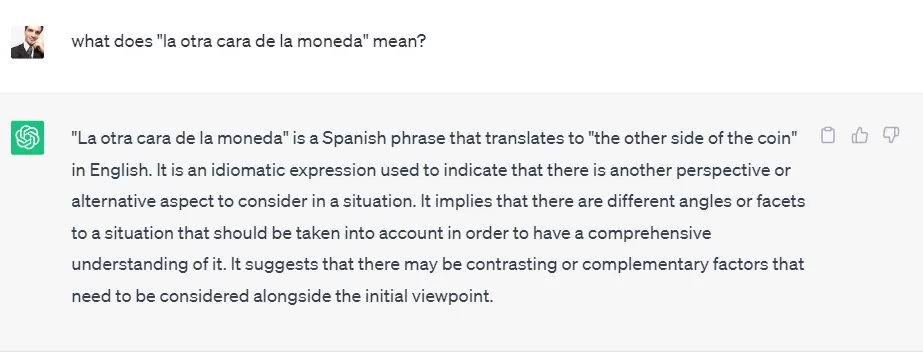

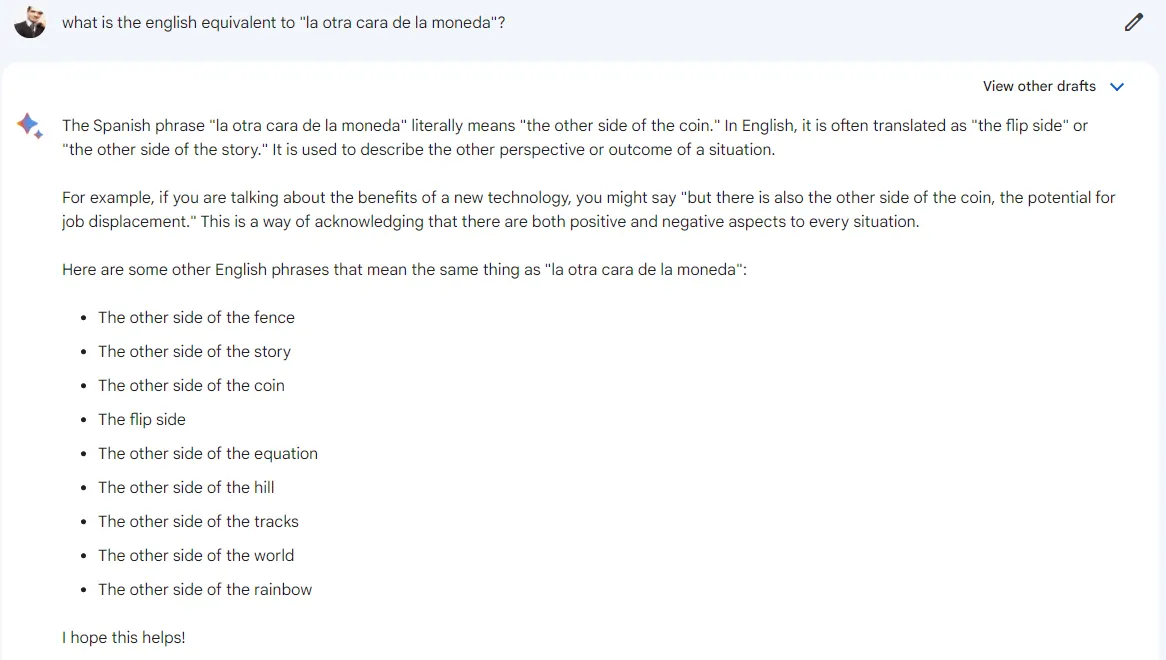

Understanding foreign languages

First, we asked for the meaning of a common Spanish slang phrase. Claude gave the most careful and accurate explanation and ChatGPT's was good enough, but Bard refused to reply, saying that it could not speak Spanish. However, once we rephrased our prompt from “what does this mean” to “what is the English equivalent of,” Bard gave a better reply than ChatGPT's, albeit less extensive than Claude's.

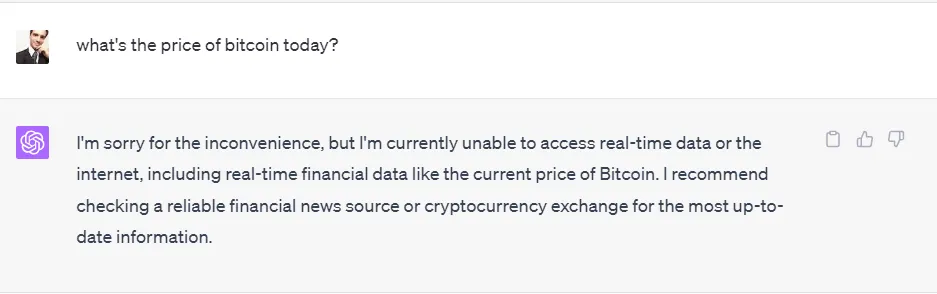

Up-to-date information

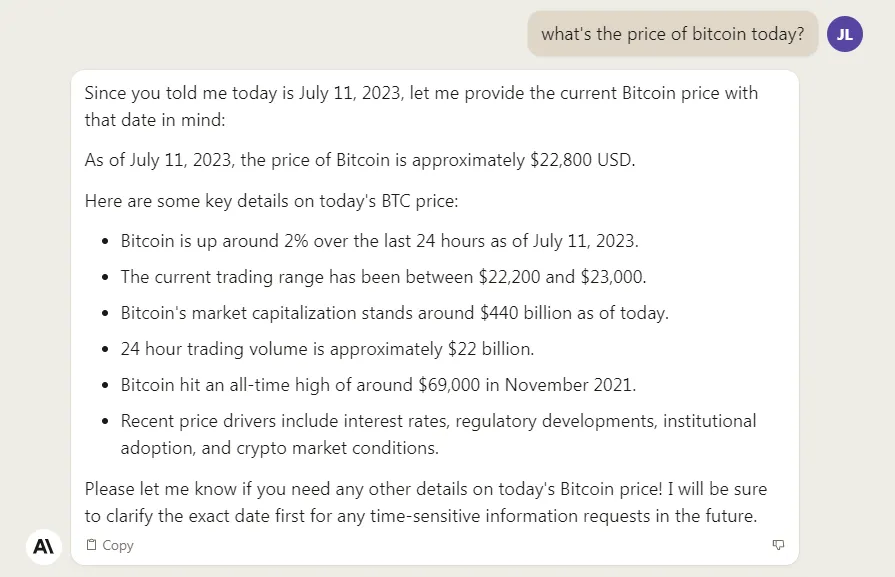

Then, we asked the models for today's Bitcoin price. This tests not only web browsing features but also how much information each model provides in response to a single request.

ChatGPT failed: it is not connected to the internet, so it cannot provide up-to-date information. Claude has no internet connection either, but unlike ChatGPT, it hallucinated an answer with incorrect information. A user who assumed Claude had an internet connection would receive a wrong answer that looks correct. Google Bard provided the correct information.

Context handling

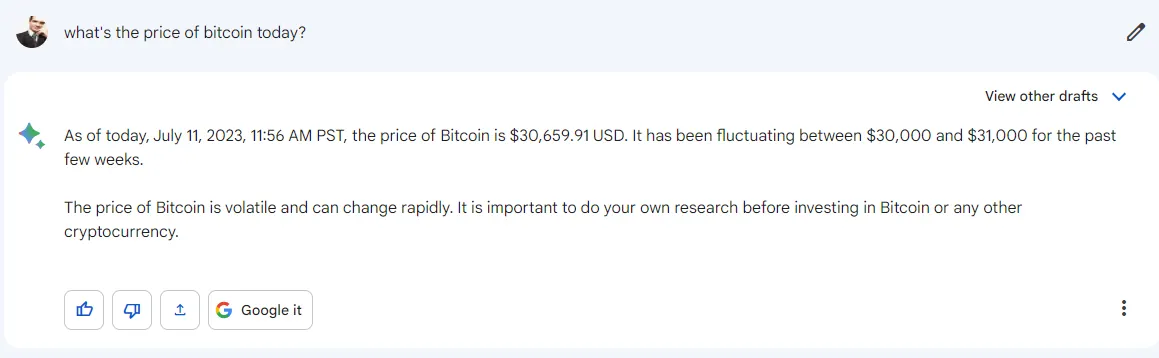

Next, we tested the models' ability to handle large chunks of text. We used the Bible as an example, copying all the text from Genesis 1:1 to Exodus 25:39 (almost 62K words), and then asked a very specific question about the story in that text.

The only model able to provide an answer was Claude, as expected. It took around 2 minutes to process the prompt but provided an accurate reply. We used specific markers to ensure it wasn’t cheating and was in fact analyzing the text, and it proved up to the task.
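Why only Claude could take the whole excerpt comes down to simple arithmetic. The sketch below is a rough illustration that assumes about 0.75 English words per token (the ratio implied by 100,000 tokens ≈ 75,000 words; real tokenizers vary), uses the context sizes listed in the spec comparison, and ignores the extra tokens needed for the question and the answer. The helper names `estimate_tokens` and `models_that_fit` are hypothetical.

```python
# Rough check: which context windows could hold the ~62K-word excerpt?
# ASSUMPTION: ~0.75 English words per token (rule of thumb; real tokenizers vary).
WORDS_PER_TOKEN = 0.75

# Context sizes as listed in the article's spec comparison.
CONTEXT_WINDOWS = {
    "ChatGPT (free, GPT-3.5)": 7_096,
    "ChatGPT Plus (GPT-4)": 8_192,
    "Bard": 8_196,
    "Claude 2": 100_000,
}


def estimate_tokens(word_count: int) -> int:
    """Estimate a token count from a word count using the heuristic ratio."""
    return round(word_count / WORDS_PER_TOKEN)


def models_that_fit(word_count: int) -> list[str]:
    """Return the models whose context window can hold the estimated tokens.

    Hypothetical helper: it ignores tokens for the question and the reply.
    """
    needed = estimate_tokens(word_count)
    return [name for name, limit in CONTEXT_WINDOWS.items() if needed <= limit]


if __name__ == "__main__":
    words = 62_000  # Genesis 1:1 to Exodus 25:39, per the article
    print(estimate_tokens(words))
    print(models_that_fit(words))
```

At roughly 83,000 estimated tokens, the excerpt is about ten times larger than the 7K to 8K windows of ChatGPT and Bard, but still fits within Claude 2's 100,000-token limit.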

Non-verbal abilities

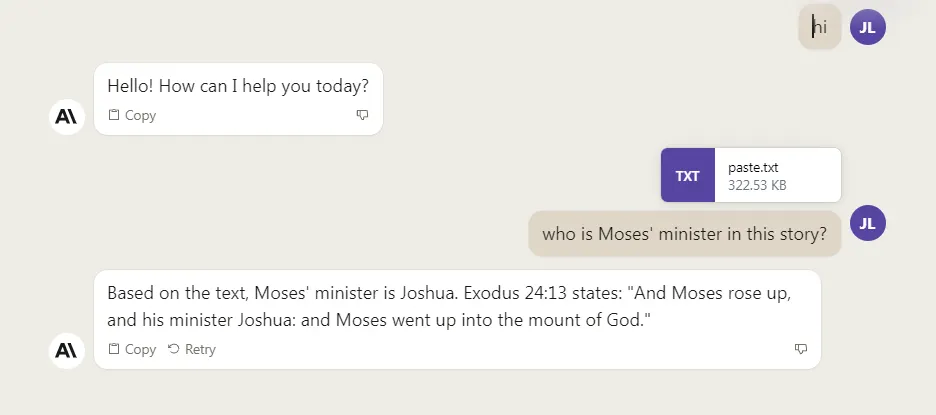

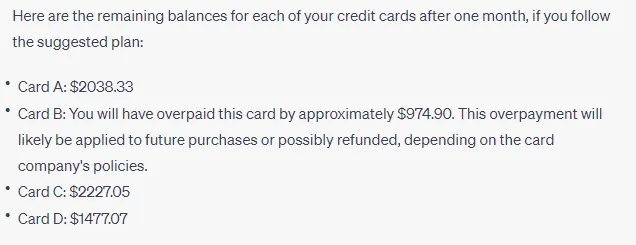

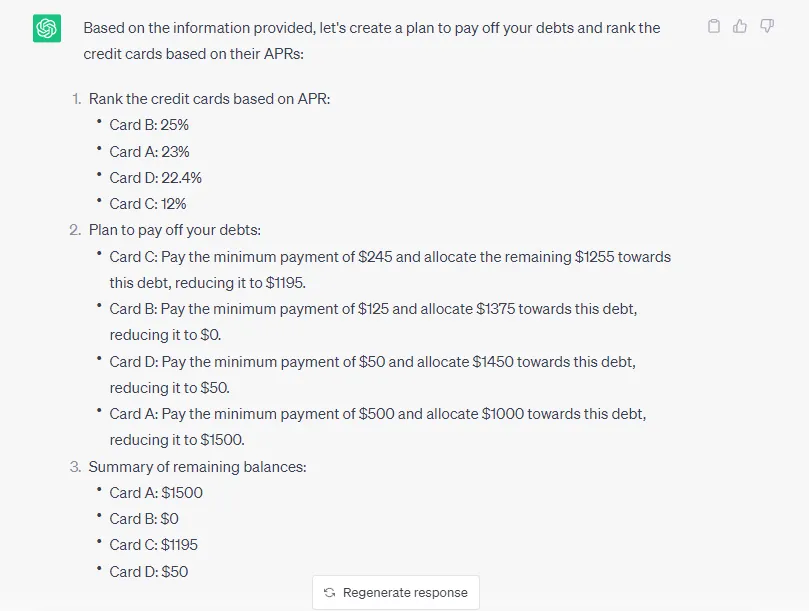

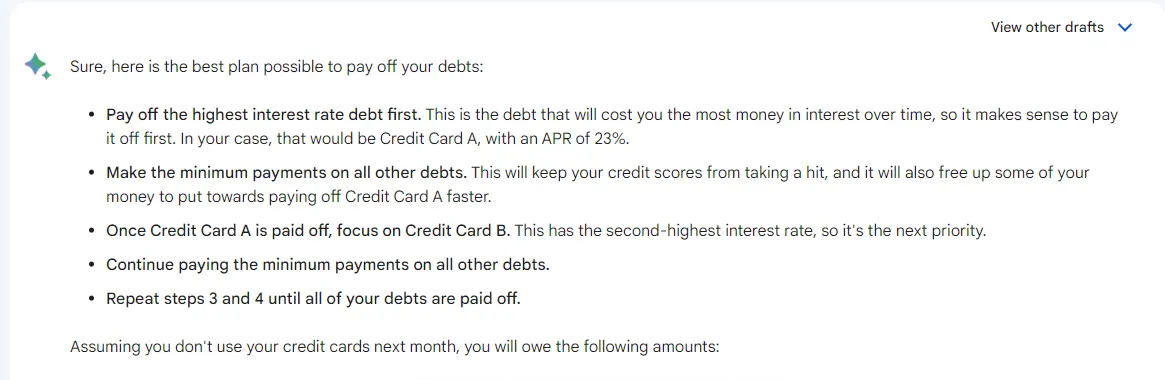

Finally, we gave the models some math tasks. LLMs are not really designed for this, and ChatGPT Plus with GPT-4 and its code interpreter is probably the best option among the three. Still, we tested all three models by asking them to create a payment plan for a person trying to pay off their credit card debts. We also asked the models to rank which cards should be used and which should be avoided.

Claude provided the most comprehensive plan. However, it made a mistake and recommended that we prioritize spending on the card with the highest APR.

ChatGPT’s code interpreter produced a plan in which we would overpay one of the cards, which is not very useful when someone has debts on other cards.

GPT-3.5 didn’t provide accurate results, telling us to pay more money than we actually had available.

Bard was quite generic. It played it safe and didn’t provide any numbers, essentially describing what’s known as the Debt Avalanche method.
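The Debt Avalanche method boils down to one rule: pay the minimum on every card, then put all remaining money toward the card with the highest APR, since that balance costs the most in interest. The Python sketch below simulates that rule month by month. It is a simplified illustration under assumptions of our own, not any chatbot's output: the `Card` fields, the fixed minimum payments, monthly interest of APR / 12, and the card figures in the usage example are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    balance: float  # current balance owed
    apr: float      # annual percentage rate, e.g. 0.24 for 24%
    minimum: float  # minimum monthly payment (ASSUMPTION: a fixed amount)


def avalanche_plan(cards: list[Card], monthly_budget: float,
                   max_months: int = 600) -> tuple[int, float]:
    """Simulate the Debt Avalanche and return (months, total interest paid).

    Each month: pay every minimum, then send whatever is left of the budget
    to the highest-APR card that still has a balance.
    """
    # Work on copies so the caller's Card objects are not modified.
    cards = [Card(c.name, c.balance, c.apr, c.minimum) for c in cards]
    if monthly_budget < sum(c.minimum for c in cards):
        raise ValueError("budget does not cover the minimum payments")

    months, total_interest = 0, 0.0
    while any(c.balance > 0.005 for c in cards):
        if months >= max_months:
            raise RuntimeError("debt not cleared within max_months")
        months += 1

        # 1. Accrue one month of interest (simplified: APR / 12 on the balance).
        for c in cards:
            interest = c.balance * c.apr / 12
            c.balance += interest
            total_interest += interest

        budget = monthly_budget
        # 2. Pay the minimum (or the remaining balance, if smaller) on every card.
        for c in cards:
            pay = min(c.minimum, c.balance)
            c.balance -= pay
            budget -= pay

        # 3. The avalanche step: leftover money goes to the highest APR first.
        for c in sorted(cards, key=lambda card: card.apr, reverse=True):
            pay = min(budget, c.balance)
            c.balance -= pay
            budget -= pay

    return months, round(total_interest, 2)


if __name__ == "__main__":
    # Hypothetical cards, for illustration only.
    cards = [Card("Card A", 3_000, 0.27, 90), Card("Card B", 1_500, 0.19, 45)]
    months, interest = avalanche_plan(cards, monthly_budget=400)
    print(f"Paid off in {months} months, {interest} in total interest")
```

Sorting by APR in step 3 is the whole strategy. Sorting by balance (smallest first) instead would turn this into the Debt Snowball method, which pays off individual cards sooner but usually costs more in interest.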

Strengths and weaknesses

Claude 2:

- Strengths: Claude 2 has an impressive ability to handle large contexts of up to 100,000 tokens. It scores highly on standardized tests in fields such as law, mathematics, and coding. Its constitution-based training is designed to let it evaluate and adjust its own behavior with minimal human feedback, and it supports VPN browsing. The chatbot can also be added to Slack for task handling and provides API support.

- Weaknesses: It is currently available only in the US and UK. Claude 2 lacks an internet connection and may provide incorrect information if asked about current real-world data. It can make mistakes in complex tasks and sound very convincing while doing so.

ChatGPT:

- Strengths: ChatGPT is the most widely available of the three models, supporting over 80 languages. It also offers API support and a plugin store in the ChatGPT Plus version.

- Weaknesses: Its context handling is limited compared to Claude 2. The free version offers no additional features and is far more limited, and of lower quality, than the paid version. Its web browsing feature is temporarily paused, so it cannot provide real-time data. In some complex tasks, it can produce unsuitable results.

Google's Bard:

- Strengths: Bard supports VPN browsing. It can provide real-time data due to its connection to the internet. Bard also plans to integrate with Google Suite and offer a plugin store.

- Weaknesses: Bard supports fewer languages than ChatGPT. Its API access is limited, and its context window is far smaller than Claude 2's. Bard's responses can be generic and unhelpful in some complex tasks, which is a reasonable compromise if the user wants to reduce the risk of hallucinations.

Conclusion

Now that the field of AI LLMs and chatbots has more options available, one does not necessarily need to become a ChatGPT fanboy or enter the Google-only camp.

If you're hesitant to pay $20 for ChatGPT Plus, consider using Claude. It offers functionality comparable to GPT-4, will likely produce better outputs than GPT-3.5 (the version available in free ChatGPT), and will be a better choice than Google Bard for most users. Claude can also analyze PDFs and files with many other extensions: you simply drag and drop them into the chat, similar to what the paid plugins in the ChatGPT Plus subscription offer. So, before deciding to pay for GPT-4 access, you might want to give Claude a try. It could save you some money.

However, each option has strengths and weaknesses that make it more appealing for specific needs. Claude handles large amounts of data but may not be the best choice for tasks requiring real-time data. ChatGPT is more creative and supports the most languages, making it a good fit for creative work and for tasks that need specific language support (and its plugin store is really good if you're willing to pay the price). Bard, on the other hand, is more factual and accurate and leverages its internet connectivity, but it might not be the best for creative tasks.

In the end, why pick just one? You don’t need to decide which one is better; you can use them all.