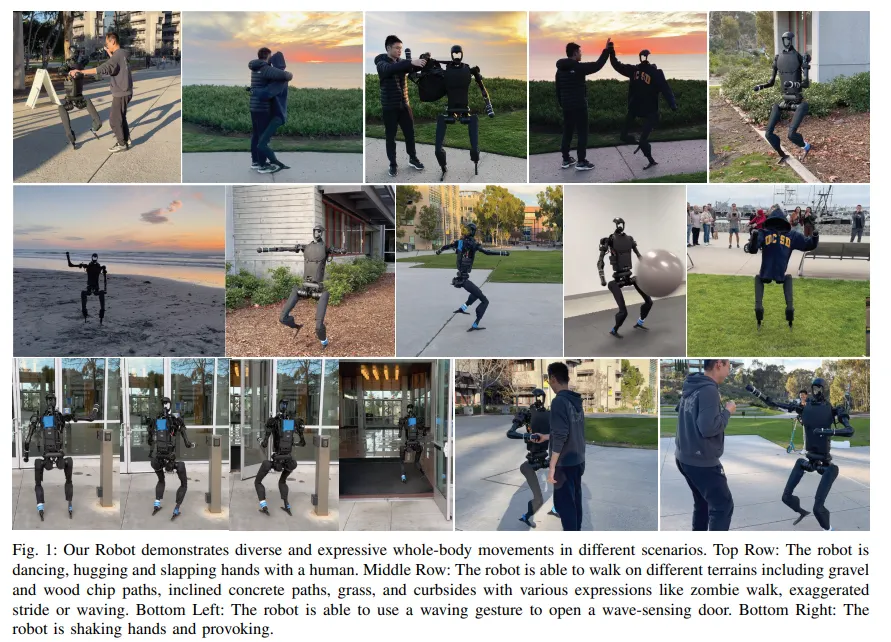

Rapid advancements in robotics can make the technology seem “terrifying,” so a team of engineers at the University of California San Diego is teaching a humanoid robot to perform expressive dance moves, both to improve its mobility and agility and to make such robots more welcome in human environments.

The team, led by Xiaolong Wang, a professor in the Department of Electrical and Computer Engineering at the UC San Diego Jacobs School of Engineering, used videos of people dancing and motion capture technology to train the robot's upper body.

Beyond mobility improvements, the idea was to make robots look more natural.

"We aim to build trust and showcase the potential for robots to coexist in harmony with humans," Wang said in a report published by the university. "We are working to help reshape public perceptions of robots as friendly and collaborative rather than terrifying like The Terminator."

The research aims to enhance human-robot interactions, with potential implications for diverse settings including factory assembly lines, hospitals, homes, and hazardous environments like laboratories and disaster sites.

As explained in the project’s research paper, the training process involved a two-pronged approach. The robot's upper body was trained to perform expressive movements using motion capture data and dance videos, while the lower body was simply focused on stability and balance.

“Our key idea is to not mimic exactly the same as the reference motion,” the research paper explains. “During training... we encourage the upper body of the humanoid robot to imitate diverse human motions for expressiveness, while relaxing the motion imitation term for its two legs.”
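To make the quoted idea concrete, here is a minimal sketch of a reward that tracks the reference motion for the upper body while relaxing the imitation term for the legs. The article does not give the paper's actual reward terms, so the joint names, the exponential form, the `scale` value, and the `upright` balance term are all assumptions for illustration.

```python
import math

# Hypothetical joint names, chosen only for illustration.
UPPER_BODY = ["shoulder_l", "shoulder_r", "elbow_l", "elbow_r"]
LEGS = ["hip_l", "hip_r", "knee_l", "knee_r"]


def imitation_reward(robot, reference, leg_weight=0.0, scale=2.0):
    """Return exp(-scale * weighted squared joint error), a value in (0, 1].

    Upper-body joints always count fully. Leg joints are scaled by
    leg_weight, so leg_weight=0.0 removes the leg imitation term entirely.
    """
    error = 0.0
    for joint in UPPER_BODY:
        error += (robot[joint] - reference[joint]) ** 2
    for joint in LEGS:
        error += leg_weight * (robot[joint] - reference[joint]) ** 2
    return math.exp(-scale * error)


def total_reward(robot, reference, upright, leg_weight=0.0):
    """Add an assumed balance score (upright, in [0, 1]) to the imitation term."""
    return imitation_reward(robot, reference, leg_weight) + upright
```

With `leg_weight=0.0`, a robot whose arms match the reference receives the full imitation reward even if its legs deviate to stay balanced. Raising `leg_weight` toward 1.0 would penalize that deviation and push the policy back toward exact mimicry.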

This method allowed the robot to replicate various reference motions, such as dancing, waving, high-fiving, and hugging, while its legs kept it balanced during those movements.

Despite the separate training of the upper and lower body, the robot applies its knowledge to control its entire structure as a single unit. This approach, which the researchers named “Expressive Whole-Body Control,” made it possible for the robot to move steadily on surfaces like gravel, dirt, wood chips, grass, and inclined concrete paths even while performing different gestures with its upper body.

The team used this approach to make the robot capable of adapting to different conditions, including ones that were not in the training dataset.

“We train our policy in highly randomized challenging terrains in simulation,” the researchers said. “This not only allows robust sim2real transfer but also learns a policy that does not just ‘repeat’ the given motion.”
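The randomized-terrain training the researchers describe can be sketched as sampling fresh terrain parameters for every simulated episode. The article does not list the terrain types or parameter ranges the team used, so everything below (the type names, the ranges, and the helper functions) is hypothetical.

```python
import random

# Hypothetical terrain types and ranges, chosen only for illustration.
TERRAIN_TYPES = ["flat", "rough", "slope", "stairs"]


def sample_terrain(rng):
    """Draw one random terrain configuration for a simulated episode."""
    return {
        "type": rng.choice(TERRAIN_TYPES),
        "friction": rng.uniform(0.4, 1.2),
        "roughness_m": rng.uniform(0.0, 0.05),
        "slope_deg": rng.uniform(-15.0, 15.0),
    }


def sample_episodes(num_episodes, seed=0):
    """Return a reproducible list of terrain configurations, one per episode."""
    rng = random.Random(seed)
    return [sample_terrain(rng) for _ in range(num_episodes)]
```

Because the policy rarely sees the same ground twice, it cannot succeed by replaying a fixed motion and has to adjust its legs to whatever surface it meets, which is the intuition behind the robust sim2real transfer the researchers mention.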

The potential benefits of this research could be far-reaching. In settings like hospitals and homes, robots with enhanced expressiveness and agility could provide assistance and companionship, potentially improving the quality of life for patients and the elderly. In hazardous environments, these robots could replace humans in dangerous tasks, improving safety.

This project is not isolated in its pursuit of improved robot mobility. At Rice University, scientists have created an interactive program called “Bayesian Learning in the Dark” (BLIND) to aid complex robots in motion planning within environments filled with obstacles. Meanwhile, researchers have developed a four-legged robot capable of running on sand faster than humans can jog on solid ground, demonstrating the potential for robots to maintain stability and efficiency on mixed terrain.

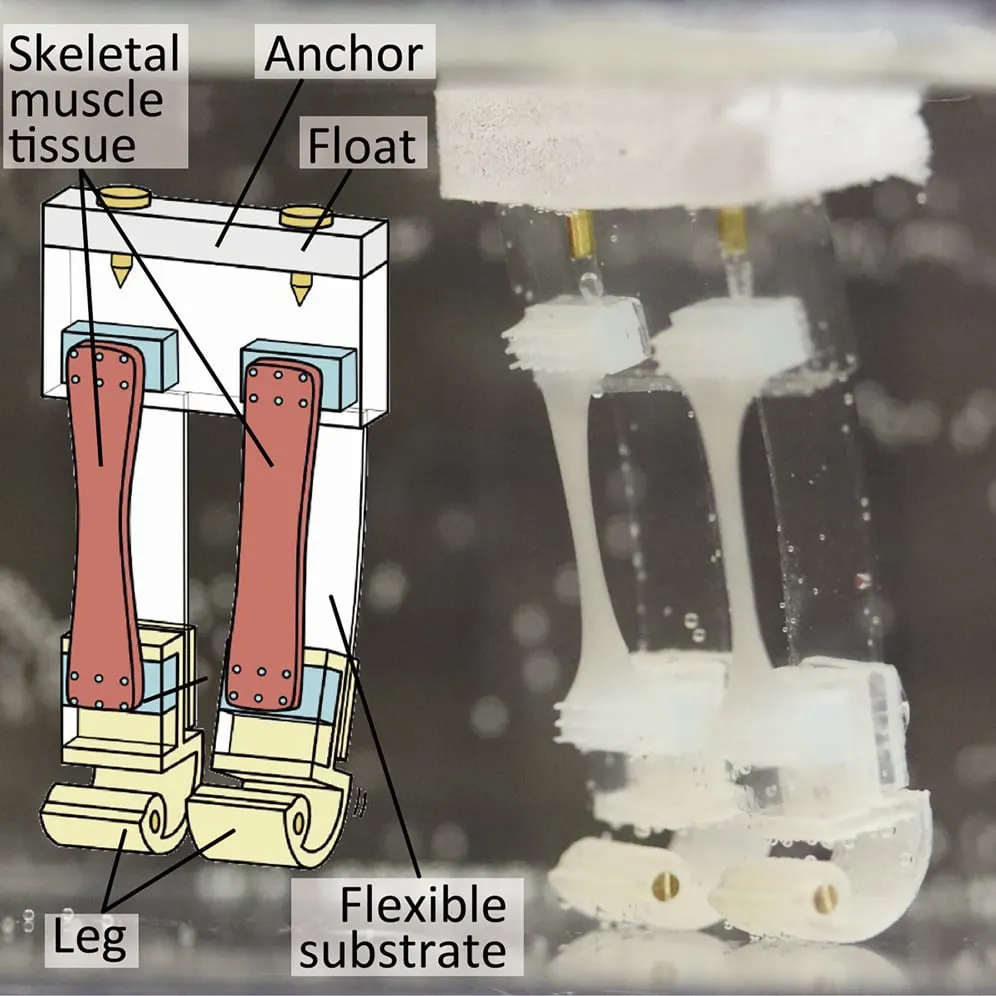

Yet another team of researchers recently developed a robot design that mimics skeletal muscle tissue, with the aim of building more capable bipedal robots.

Looking to the future, Wang and his team aim to refine the robot's design to perform more intricate and fine-grained tasks. They plan to equip robots with cameras for autonomous operation and greater adaptability to different environments.

"By extending the capabilities of the upper body, we can expand the range of motions and gestures the robot can perform," said Wang.

Edited by Ryan Ozawa.