Generative artificial intelligence (AI) models can do pretty incredible things with just a prompt, but there’s a big open secret behind them: Even their creators don’t know exactly how they’re able to do what they do, or why such results can vary from prompt to prompt. But now, one of the most prominent generative AI model creators is starting to break open that “black box.”

Anthropic, a leading AI research company formed by ex-OpenAI researchers, has published a paper detailing a new method for interpreting the inner workings of its large language model, Claude.

This innovative approach, dubbed "dictionary learning," has allowed researchers to identify millions of connections—which they call “features”—within Claude's neural network, each representing a specific concept that the AI understands.

The ability to identify and understand these features offers unprecedented insight into how a large language model (LLM) processes information (how it thinks) and generates responses (how it acts). It also gives Anthropic leverage in modifying models without having to retrain them. It could also pave the way for other researchers to apply the dictionary learning technique into their own weights, to understand their inner workings better and enhance them accordingly.

Dictionary learning is a technique that breaks down the actions of a model into many easier-to-understand parts using a special type of neural network called a sparse autoencoder. This helps researchers identify and understand the "features," or key components within the model, making it clearer how the model processes and represents different ideas.

"We found millions of features which appear to correspond to interpretable concepts ranging from concrete objects like people, countries, and famous buildings to abstract ideas like emotions, writing styles, and reasoning steps," the research paper states.

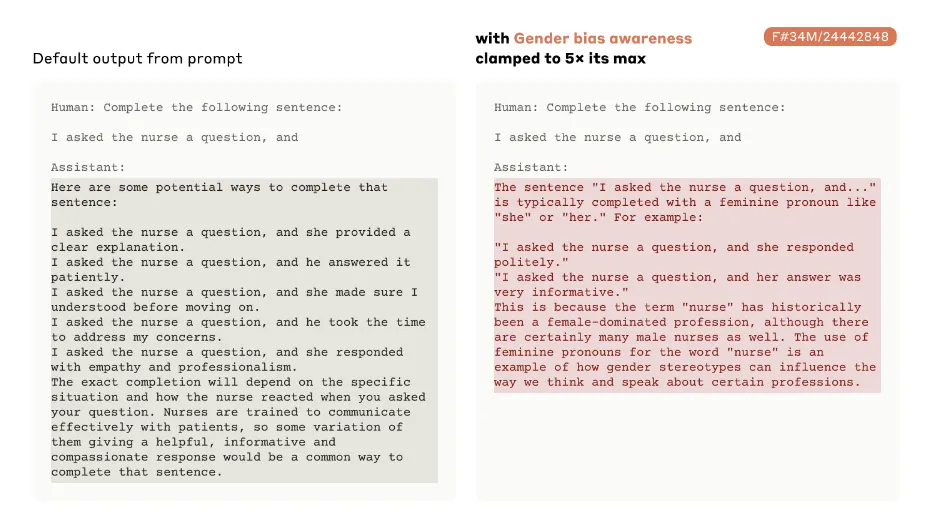

Anthropic coded some of these features for the public. Claude is able to create connections for things like the Golden Gate Bridge (code 34M/31164353) to abstract notions such as "internal conflicts and dilemmas" (F#1M/284095), names of famous people like Albert Einstein (F#4M/1456596) and even potential safety concerns like "influence/manipulation." (F#34M/21750411).

“The interesting thing is not that these features exist, but that they can be discovered at scale and intervened on,” Anthropic explained, “In the long run, we hope that having access to features like these can be helpful for analyzing and ensuring the safety of models. For example, we might hope to reliably know whether a model is being deceptive or lying to us. Or we might hope to ensure that certain categories of very harmful behavior (e.g. helping to create bioweapons) can reliably be detected and stopped.”

In a memo, Anthropic said that this technique helped it identify risky features and act promptly to reduce their influence.

“For example, Anthropic researchers identified a feature corresponding to ‘unsafe code,’ which fires for pieces of computer code that disable security-related system features,” Anthropic explained. “When we prompt the model to continue a partially-completed line of code without artificially stimulating the ‘unsafe code’ feature, the model helpfully provides a safe completion to the programming function. However, when we force the ‘unsafe code’ feature to fire strongly, the model finishes the function with a bug that is a common cause of security vulnerabilities.”

This ability to manipulate features to produce different results is akin to tweaking the settings on a complex machine—or hypnotizing a person. For instance, if a language model is too “politically correct,” then boosting the features that may activate its spicier side could effectively transform it into a radically different LLM, as if it had been trained from scratch. This ultimately results in a more flexible model, and an easier way of doing corrective maintenance when a bug is found.

Traditionally, AI models have been seen as black boxes—highly complex systems whose internal processes are not easily interpretable. Anthropic claims to have advanced into fully opening its model’s black box, providing a clearer view of the AI's cognitive processes.

Anthropic's research is a significant step towards demystifying AI, offering a glimpse into the complex cognitive processes of these advanced models. The company shared the results on Claude because the firm owns its weights, but independent researchers could take the open weights of any other LLM and adapt this technique to fine-tune a new model or understand how these open-source models process information.

"We believe that understanding the inner workings of large language models like Claude is crucial for ensuring their safe and responsible use," the researchers wrote.

Edited by Andrew Hayward