Vitalik Buterin, the mastermind behind Ethereum, has called for a more cautious approach to AI research, describing the current rush forward as "very risky." Responding to a critique of OpenAI and its leadership by Ryan Selkis, CEO of crypto intelligence firm Messari, the creator of the world's second most influential blockchain outlined his views on AI alignment, the core principles that should drive development.

"Superintelligent AI is very risky, and we should not rush into it, and we should push against people who try,” Buterin asserted. “No $7 trillion server farms, please.”

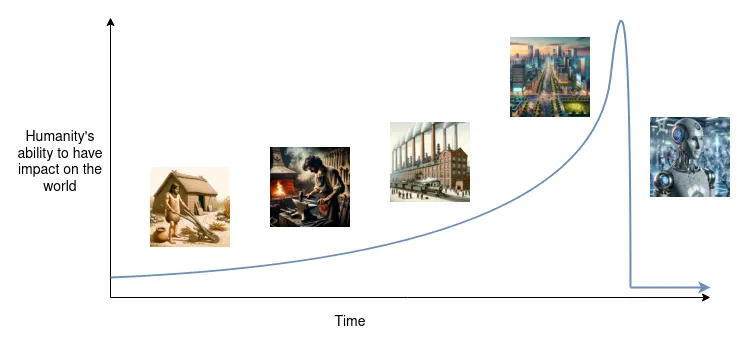

Superintelligent AI is a theoretical form of artificial intelligence that surpasses human intelligence in virtually all domains. Many see artificial general intelligence (AGI) as the final realization of the emerging technology's full potential; superintelligent models would be what comes next. Today's state-of-the-art AI systems have not yet reached these thresholds, but advances in machine learning, neural networks, and other AI-related technologies continue, and people are by turns excited and worried.

My current views:

1. Superintelligent AI is very risky and we should not rush into it, and we should push against people who try. No $7T server farms plz.

2. A strong ecosystem of open models running on consumer hardware are an important hedge to protect against a future where…

vitalik.eth (@VitalikButerin), May 21, 2024

After Selkis tweeted that “AGI is too important for us to deify another smooth-talking narcissist,” Buterin emphasized the importance of a diverse AI ecosystem to avoid a world where the immense value of AI is owned and controlled by very few people.

“A strong ecosystem of open models running on consumer hardware [is] an important hedge to protect against a future where value captured by AI is hyper-concentrated and most human thought becomes read and mediated by a few central servers controlled by a few people,” he said. “Such models are also much lower in terms of doom risk than both corporate megalomania and militaries.”

The Ethereum creator has been closely following the AI scene, recently praising the open-source Llama 3 LLM. He also suggested that OpenAI’s GPT-4o multimodal LLM could have passed the Turing test, after a study argued that human responses were indistinguishable from those generated by the AI.

Buterin also addressed the categorization of AI models into "small" and "large" groups, calling a regulatory focus on "large" models a reasonable priority. However, he expressed concern that many current proposals could result in everything being classified as "large" over time.

Buterin's remarks come amid heated debates surrounding AI alignment and the resignations of key figures in OpenAI's superalignment research team. Ilya Sutskever and Jan Leike have left the company, with Leike accusing OpenAI CEO Sam Altman of prioritizing "shiny products" over responsible AI development.

It was separately revealed that OpenAI has stringent non-disclosure agreements (NDAs) that prevent its employees from discussing the company after their departure.

High-level, long-view debates about superintelligent AI are becoming more urgent, with experts expressing concern while making widely varying recommendations.

Paul Christiano, who formerly led the language model alignment team at OpenAI, has established the Alignment Research Center, a non-profit organization dedicated to aligning AI and machine learning systems with "human interests." As reported by Decrypt, Christiano suggested that there could be a "50/50 chance of catastrophe shortly after we have systems at the human level."

On the other hand, Yann LeCun, Meta's chief AI scientist, believes that such a catastrophic scenario is highly unlikely. In an April 2023 tweet, he stated that the "hard take-off" scenario is "utterly impossible." LeCun asserted that short-term AI developments significantly influence the way AI evolves, shaping its long-term trajectory.

Buterin, by contrast, thinks of himself as a centrist. In a 2023 essay, which he reaffirmed today, he recognized that "it seems very hard to have a 'friendly' superintelligent-AI-dominated world where humans are anything other than pets," but also argued that "often, it really is the case that version N of our civilization's technology causes a problem, and version N+1 fixes it. However, this does not happen automatically, and requires intentional human effort." In other words, if superintelligence becomes an issue, humans would probably find a way to deal with it.

The departure of OpenAI's more cautious alignment researchers and the company’s shifting approach to safety policies have raised broader, mainstream concerns about the lack of attention to ethical AI development among major AI startups. Indeed, Google, Meta, and Microsoft have also reportedly disbanded their teams responsible for ensuring that AI is being safely developed.

Edited by Ryan Ozawa.