An artificial intelligence (AI) model developed in China is making waves on several fronts, including its open-source nature and its ability to handle up to 200,000 tokens of context, vastly exceeding other popular models like Anthropic's Claude (100,000 tokens) or OpenAI's GPT-4 Turbo (128,000 tokens).

Dubbed the Yi series, the generative chatbot was created by Beijing Lingyi Wanwu Information Technology Company in its AI lab, 01.AI. The large language model (LLM) comes in two versions: the lightweight Yi-6B-200K and the more robust Yi-34B-200K. Both can retain immense conversational context and understand English and Mandarin.

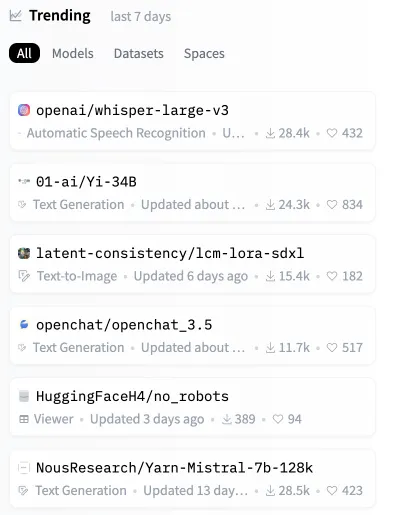

Just hours after its release, the Yi model rocketed up the charts to become the second most popular open-source model on Hugging Face, a key repository for AI models.

Despite handling huge context prompts, the Yi models are also efficient and accurate, beating other LLMs in several synthetic benchmarks.

"Yi-34B outperforms much larger models like LLaMA2-70B and Falcon-180B; also Yi-34B’s size can support applications cost-effectively, thereby enabling developers to build fantastic projects," explains 01.AI on its website. According to a scoreboard shared by the developers, the most powerful Yi model showed strong performance in reading comprehension, common-sense reasoning, and common AI tests like Gaokao and C-eval.

LLMs like the Yi series analyze text input and generate text output. They do so by processing “tokens,” or units of text, which can be as small as a word or a part of a word.
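
To make the idea of a token concrete, here is a minimal sketch of how a word can be split into smaller pieces. It is a toy greedy longest-match splitter with a made-up vocabulary (`TOY_VOCAB` and `tokenize` are hypothetical names used only for illustration). It is not the tokenizer the Yi models use, and real tokenizers are trained on large text corpora.

```python
def tokenize(text, vocab):
    """Split text into tokens using greedy longest-match against vocab.

    Illustrative only: production LLM tokenizers use learned vocabularies
    and more elaborate rules.
    """
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining substring first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                # Fall back to a single character so the loop always advances.
                if piece in vocab or end - start == 1:
                    tokens.append(piece)
                    start = end
                    break
    return tokens


# Hypothetical toy vocabulary, chosen by hand for this example.
TOY_VOCAB = {"the", "token", "s", "context", "un", "believ", "able"}

print(tokenize("the tokens", TOY_VOCAB))
print(tokenize("unbelievable context", TOY_VOCAB))
```

In this toy setup, "tokens" becomes two tokens ("token" and "s") and "unbelievable" becomes three, which is why a token count is usually higher than a word count.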

In practice, “200K tokens of context” means the model can understand and respond to significantly longer prompts, ones that previously would have overwhelmed even the most advanced LLMs. The Yi series can handle extensive prompts that include more complex and detailed information without failing.

A recent third-party analysis, however, points out a limitation in this area. When a prompt occupies more than 65% of the Yi model's capacity, it can struggle to retrieve accurate information. If the prompt is kept well below this threshold, though, the Yi series performs admirably, even in scenarios that cause degradation in models like Claude and ChatGPT.

> Pressure Testing GPT-4-128K With Long Context Recall
>
> 128K tokens of context is awesome - but what's performance like?
>
> I wanted to find out so I did a “needle in a haystack” analysis
>
> Some expected (and unexpected) results
>
> Here's what I found:
>
> Findings:
>
> * GPT-4’s recall… pic.twitter.com/nHMokmfhW5
>
> Greg Kamradt (@GregKamradt), November 8, 2023
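
The 65% figure cited above translates into a simple token budget. The sketch below works through that arithmetic, using the 200,000-token window and the 65% threshold as reported in this article. The function names are hypothetical, and the threshold is an observation from one third-party analysis, not a documented limit of the model.

```python
CONTEXT_WINDOW = 200_000      # tokens, as stated for the Yi 200K models
RECALL_THRESHOLD_PCT = 65     # percent, per the third-party analysis


def max_reliable_tokens(window=CONTEXT_WINDOW, pct=RECALL_THRESHOLD_PCT):
    """Largest prompt size (in tokens) that stays at or below the threshold."""
    # Integer arithmetic avoids floating-point rounding at the boundary.
    return window * pct // 100


def within_recall_budget(prompt_tokens, window=CONTEXT_WINDOW,
                         pct=RECALL_THRESHOLD_PCT):
    """True if the prompt uses no more than pct percent of the window."""
    return prompt_tokens <= max_reliable_tokens(window, pct)


print(max_reliable_tokens())          # 130000
print(within_recall_budget(100_000))  # True
print(within_recall_budget(150_000))  # False
```

Under these assumptions, prompts of up to about 130,000 tokens stay within the range where the analysis found reliable retrieval.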

A key differentiator for Yi is that it is fully open source, allowing users to run it locally on their own systems. This gives them greater control, lets them modify the model architecture, and removes any reliance on external servers.

"We predict that AI 2.0 will create a platform opportunity ten times larger than the mobile internet, rewriting all software and user interfaces," 01.AI states. "This trend will give rise to the next wave of AI-first applications and AI-empowered business models, fostering AI 2.0 innovations over time."

By open-sourcing such a capable model, 01.AI empowers developers worldwide to build the next generation of AI. With immense context handling in a customizable package, we can expect a torrent of innovative applications utilizing Yi.

The potential is sky-high for open-source models like Yi-6B-200K and Yi-34B-200K. As AI permeates our lives, locally run systems promise greater transparency, security, and customizability compared to closed alternatives dependent on the cloud.

While Claude and GPT-4 Turbo grab headlines, this new open-source alternative may soon build AI's next stage right on users' devices. Just when it seemed like there were no remaining ways to upgrade our hardware, it might be time to shop for a more capable device before you find your local AI outclassed by a more "context-aware" competitor.