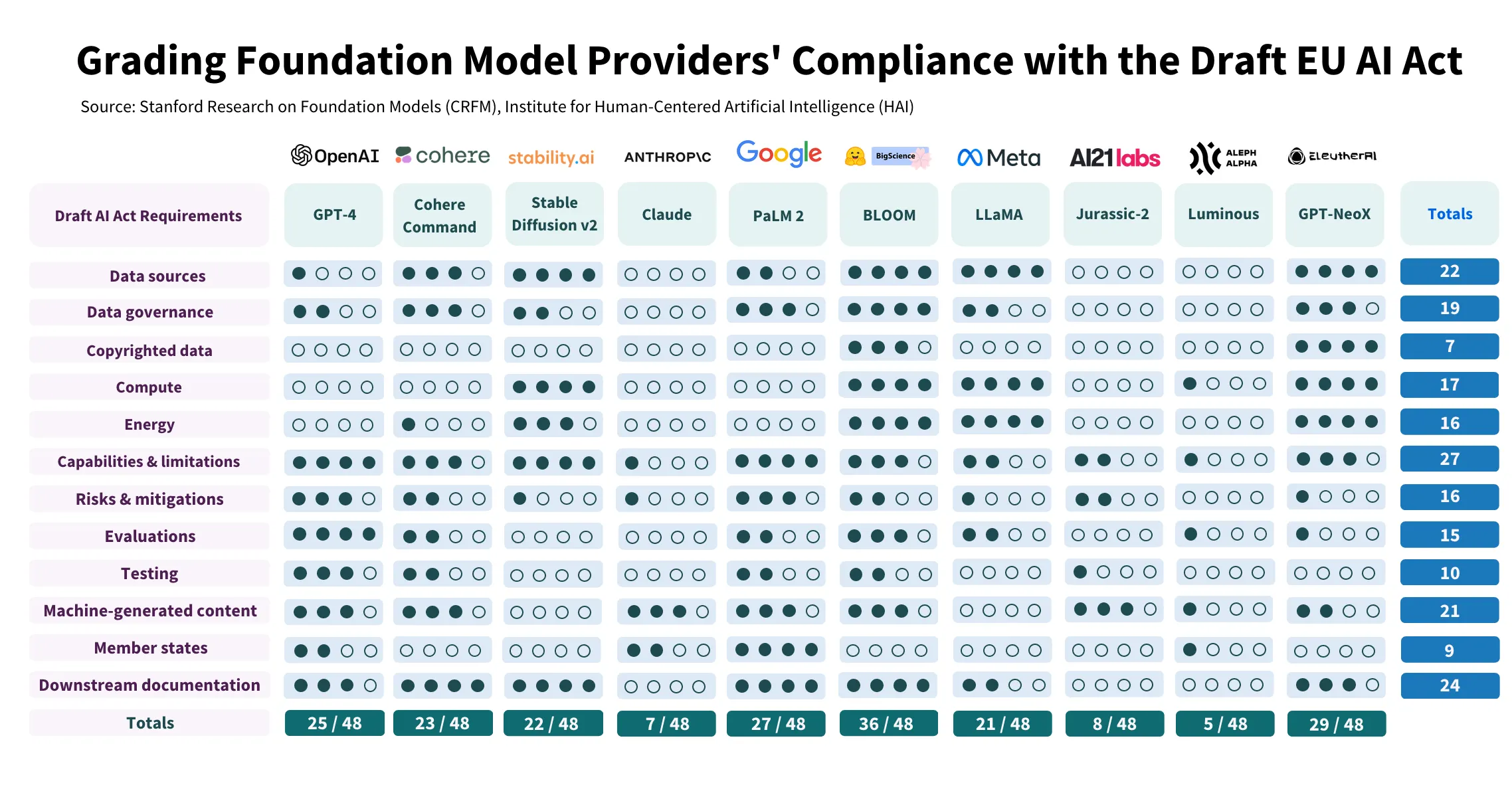

Stanford University researchers recently concluded that no current large language models (LLMs) used in AI tools like OpenAI's GPT-4 and Google's Bard are compliant with the European Union (EU) Artificial Intelligence (AI) Act.

The Act, the first of its kind to govern AI at a national and regional level, was just adopted by the European Parliament. The EU AI Act not only regulates AI within the EU, encompassing 450 million people, but also serves as a pioneering blueprint for worldwide AI regulations.

But, according to the latest Stanford study, AI companies have a long road ahead of them if they intend to achieve compliance.

In their investigation, the researchers assessed ten major model providers. They evaluated the degree of each provider's compliance with the 12 requirements outlined in the AI Act on a 0 to 4 scale.

The study revealed a wide discrepancy in compliance levels, with some providers scoring less than 25% for meeting the AI Act requirements, and only one provider, Hugging Face/BigScience, scoring above 75%.
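To make the scoring concrete: 12 requirements scored from 0 to 4 give a maximum of 48 points, so 25% corresponds to 12 points and 75% to 36. The short Python sketch below shows that arithmetic. The function name and the example scores are hypothetical illustrations, not figures from the study or any provider's actual results.

```python
# Sketch of the rubric arithmetic described above.
# The scores used below are hypothetical, NOT taken from the Stanford study.

NUM_REQUIREMENTS = 12      # requirements assessed per provider
MAX_PER_REQUIREMENT = 4    # each requirement is scored 0 to 4
MAX_TOTAL = NUM_REQUIREMENTS * MAX_PER_REQUIREMENT  # 48 points


def compliance_summary(scores):
    """Return (total points, percentage of the 48-point maximum)."""
    if len(scores) != NUM_REQUIREMENTS:
        raise ValueError(f"expected {NUM_REQUIREMENTS} scores, got {len(scores)}")
    if any(not 0 <= s <= MAX_PER_REQUIREMENT for s in scores):
        raise ValueError("each score must be between 0 and 4")
    total = sum(scores)
    return total, 100 * total / MAX_TOTAL


# Hypothetical provider, for illustration only.
hypothetical_scores = [4, 3, 2, 0, 1, 4, 3, 2, 2, 1, 0, 3]
total, percent = compliance_summary(hypothetical_scores)
print(f"{total}/{MAX_TOTAL} ({percent:.1f}%)")  # prints "25/48 (52.1%)"
```

Under this arithmetic, a provider scoring below 25% earned fewer than 12 of the 48 points, while one scoring above 75% earned more than 36.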

Clearly, even for the high-scoring providers, there is room for significant improvement.

The study sheds light on some crucial points of non-compliance. Among the most concerning findings, the researchers wrote, was a lack of transparency in disclosing the status of copyrighted training data, the energy used, the emissions produced, and the methodology for mitigating potential risks.

Furthermore, the team found an apparent disparity between open and closed model releases, with open releases leading to more robust disclosure of resources but involving greater challenges in monitoring or controlling deployment.

Stanford concluded that all providers could feasibly enhance their conduct, regardless of their release strategy.

In recent months, there has been a noticeable reduction in transparency in major model releases. OpenAI, for instance, made no disclosures regarding data and compute in its reports for GPT-4, citing the competitive landscape and safety implications.

Europe’s AI Regulations Could Shift the Industry

While these findings are significant, they also fit a broader developing narrative. Recently, OpenAI has been lobbying to influence the stance of various countries towards AI. The tech giant even threatened to leave Europe if the regulations were too stringent—a threat it later rescinded. Such actions underscore the complex and often fraught relationship between AI technology providers and regulatory bodies.

The researchers proposed several recommendations for improving AI regulation. For EU policymakers, these include ensuring that the AI Act imposes transparency and accountability requirements on larger foundation model providers. The researchers also highlighted the need for technical resources and talent to enforce the Act, reflecting the complexity of the AI ecosystem.

According to the researchers, the main challenge lies in how quickly model providers can adapt and evolve their business practices to meet regulatory requirements. Even without strong regulatory pressure, they observed, many providers could achieve total scores in the high 30s or 40s (out of 48 possible points) through meaningful but plausible changes.

The researchers' work offers an insightful look into the future of AI regulation. They argue that if enacted and enforced, the AI Act will yield a significant positive impact on the ecosystem, paving the way for more transparency and accountability.

AI is transforming society with its unprecedented capabilities and risks. As the world stands on the cusp of regulating this game-changing technology, it's becoming increasingly clear that transparency isn't merely an optional add-on—it's a cornerstone of responsible AI deployment.