Brace yourselves: AI may cause humankind to go extinct.

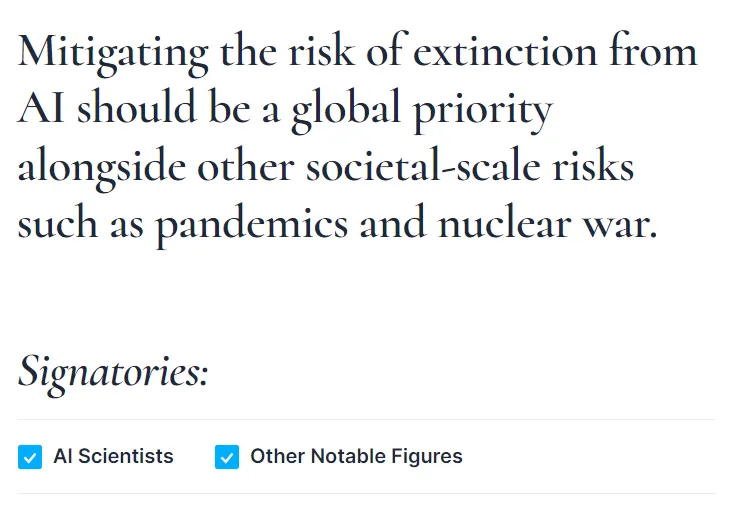

On Tuesday, hundreds of AI industry leaders and researchers, including executives from Microsoft, Google, and OpenAI, sounded a sobering warning. They claim that the artificial intelligence technology they are building could one day pose a real danger to humanity's existence. Alongside the horrors of pandemics and nuclear war, they consider AI a societal risk of similar magnitude.

In a letter published by the Center for AI Safety, the AI specialists offered a statement notable for its stark brevity: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

That’s it. That’s all they say.

The statement paints AI as an imminent threat, akin to a nuclear disaster or a global pandemic. But the signatories, these wizards of the technology industry, failed to expand upon their ominous warning.

How exactly is this end-of-days scenario supposed to go down? When should we mark our calendars for the rise of our robot overlords? Why would AI, an invention of human ingenuity, turn on its creators? The silence from these architects of artificial intelligence was resounding.

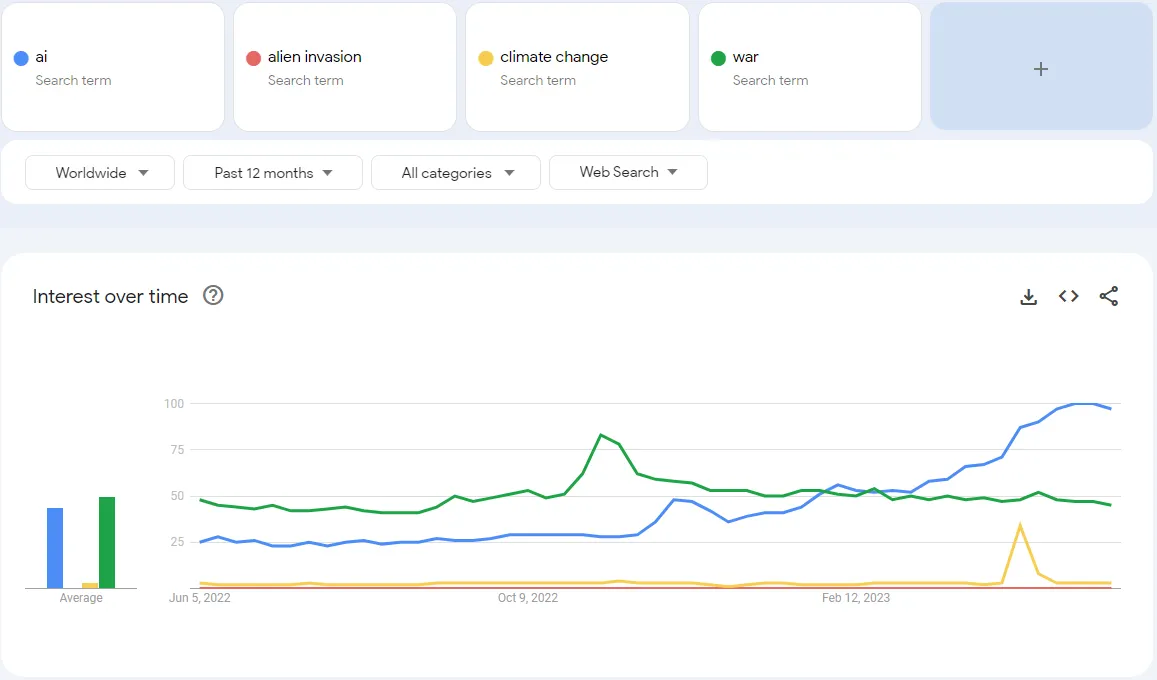

Indeed, those industry leaders were no more informative than a chatbot with a canned response. In the realm of global threats, AI seems to have suddenly jumped the queue, beating climate change, geopolitical conflicts, and even alien invasions in terms of Google keyword searches.

Interestingly, companies tend to advocate for regulation when it aligns with their interests. This could be read as their way of saying, "We wish to have a hand in shaping these rules." It's akin to the fox petitioning for new rules in the henhouse.

It’s also notable that OpenAI’s CEO, Sam Altman, has been pushing for regulation in the US, yet he threatened to leave Europe if the continent’s politicians kept trying to regulate AI. “We’re gonna try to comply,” Altman said during a panel at University College London. “If we can comply, we will. And if we can’t, we’ll cease operating.”

To be fair, he backtracked a couple of days later, saying that OpenAI had no plans to leave Europe. This, of course, came after he had the chance to discuss the issue with regulators during a “very productive week.”

> very productive week of conversations in europe about how to best regulate AI! we are excited to continue to operate here and of course have no plans to leave.
>
> Sam Altman (@sama), May 26, 2023

## AI Is Risky, But Is It That Risky?

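The potential hazards of artificial intelligence have not gone unnoticed among experts. An earlier open letter, signed by 31,810 endorsers including Elon Musk, Steve Wozniak, Yuval Noah Harari, and Andrew Yang, called for a pause of at least six months in the training of AI systems more powerful than GPT-4, time that labs and independent experts would use to develop shared safety protocols.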

“These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt,” the letter says, clarifying that “This does not mean a pause on AI development in general, merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities.”

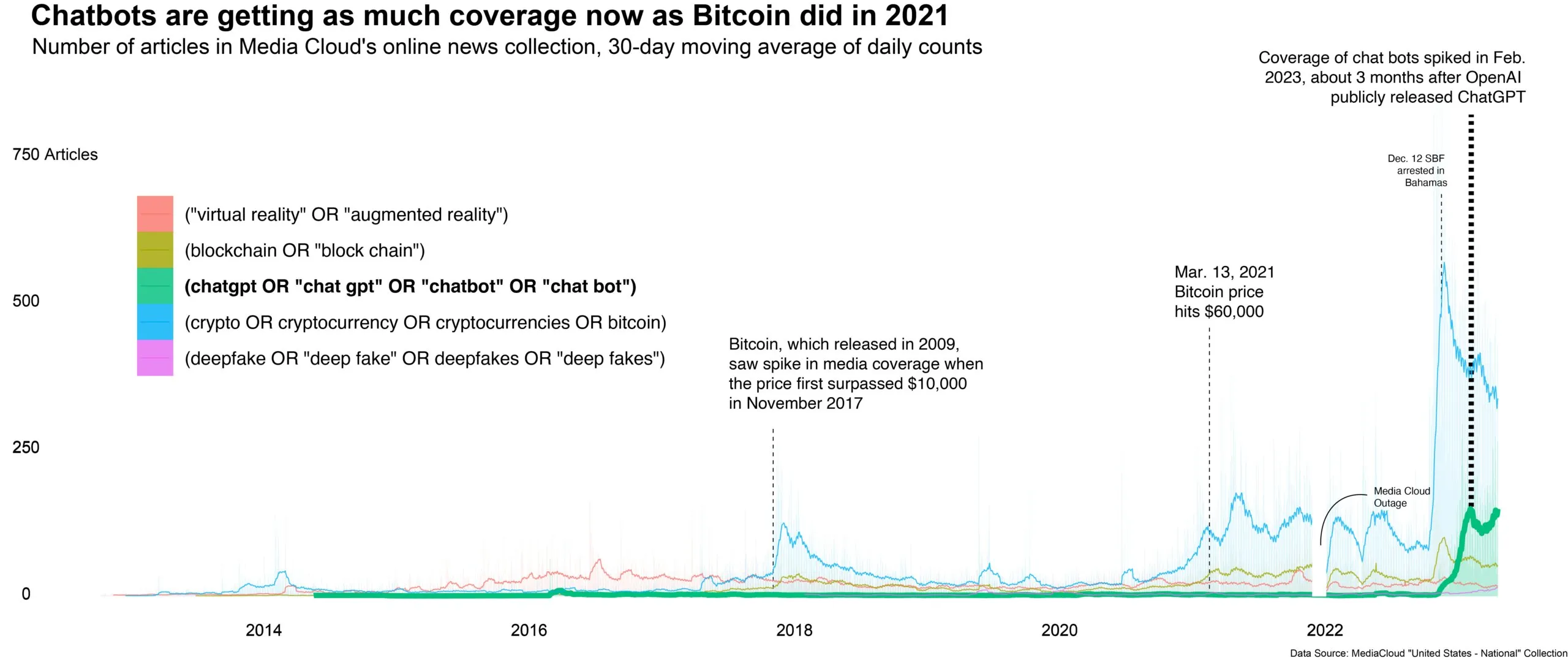

The prospect of an AI "foom", in which an AI becomes capable of improving its own systems and raises its capabilities until it surpasses human intelligence, has been discussed for years. However, today's rapid pace of change, coupled with heavy media coverage, has thrust the debate into the global spotlight.

This has produced diverse views on how AI will (not merely could) shape the future of social interaction.

Some envision a utopian era where aligned AI and humans interact, and technological advancement reigns supreme. Others believe humanity will adapt to AI, with new jobs created around the technology, similar to the job growth that followed the invention of the automobile. Yet others maintain that AI stands a significant chance of maturing and becoming uncontrollable, posing a real threat to humanity.

In the meantime, it’s business as usual in the world of AI. Keep an eye on your ChatGPT, your Bard, or your Siri: they might be just one software update away from ruling the world. But for now, it seems that humanity’s biggest threat is not our own inventions, but rather our boundless talent for hyperbole.