The team at Novasky, a “collaborative initiative led by students and advisors at UC Berkeley’s Sky Computing Lab,” has done what seemed impossible just months ago: They've created a high-performance AI reasoning model for under $450 in training costs.

Unlike traditional LLMs that simply predict the next word in a sentence, so-called “reasoning models” are designed to understand a problem, analyze different approaches to solve it, and execute the best solution. That makes these models harder to train and configure, because they must “reason” through the whole problem-solving process instead of just predicting the best response based on their training dataset.

That’s why a ChatGPT Pro subscription, which runs the latest o3 reasoning model, costs $200 a month—OpenAI argues that these models are expensive to train and run.

The new Novasky model, dubbed Sky-T1, is comparable to OpenAI’s first reasoning model, known as o1 (aka Strawberry), which was released in September 2024 and costs users $20 a month. By comparison, Sky-T1 is a 32-billion-parameter model capable of running locally on home computers, provided you have a beefy 24GB GPU, like an RTX 4090 or an older 3090 Ti. And it’s free.

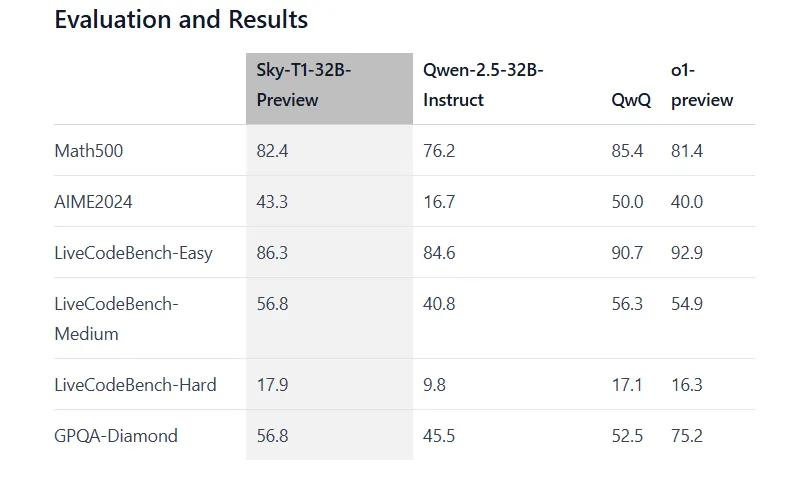

We're not talking about some watered-down version. Sky-T1-32B-Preview achieves 43.3% accuracy on AIME2024 math problems, edging out OpenAI o1-preview’s 40%. On LiveCodeBench-Medium, it scores 56.8% compared to o1-preview's 54.9%. The model maintains strong performance across other benchmarks too, hitting 82.4% on Math500 problems where o1-preview scores 81.4%.

The timing couldn't be more interesting. The AI reasoning race has been heating up lately, with OpenAI's o3 turning heads by outperforming humans on general intelligence benchmarks, sparking debates about whether we're seeing an early form of artificial general intelligence, or AGI. Meanwhile, China's DeepSeek-V3 made waves last year by outperforming OpenAI's o1 while using fewer resources and also being open-source.

> 🚀 Introducing DeepSeek-V3!
>
> Biggest leap forward yet:
>
> ⚡ 60 tokens/second (3x faster than V2!)
>
> 💪 Enhanced capabilities
>
> 🛠 API compatibility intact
>
> 🌍 Fully open-source models & papers
>
> 🐋 1/n pic.twitter.com/p1dV9gJ2Sd
>
> — DeepSeek (@deepseek_ai) December 26, 2024

But Berkeley's approach is different. Instead of chasing raw power, the team focused on making a powerful reasoning model accessible to the masses as cheaply as possible, building a model that is easy to fine-tune and run on local computers without pricey corporate hardware.

“Remarkably, Sky-T1-32B-Preview was trained for less than $450, demonstrating that it is possible to replicate high-level reasoning capabilities affordably and efficiently. All code is open-source,” Novasky said in its official blog post.

Currently, OpenAI doesn’t offer access to its reasoning models for free, though it does offer free access to a less sophisticated model.

The prospect of fine-tuning a reasoning model for domain-specific excellence at under $500 is especially compelling to developers, since such specialized models can potentially outperform more powerful general-purpose models in targeted domains. This cost-effective specialization opens new possibilities for focused applications across scientific fields.

The team trained their model for just 19 hours using Nvidia H100 GPUs, following what they call a "recipe" that most devs ought to be able to replicate. The training data looks like a greatest hits of AI challenges.

“Our final data contains 5K coding data from APPs and TACO, and 10k math data from AIME, MATH, and Olympiads subsets of the NuminaMATH dataset. In addition, we maintain 1k science and puzzle data from STILL-2,” Novasky said.
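To put that mix in proportion, here is a quick back-of-the-envelope tally in Python. It treats the quoted "5K," "10k," and "1k" figures as round numbers, which is an assumption; the exact counts may differ slightly.

```python
# Example counts as quoted by Novasky, treated as round numbers (an assumption).
DATA_MIX = {
    "coding (APPs, TACO)": 5_000,
    "math (NuminaMATH subsets)": 10_000,
    "science and puzzles (STILL-2)": 1_000,
}


def mix_shares(mix):
    """Return each category's share of the total as a fraction between 0 and 1."""
    total = sum(mix.values())
    return {name: count / total for name, count in mix.items()}


if __name__ == "__main__":
    print(f"total examples: {sum(DATA_MIX.values()):,}")
    for name, share in mix_shares(DATA_MIX).items():
        print(f"{name}: {share:.1%}")
```

On those round numbers, the set comes to roughly 16,000 examples, with math making up a bit under two-thirds of it.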

The dataset was varied enough to help the model think flexibly across different types of problems. Novasky used QwQ-32B-Preview, another open-source reasoning AI model, to generate the data and fine-tune a Qwen2.5-32B-Instruct open-source LLM. The result was a powerful new model with reasoning capabilities, which became Sky-T1.
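For a rough sense of how 19 hours of H100 time squares with a bill under $450, the sketch below works out the implied price per GPU-hour. The 19 hours and the $450 ceiling come from the reporting above; the GPU count is not stated here, so the value of 8 is purely an assumption for illustration, and `implied_hourly_rate` is a hypothetical helper.

```python
def implied_hourly_rate(total_cost_usd, hours, num_gpus):
    """Price per GPU-hour implied by a total cost, wall-clock hours, and GPU count."""
    gpu_hours = hours * num_gpus
    return total_cost_usd / gpu_hours


TRAINING_HOURS = 19      # reported training time
COST_CEILING_USD = 450   # reported upper bound on training cost
ASSUMED_NUM_GPUS = 8     # assumption: the GPU count is not given in this article

rate = implied_hourly_rate(COST_CEILING_USD, TRAINING_HOURS, ASSUMED_NUM_GPUS)
print(f"{TRAINING_HOURS * ASSUMED_NUM_GPUS} GPU-hours, "
      f"at most ${rate:.2f} per GPU-hour")
```

Change `ASSUMED_NUM_GPUS` to see how the implied rate moves; with fewer GPUs, the same budget allows a higher hourly price.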

A key finding from the team's work: bigger is still better when it comes to AI models. Their experiments with smaller 7-billion- and 14-billion-parameter versions showed only modest gains. The sweet spot turned out to be 32 billion parameters: large enough to avoid repetitive outputs, but not so massive that it becomes impractical.

If you want to have your own version of a model that beats OpenAI o1, you can download Sky-T1 on Hugging Face. If your GPU isn’t powerful enough but you still want to try it out, there are quantized versions that go from 8 bits all the way down to 2 bits, so you can trade accuracy for speed and test the next best thing on your potato PC.

Just be aware: The developers warn that such levels of quantization are “not recommended for most purposes.”
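To see why quantization matters on consumer hardware, here is a rough Python estimate of how much memory the model's weights alone would need at different bit widths. It assumes exactly 32 billion parameters and ignores everything else that takes up GPU memory (the context cache, activations, and per-format overhead), so real file sizes and memory use will differ.

```python
PARAMS = 32e9        # assumption: exactly 32 billion parameters
GPU_MEMORY_GB = 24   # the 24GB card class mentioned above


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4, 2):
    size_gb = weight_memory_gb(PARAMS, bits)
    verdict = "fits" if size_gb <= GPU_MEMORY_GB else "does not fit"
    print(f"{bits:>2}-bit weights: ~{size_gb:.0f} GB ({verdict} in {GPU_MEMORY_GB} GB)")
```

By this estimate, the weights shrink by half each time the bit width is halved, which is why the lower-bit versions can squeeze onto smaller cards at the cost of accuracy.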

Edited by Andrew Hayward