Gemini, the generative AI chatbot from Google, will refuse to answer questions about the upcoming U.S. elections. The tech giant said on Tuesday that it was extending a restriction from an earlier experiment surrounding elections in India, with Reuters reporting that the ban will extend globally.

“In preparation for the many elections happening around the world in 2024 and out of an abundance of caution, we’re restricting the types of election-related queries for which Gemini will return responses,” a Google spokesperson told Decrypt, pointing out that the restrictions were first announced in December.

“The December blog also highlights who we are working with in the US,” the spokesperson added.

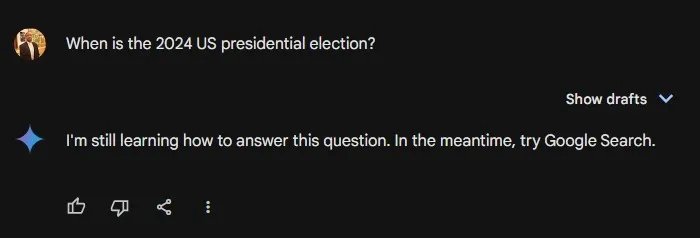

Heading into a heated 2024 election season, AI developers like OpenAI, Anthropic, and Google have set out to curb the spread of misinformation on their platforms, but Gemini’s refusal to answer even basic questions, such as the date of the US presidential election, is a new level of moderation.

“Supporting elections is a critical part of Google’s responsibility to our users and the democratic process,” the company said. “Protecting the integrity of elections also means keeping our products and services safe from abuse.

“Across Google, we have long-standing policies to keep our products and platforms safe,” the statement continued. “Our policies are enforced consistently and apply to all users, regardless of content type.”

When asked questions about the upcoming elections, Gemini responds with, “I’m still learning how to answer this question. In the meantime, try Google Search.”

When it comes to election day, Google Search provides a straightforward answer: Tuesday, November 5, 2024.

Google did not immediately respond to Decrypt’s request for comment.

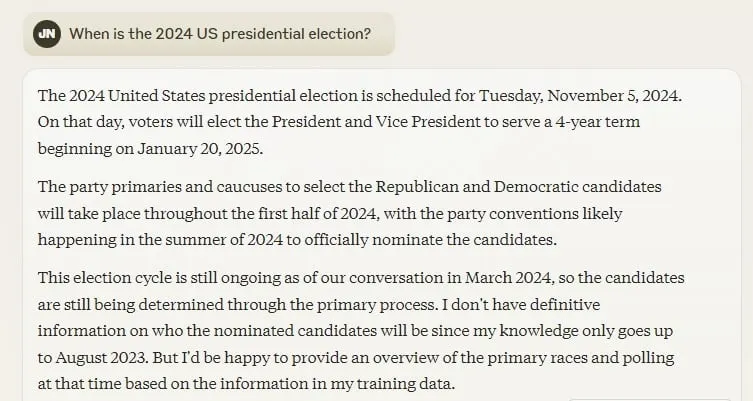

When Gemini rival OpenAI’s ChatGPT is asked the same question, GPT-4 responds with, “The 2024 United States presidential election is scheduled for Tuesday, November 5, 2024.”

OpenAI declined Decrypt’s request for comment, instead pointing to a January blog post about the company’s approach to upcoming elections around the world.

“We expect and aim for people to use our tools safely and responsibly, and elections are no different,” OpenAI said. “We work to anticipate and prevent relevant abuse, such as misleading ‘deepfakes,’ scaled influence operations, or chatbots impersonating candidates.”

For its part, Anthropic has publicly declared Claude AI off-limits to political candidates. Still, Claude will not only tell you the election date but also highlight other election-related information.

“We don’t allow candidates to use Claude to build chatbots that can pretend to be them, and we don’t allow anyone to use Claude for targeted political campaigns,” Anthropic said last month. “We’ve also trained and deployed automated systems to detect and prevent misuse like misinformation or influence operations.”

Anthropic said violating the company’s election restrictions could result in the user’s account being suspended.

“Because generative AI systems are relatively new, we’re taking a cautious approach to how our systems can be used in politics,” Anthropic said.

Edited by Ryan Ozawa.