Nvidia flexed its AI muscles this week, unveiling a slew of next-generation products to usher in the new era of artificial intelligence. From what it described as a breakthrough AI-focused superchip to more intuitive developer tools, Nvidia clearly intends to remain the engine powering the AI revolution.

This year’s Nvidia presentation at SIGGRAPH 2023, an annual conference devoted to computer graphics technology and research, was almost entirely about AI. Nvidia CEO Jensen Huang said that generative AI represents an inflection point akin to the internet revolution decades ago. He said the world is moving toward a new era in which most human-to-computer interactions will be powered by AI.

“Every single application, every single database, whatever you interact with within a computer, you’ll likely be first engaging with a large language model,” Huang said.

By combining software and specialized hardware, Nvidia is positioning itself as the missing link in realizing the full potential of AI.

Grace Hopper Superchip debuts for AI training

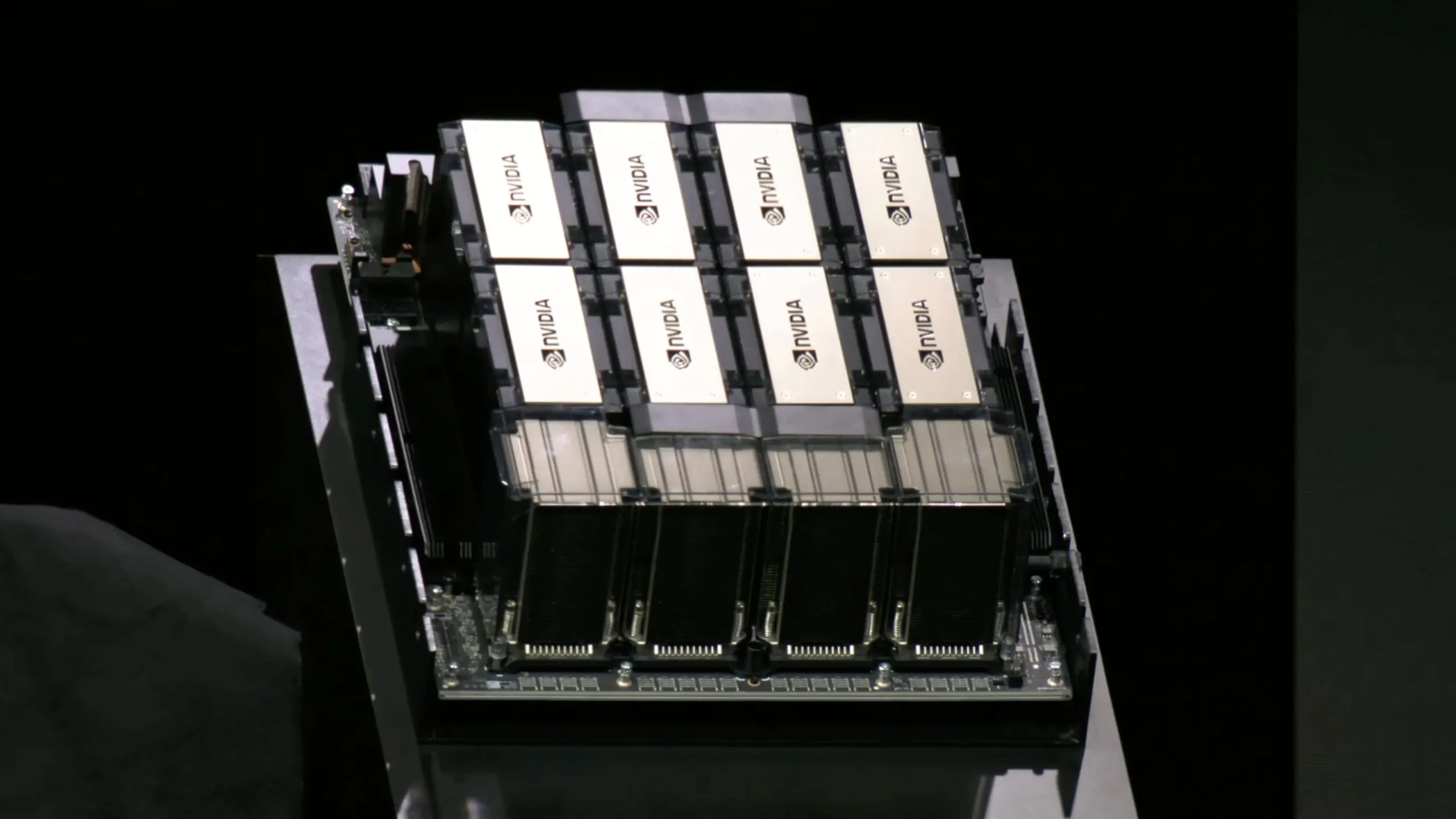

The star of the show was the new Grace Hopper Superchip GH200, the first GPU with High Bandwidth Memory 3e (HBM3e). With up to 2TB/s of bandwidth, HBM3e provides nearly three times the bandwidth of the previous generation HBM2e.

Nvidia defines its Grace Hopper chip as an “accelerated CPU designed from the ground up for giant-scale AI and high-performance computing (HPC) applications.” The chip combines Nvidia’s Grace CPU and Hopper GPU architectures, and its name honors Grace Hopper, the pioneering American computer scientist.

The GH200 can deliver up to six times the training performance of Nvidia's flagship A100 GPU for large AI models, according to Huang. The GH200 is expected to be available in Q2 2024.

"GH200 is a new engine for training and inference," Huang said, adding that "future frontier models will be built this way." He said this new superchip “probably even runs Crysis”—a first-person shooter video game with notoriously heavy hardware requirements.

Ada Lovelace GPU architecture comes to workstations

Nvidia also had news for workstation users. The chip manufacturer unveiled its newest RTX workstation GPUs based on its Ada Lovelace architecture: the RTX 5000, RTX 4500 and RTX 4000. With up to 7,680 CUDA cores, these GPUs deliver up to 5x the performance of previous-generation boards for AI development, 3D rendering, video editing and other demanding professional workflows.

The flagship RTX 6000 Ada remains the top choice for professionals requiring maximum performance, but the new lineup extends the Ada Lovelace architecture to a broader range of users. The RTX 4000, 4500 and 5000 will be available starting in Q3 2023 from major OEMs.

However, these new offerings are not cheap. Pricing for the RTX 4000 starts at $1,250 and the RTX 5000 at around $4,000.

For professionals and enterprises taking their AI initiatives to the next level, Nvidia unveiled its new data center-scale GPU, the Nvidia L40. With up to 18,176 CUDA cores and 48 GB of VRAM, the L40 provides up to 9.2x higher AI training performance than the A100.

Nvidia says global server manufacturers plan to offer the L40 in their systems, allowing businesses to train gigantic AI models with optimal efficiency and cost savings. Paired with Nvidia software, the L40 could provide a complete solution for organizations embracing AI.

Cloud-native microservices elevate video communications

Continuing its push into powering video applications, Nvidia also announced a new suite of GPU-accelerated software development kits and a cloud-native service for video editing called Maxine.

Powered by AI, Maxine offers capabilities like noise cancellation, super resolution upscaling, and simulated eye contact for video calls, allowing remote users to have natural conversations from nearly anywhere.

Nvidia says visual storytelling partners have already integrated Maxine into workflows like video conferencing and video editing.

Toolkit simplifies generative AI development

Finally, Nvidia announced the upcoming release of AI Workbench, a unified platform that streamlines developing, testing and deploying generative AI models.

By providing a single interface to manage data, models, and resources across machines, AI Workbench enables seamless collaboration and scaling from a local workstation up to cloud infrastructure.

With its latest slate of offerings spanning hardware, software and services, Nvidia says it intends to accelerate enterprise adoption of AI through a comprehensive technology stack built to handle the many complexities of AI.