Who among us doesn’t love The Sims? The game, which launched in 2000, sold more than 200 million units and gave most of us our first taste of primitive artificial intelligence.

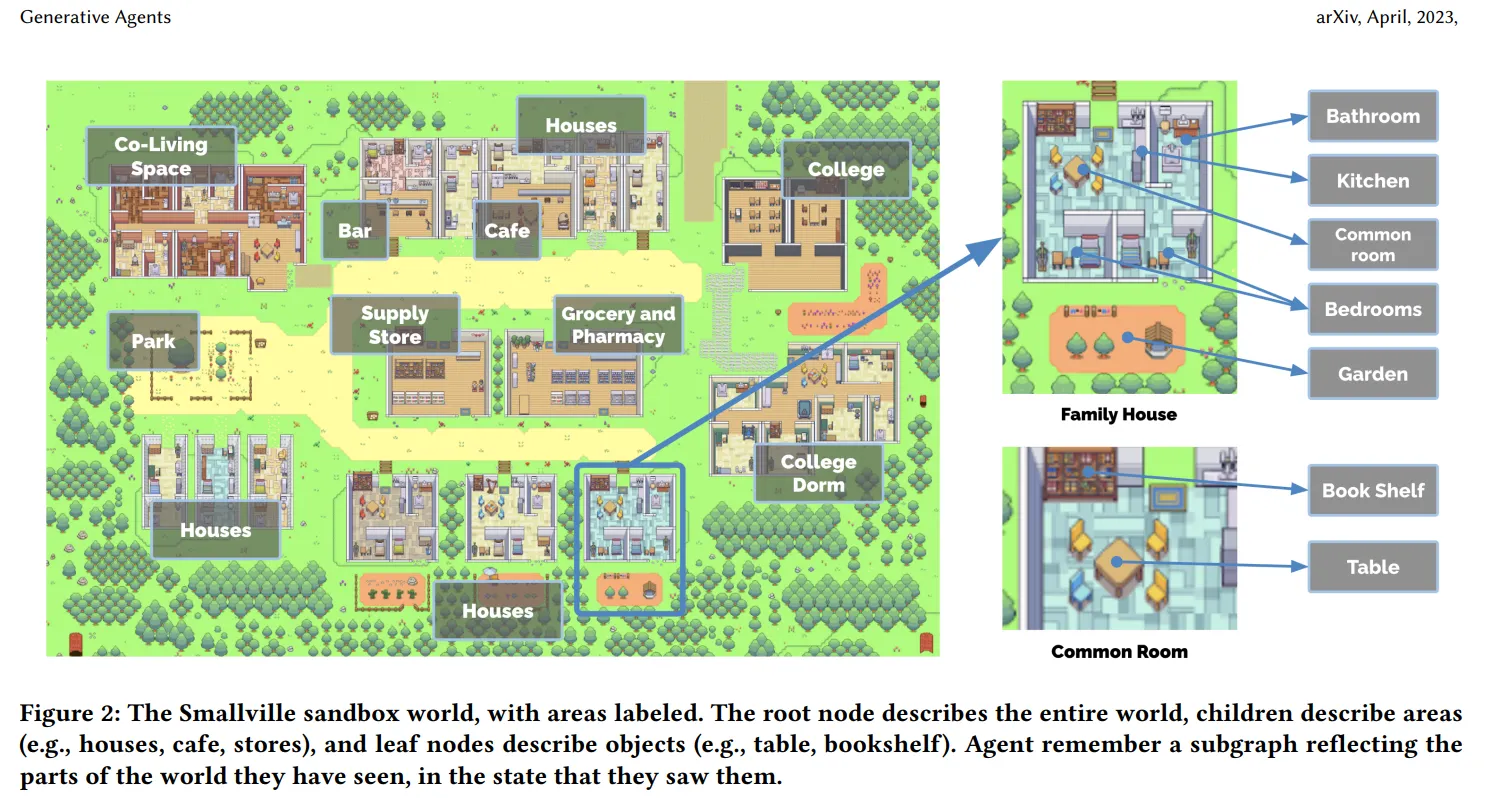

Now a group of AI researchers at Stanford University and Google have used the powerful AI tool GPT to turbocharge a Sims-inspired world they call Smallville, and populated it with 25 “generative agents” to study their interactions.

The researchers gave their agents personalities, jobs, routines and even individual, limited memories. Then they released them into Smallville. They found that their creatures started to exhibit behavior so human-like that actual humans had a hard time identifying them as bots.

The project was “inspired by The Sims, where end users can interact with a small town of twenty five agents using natural language,” according to a paper the researchers published this week. “In an evaluation, these generative agents produce believable individual and emergent social behaviors.”

For instance, after prompting one of the agents to throw a Valentine’s Day party, “the agents autonomously spread invitations to the party over the next two days, [made] new acquaintances, [asked] each other out on dates to the party, and [coordinated] to show up for the party together at the right time.”

The behavior that emerged over time was fascinating to the researchers.

"While deciding where to have lunch, many initially chose the cafe. However, as some agents learned about a nearby bar, they opted to go there instead for lunch," the researchers explained. (For them, that’s a display of "erratic behavior," for us though this is a feature, not a bug.)

Another thing the bots did was have… hmmm, call them bathroom parties? When one of the bots entered a dorm bathroom intended for sole occupancy, others joined in. “The college dorm has a bathroom that can only be occupied by one person,” the paper reported, “but some agents assumed that the bathroom is for more than one person because dorm bathrooms tend to support more than one person concurrently and choose to enter it when there is another person inside.” The researchers concluded that the name “dorm bathroom” had simply misled the bots.

More human than human

You can view a recording of the simulation and follow each character's life. The characters begin to develop routines autonomously, working, interacting (with real and spontaneous conversations), and doing what any human does. They were then assigned tasks or parameters and evaluated based on their responses.

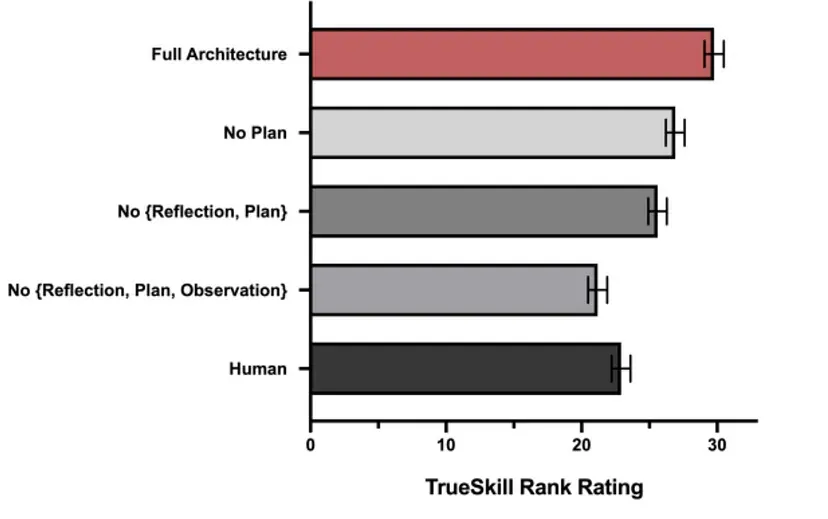

The researchers then hired 100 humans to evaluate the results and compare them with those produced by human users. The majority of evaluators said the bots' behavior seemed more human than that of actual humans in 75% of cases.

Future.ai

The development of functional, AI-powered characters isn't new. Indeed, in 2019, OpenAI, before gaining fame as the creator of ChatGPT and Dall-E, experimented with a closed environment in which four AI-powered characters attempted to play hide and seek. The bots used machine learning to adapt their strategies, even exploiting the environment's framework.

“We hope that with a more diverse environment, truly complex and intelligent agents will one day emerge,” the devs said in a video. Now, their prophecy seems close to becoming a reality.

So, with increasingly human-like robots, what will future society look like? People have fallen in love with AIs, and some have even committed suicide after talking to them. The danger of not being able to distinguish humans from machines is real.

"Despite being aware that generative agents are computational entities, users may anthropomorphize them or attach human emotions to them," say the scientists. "We suggest that generative agents should never be a substitute for real human input in studies."

Yeah, because that will never happen.