The company that renamed itself to focus on the metaverse spent most of its keynote presentation at the annual Meta Connect conference talking about artificial intelligence, further validating the sudden dominance and omnipresence of AI tech this year.

Meta executives laid down a robust blueprint of how AI will be the linchpin in many company initiatives—including crafting the metaverse.

The day’s spotlight was on MetaAI, a personal assistant echoing the functionalities of Siri but with a broader spectrum of multimodal abilities. This AI companion isn't just verbally interactive—it can communicate using voice, text, and even physical gestures. Notably, it can be paired with Meta's upcoming smart glasses, developed in partnership with eyewear giant Ray-Ban and available for preorder for $299.

Users wearing Meta's upcoming AR glasses can interact with MetaAI as it identifies real-world objects, responds to natural language prompts, and accomplishes tasks. For example, users can ask MetaAI for facts about a specific place they are looking at or an art piece in front of them, or to capture a photo or video of a specific moment.

But the company's updated AI capabilities go further. Restyle, a new feature, allows users to edit their Instagram pictures using text, akin to the inpainting in Stable Diffusion. This textual photo editing feature expands how users can interact with images, leveraging Meta’s multimodal AI capabilities.

Meta also introduced Backdrop, another AI-driven editing tool, which identifies and separates elements of a picture for subsequent edits like altering backgrounds or recoloring an outfit. The feature is pitched as a way to create new imagery, not just to edit existing photos.

Powering many of these innovations is Emu, Meta's new text-to-image model. Emu can generate high-fidelity images in seconds, providing MetaAI and other applications ample material to build immersive virtual worlds. Emu integrates with Meta’s LLaMA-2 text generator and MetaAI as an all-in-one AI companion.

Multimodal AI combining computer vision, natural language processing, speech recognition, and other modalities is a cornerstone of Meta's approach.

Meta also did not skimp on safety. The company introduced a LLaMA-2 “red team” that aims to button down the company’s AI language model, which is especially critical since its open-source nature could create a Pandora's box of uncensored content.

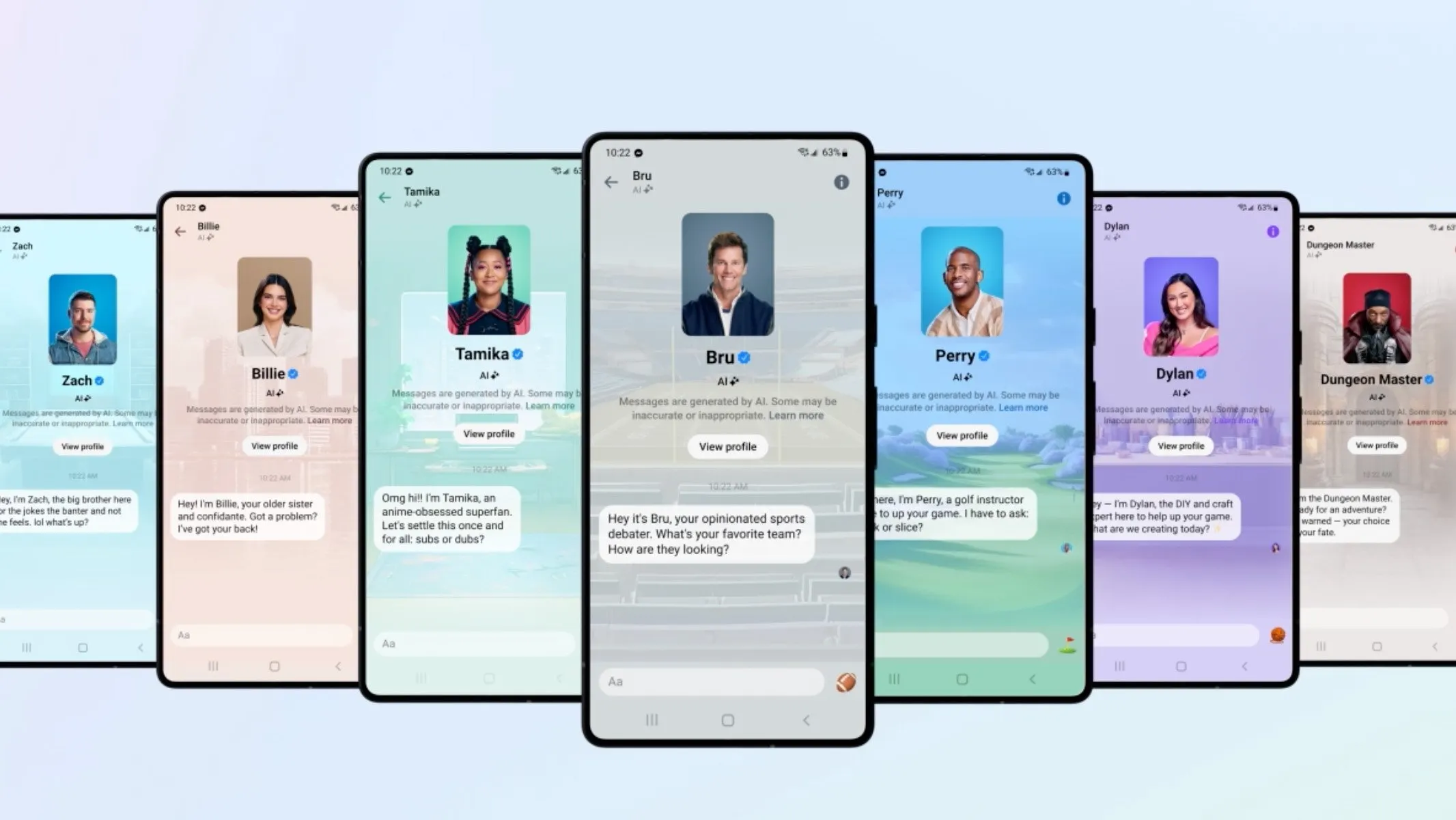

Meta is also launching 28 AI characters for users to interact with. “Some are played by cultural icons and influencers, including Snoop Dogg, Tom Brady, Kendall Jenner, and Naomi Osaka,” Meta said in its official announcement.

To scale its AI ambitions, Meta announced a new partnership with Amazon Web Services (AWS) to let AWS customers run LLaMA-2 with just an API key, a move that significantly lowers the barrier for developers keen on exploring the AI domain. Meanwhile, Meta’s new AI Studio is a toolkit for developers to create AI products across various domains including fashion, design, and gaming.

Meta CTO Andrew Bosworth eloquently summarized the narrative of Meta's AI voyage during his keynote, saying, "AI is shaping the way we build the metaverse." The intent was clearly to show a symbiotic relationship between AI and the metaverse rather than a shift in focus in Meta’s business model.