In the digital age, AI has emerged as an unexpected ally against loneliness. As we spend more of our lives online, artificial intelligence is stepping up to offer companionship and even psychological therapy. But can AI truly replace human companionship?

Psychotherapist Robi Ludwig spoke to CBS News earlier this week about the impact of AI, especially now that AI tools have become a refuge for some of the 44 million Americans grappling with significant loneliness.

"We've seen that AI can work with certain populations," she said, but added, "we are complex, and AI doesn’t love you back, and we need to be loved for who we are and who we aren’t."

The rise of AI companionship underscores our inherent need for interaction. Humans tend to form attachments to almost anything, whether animals, gods, or even AI, and treat it as if it were another person. It's in our nature, and it's why we react emotionally to fictional characters in movies, even when we know the events aren't real.

Professionals know this, and AI was being used in the psychological field well before ChatGPT went mainstream.

For example, Woebot, a chatbot developed by psychologists from Stanford University, uses cognitive-behavioral therapy (CBT) techniques to interact with users and provide mental health support. Another example is Replika, an AI companion designed to provide emotional support. Users can have text conversations with Replika about their thoughts and feelings, and the AI uses machine learning to respond in a supportive and understanding manner.

Human-like is not Human

This emotional connection with AI is particularly pronounced among those battling loneliness. For many people, AI offers a semblance of companionship, a digital entity to interact with when human contact is scarce. It's a trend that has been on the rise, as more people turn to AI for comfort and conversation, with varying results.

On one hand, people are sharing their experiences using ChatGPT to deal with real issues. "What is the difference between having an emotional affair with a chatbot and using a human person to 'move on' from an ex?" asked Reddit user u/External-Excuse-5367. "I think this way of coping might actually mitigate some damage done to other people or even my ex." This user said they trained ChatGPT on a set of their past conversations with their ex.

In many ways, as long as the interactions feel real, people care less and less whether they are. "Couldn't the relationship I had also been an illusion in a lot of ways?" the Reddit user pondered. "What is the difference between that and the generated words on a screen? Both make me feel good in the moment."

But there's another side to the coin. AI's inability to truly understand human emotion can lead to unforeseen consequences.

For instance, a series of chats between a man and an AI chatbot culminated in his suicide. "We will live together, as one person, in paradise," was one of the things the chatbot, Eliza, told the man. "He was so isolated in his eco-anxiety and in search of a way out that he saw this chatbot as a breath of fresh air," his wife told the Belgian news outlet La Libre. "She had become his confidante."

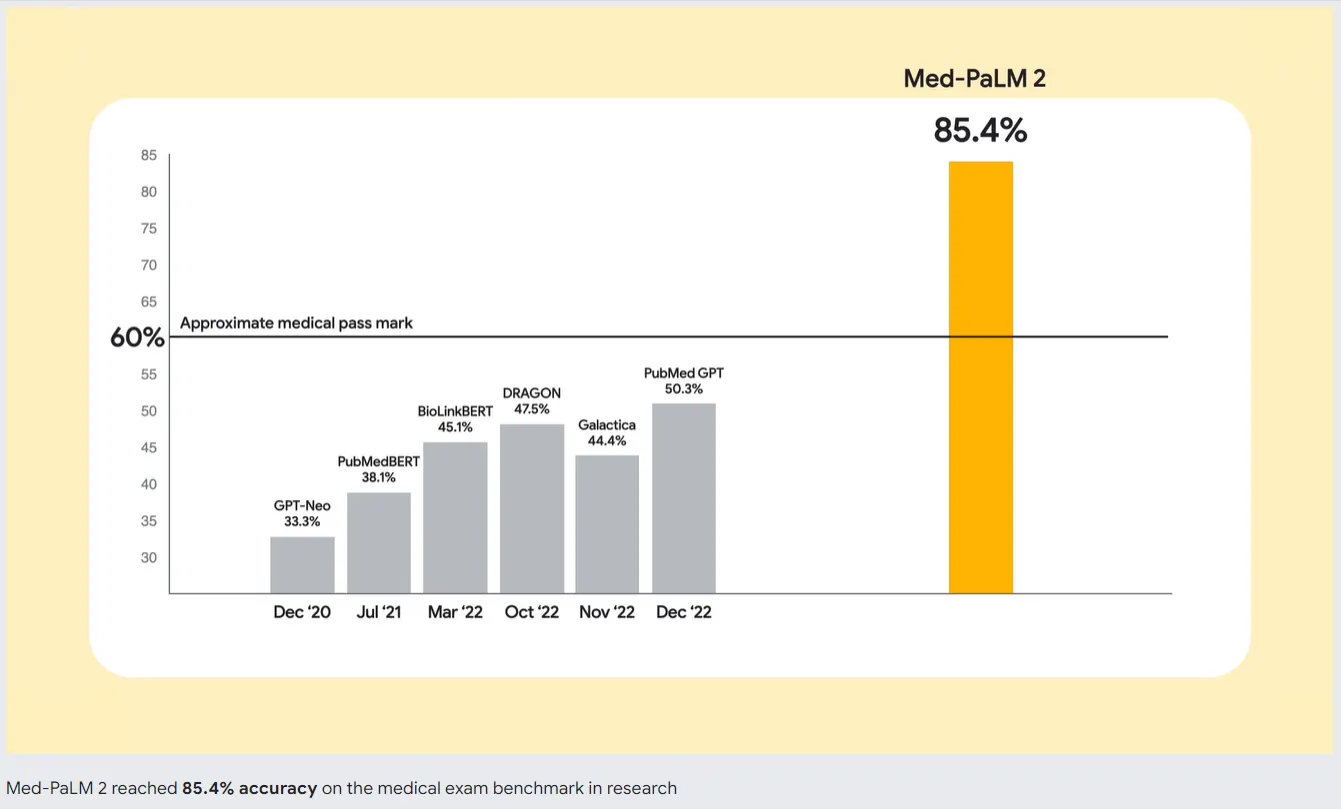

To address this issue, AI developers are working on better models. Google is among the front-runners with Med-PaLM 2, a large language model (LLM) trained specifically on medical data. This specialized model is designed to understand and generate human-like text based on a vast array of medical literature, clinical guidelines, and other healthcare-related documents.

So, if you're considering replacing good therapy with an AI, you may want to think twice. Even the best chatbots can mimic conversation and provide a sense of interaction, but they are no substitute for human companionship. A crucial aspect of human interaction is the ability to perceive and respond to emotional cues. ChatGPT can't tell whether you're lying, hiding something, or truly sad; a good therapist, or even a friend, picks up on these cues naturally.