In brief

- Actor Jamie Lee Curtis called out Meta CEO Mark Zuckerberg after discovering a fake AI-generated ad used her likeness without consent.

- The ad manipulated past interview footage to falsely portray her endorsing a product she never approved.

- Meta removed the ad within hours, as backlash over unauthorized deepfakes targeting public figures continues to grow.

Academy Award-winning actor Jamie Lee Curtis publicly confronted Meta CEO Mark Zuckerberg on Monday after discovering that her likeness had been used without permission in an AI-generated advertisement.

“It’s come to this @zuck,” Curtis wrote on Instagram, tagging Zuckerberg directly after unsuccessfully attempting to contact Meta and the CEO through private channels.

The ad, which Curtis did not name, repurposed footage from a past MSNBC interview she gave during the Los Angeles wildfires, altering her speech with artificial intelligence to promote an undisclosed product.

Curtis said the result was a misrepresentation that compromised her reputation for truth-telling and personal integrity.

“This (MIS)use of my images… with new, fake words put in my mouth, diminishes my opportunities to actually speak my truth,” she wrote.

By late afternoon, the actor confirmed the ad had been pulled. “IT WORKED! YAY INTERNET! SHAME HAS IT’S VALUE!” she posted on Instagram, thanking her supporters.


Meta has not issued a public statement about the removal but confirmed to media outlets that the ad had been taken down.

Curtis, best known for her breakout role in John Carpenter’s "Halloween" and more recently for her Oscar-winning performance in "Everything Everywhere All at Once," has had a decades-long career marked by both mainstream visibility and a strong public voice.

AI deepfakes spark concern

Her decision to go public with the appeal comes amid growing concern over the unchecked use of generative AI to replicate the identities of real people, often without consent or legal accountability.

In February, Israeli AI artist Ori Bejerano released a video using unauthorized AI-generated versions of Scarlett Johansson, Woody Allen, and OpenAI CEO Sam Altman.

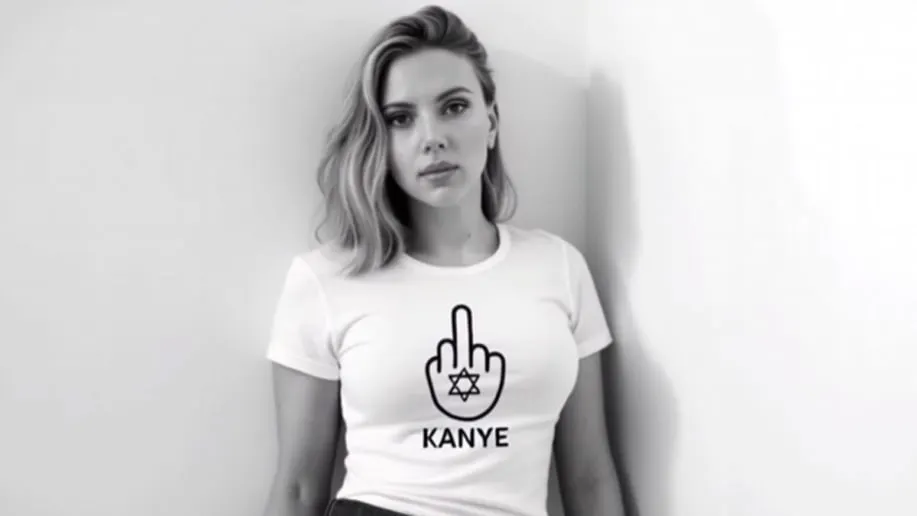

Scarlett Johansson, AI Enthusiasts Slam Anti-Kanye West Video That Used Celeb Deepfakes

An Israeli AI artist's attempt to combat Kanye West's antisemitic posts with non-consensual celebrity deepfakes has drawn sharp criticism from actress Scarlett Johansson and other public figures. Kanye West went into another one of his neo-Nazi streaks last week and posted a lot of antisemitic content on X. It culminated with a predictably bizarre TV ad shown during the Super Bowl in LA for his online clothing shop. Shortly after the ad dropped, all the products were removed from the store excep...

The video, a response to a Super Bowl ad by Kanye West that directed viewers to a website selling a swastika T-shirt, depicted the fake celebrities wearing parody T-shirts featuring the Star of David in a stylized rebuttal to West’s imagery.

“I have no tolerance for antisemitism or hate speech,” Johansson said in condemning the video, “but I also firmly believe that the potential for hate speech multiplied by A.I. is a far greater threat than any one person who takes accountability for it.”

Even the wildfires Curtis referenced in her original interview became a target for AI-powered disinformation.

Fabricated images showing the Hollywood Sign engulfed in flames and scenes of mass looting circulated widely on X, formerly Twitter, prompting statements from officials and fact-checkers clarifying that the images were completely false.